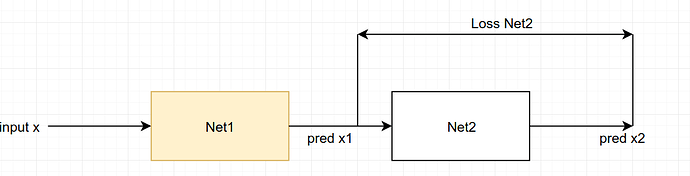

I have two networks, `net1` and `net2`, and an input `x`. I feed `x` to `net1` to generate the prediction `pred_x1`. Then `pred_x1` is fed to `net2` to generate the prediction `pred_x2`.

I want to freeze `net1` while training `net2`. The loss is the MSE between `pred_x1` and `pred_x2`. My implementation is below, but when I checked, the weights of `net1` were still being updated during training. How can I fix this?

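```python
import torch

# Model_net1, Model_net2, x, mse and optimizer are defined elsewhere (not shown)
net1 = Model_net1()
net1.eval()

net2 = Model_net2()
net2.train()

# Freeze net1's parameters
for param in net1.parameters():
    param.requires_grad = False

# net1's output is computed without tracking gradients
with torch.no_grad():
    pred_x1 = net1(x)

pred_x2 = net2(pred_x1)

loss = mse(pred_x1, pred_x2)
loss.backward()
optimizer.step()
```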
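For reference, here is a minimal self-contained sketch of the same setup that can be run to check whether `net1` changes. It assumes small hypothetical `nn.Sequential` models in place of `Model_net1`/`Model_net2`, random data for `x`, `nn.MSELoss` for `mse`, and an optimizer built from `net2.parameters()` only (the question does not show how `optimizer` is created). Snapshots of each network's `state_dict()`, which also contains buffers such as BatchNorm running statistics, are compared before and after training.

```python
import copy

import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-ins for Model_net1 / Model_net2 (not from the question).
net1 = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 4))
net2 = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 4))

# Freeze net1: eval() puts layers like dropout/batch norm in inference mode,
# and requires_grad = False stops autograd from computing its gradients.
net1.eval()
for param in net1.parameters():
    param.requires_grad = False
net2.train()

# Assumption: the optimizer only receives net2's parameters.
optimizer = torch.optim.Adam(net2.parameters(), lr=1e-3)
mse = nn.MSELoss()

# Check that none of net1's parameters were handed to the optimizer.
opt_param_ids = {id(p) for group in optimizer.param_groups for p in group["params"]}
net1_in_optimizer = any(id(p) in opt_param_ids for p in net1.parameters())

# Snapshots of parameters and buffers taken before training.
net1_before = copy.deepcopy(net1.state_dict())
net2_before = copy.deepcopy(net2.state_dict())

x = torch.randn(16, 4)  # random input, for illustration only
for _ in range(5):
    optimizer.zero_grad()
    with torch.no_grad():
        pred_x1 = net1(x)  # constant target: no graph is recorded for net1
    pred_x2 = net2(pred_x1)  # gradients flow only through net2
    # MSE is symmetric; pred_x1 is passed as the (non-differentiable) target.
    loss = mse(pred_x2, pred_x1)
    loss.backward()
    optimizer.step()


def same_weights(before, after):
    """True if every tensor in two state_dicts is exactly equal."""
    return before.keys() == after.keys() and all(
        torch.equal(before[k], after[k]) for k in before
    )


net1_frozen = same_weights(net1_before, net1.state_dict())
net2_trained = not same_weights(net2_before, net2.state_dict())
print(net1_in_optimizer, net1_frozen, net2_trained)  # False True True
```

Building the optimizer from `net2.parameters()` alone keeps `net1` out of `optimizer.step()` entirely, rather than relying only on `requires_grad = False`; the `state_dict()` comparison is one way to confirm whether `net1` actually stays fixed in the real code.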