Thank you @ptrblck.

To answer your questions:

-

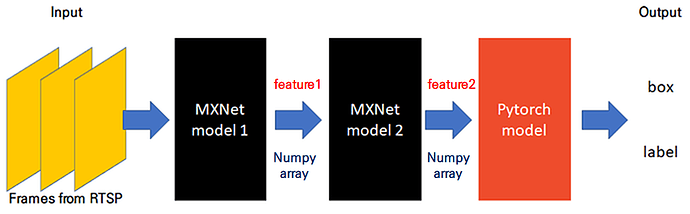

I only extract the features from MXNet1 and MXNet2 as numpy arrays, as shown in the following figure.

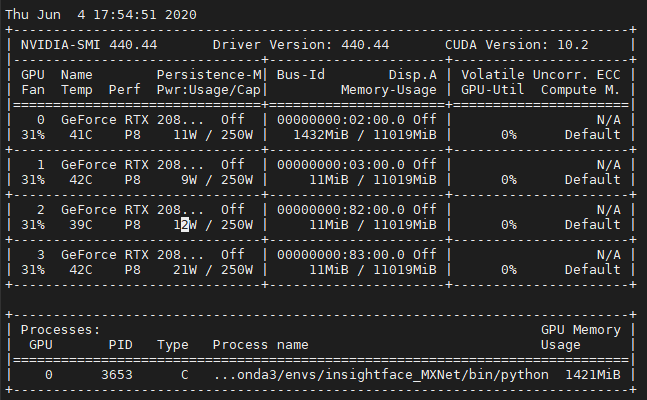

I put all the models on the same GPU (GPU:0), as you can see below.

-

The input size is not that big. Using a cv2-based library, I grab frames from an RTSP stream, resize each frame to (270, 470, 3), and pass it to the model.

-

I need to measure the processing time of each step. Is it recommended to call time.time() before and after each code block?
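Here is a minimal sketch of what I have in mind, assuming per-step wall-clock timing is enough. It uses `time.perf_counter()`, which Python provides for measuring short intervals, instead of `time.time()`. Because PyTorch and MXNet launch GPU work asynchronously, a timer read right after a call may only capture the launch. So the sketch takes an optional `sync` barrier, e.g. `torch.cuda.synchronize` or `mx.nd.waitall`. `timed` and `timings` are hypothetical helper names.

```python
import time
from contextlib import contextmanager
from typing import Callable, Dict, List, Optional

# Collected durations in seconds, keyed by step name.
timings: Dict[str, List[float]] = {}


@contextmanager
def timed(step: str, sync: Optional[Callable[[], None]] = None):
    """Record the wall-clock duration of the enclosed block under `step`.

    If `sync` is given (e.g. torch.cuda.synchronize or mx.nd.waitall),
    it is called before starting and before stopping the timer, so
    queued asynchronous GPU work is included in the measurement.
    """
    if sync is not None:
        sync()
    start = time.perf_counter()
    try:
        yield
    finally:
        if sync is not None:
            sync()
        timings.setdefault(step, []).append(time.perf_counter() - start)
```

For example, `with timed("pytorch_model", sync=torch.cuda.synchronize): out = model(x)`. Since all models share GPU:0, synchronizing around each step should keep one model's queued work from being billed to the next step.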