Hi, all.

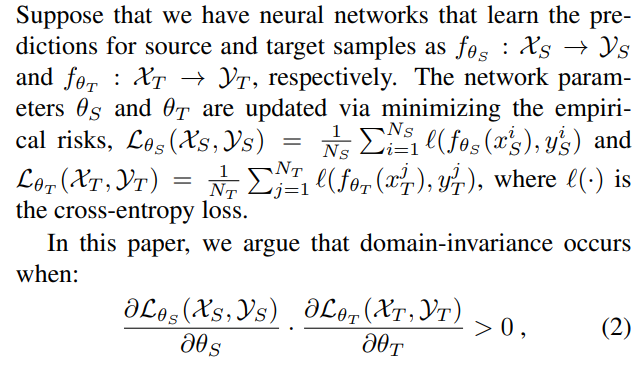

I’m trying to implement the loss like (2) in the figure.

But I don’t know how to get gradient for the loss.

Hi, all.

I’m trying to implement the loss like (2) in the figure.

But I don’t know how to get gradient for the loss.

Hi,

From reading that paragraph, I don’t think (2) defines a loss. It is just an inequality.

In general, if you want the gradients for your parameters wrt your loss in a differentiable manner (to be able to compute gradient wrt to these gradients), you can do:

grads = autograd.grad(loss, model.parameters(), create_graph=True).

@albanD Thansk for your quick reply.

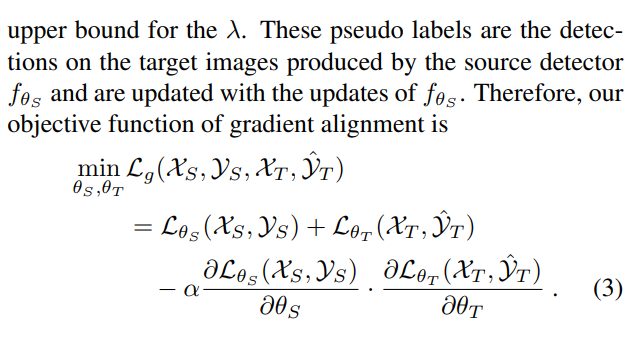

Here is he full contents.

According to your answer, I tried. but have some problems.

I’m not sure how to use autograd.grad.

With this codes, allow_unused=True and retain_graph=True is required.

And got (None, None)

optimizer_g = optim.SGD(list(encoder.parameters()), lr=0.1, momentum=0.9, weight_decay=5e-4)

optimizer_f = optim.SGD(list(classifier.parameters()), lr=0.1, momentum=0.9, weight_decay=5e-4)

...

loss = criterion(outs, labels)

optimizer_g.zero_grad()

optimizer_f.zero_grad()

loss.backward(retain_graph=True)

grads = autograd.grad(loss, classifier.parameters(), create_graph=True, allow_unused=True)

print(grads)

optimizer_g.step()

optimizer_f.step()

These gradients should be used to update the loss before doing the final .backward():

optimizer_g = optim.SGD(list(encoder.parameters()), lr=0.1, momentum=0.9, weight_decay=5e-4)

optimizer_f = optim.SGD(list(classifier.parameters()), lr=0.1, momentum=0.9, weight_decay=5e-4)

...

loss = criterion(outs, labels)

# Do you have one loss or one for each?

# If so, give only the corresponding loss for each model and remove the allow_unused

grads_f = autograd.grad(loss, classifier.parameters(), create_graph=True, allow_unused=True)

grads_g = autograd.grad(loss, encoder.parameters(), create_graph=True, allow_unused=True)

# I guess the product in the formula is a dot product?

grad_prod = 0.

for gf, gg in zip(grads_f, grads_g):

grad_prod += (gf * gg).sum()

final_loss = loss - alpha * grad_prod

optimizer_g.zero_grad()

optimizer_f.zero_grad()

loss.backward()

optimizer_g.step()

optimizer_f.step()

@albanD Sorry, I still have trouble. How can I fix this in this situation?

- Network structure:

input1 -> encoder1 ->

shared_encoder ->classifier

input2 -> encoder2 ->

optimizer_g = optim.SGD(list(encoder1.parameters()) + list(encoder2.parameters())+ list(shared_encoder.parameters()), lr=0.1, momentum=0.9, weight_decay=5e-4)

optimizer_f = optim.SGD(list(classifier.parameters()), lr=0.1, momentum=0.9, weight_decay=5e-4)

...

out1 = encoder(input1)

out1 = shared_encoder(out1)

out1 = classifier(out1)

out2 = encoder(input2)

out2 = shared_encoder(out2)

out2 = classifier(out2)

loss1 = criterion(outs1, labels)

loss2 = criterion(outs2, labels)

loss = loss1 + loss2

grads_f = autograd.grad(loss, classifier.parameters(), create_graph=True, allow_unused=True)

# How to add encoders?

grads_g = autograd.grad(loss, encoder.parameters(), create_graph=True, allow_unused=True)

grad_prod = 0.

for gf, gg in zip(grads_f, grads_g):

grad_prod += (gf * gg).sum() # Inner product

final_loss = loss - alpha * grad_prod

optimizer_g.zero_grad()

optimizer_f.zero_grad()

final_loss.backward()

optimizer_g.step()

optimizer_f.step()For the dot product in the formula to make sense, both the classifier and the encoder must have the exact same parameter structure. So you should just make sure to give the encoder is a way that you get the right gradients.