Hello,

I have a main.py in which several models (U-Net, GAN, etc.) are defined and used. I cannot share the actual code, but I can show its overall structure:

- Load the input dataset
- Function1 to preprocess the dataset
- Class1, which defines a convolutional network using PyTorch
- Load pre-trained weights into Class1 to create a network model
- Class2, which defines a convolutional network using PyTorch
- Load pre-trained weights into Class2 to create a network model
- Class3, which defines a convolutional network built from Class1 and Class2
- Train the network defined by Class3
- Save the results from the training

However, because everything is defined in one file, the code is very long. To make it more reader-friendly, I moved the functions and classes into separate files so that I can import them as shown below:

```python
# main_new.py
import random

import numpy as np
import torch

# Set seed for reproducibility
seed = 0
torch.backends.cudnn.deterministic = True
torch.backends.cudnn.benchmark = False
torch.manual_seed(seed)
torch.cuda.manual_seed(seed)
torch.cuda.manual_seed_all(seed)
np.random.seed(seed)
random.seed(seed)

# Load packages
from tools.file1 import Function1
from tools.file2 import Class1, Class2, Class3

###################################################
# Processing using Function1, Class1, Class2, Class3
###################################################
```
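One way to guarantee that both scripts seed identically is to move these lines into a single helper that main.py and main_new.py both import and call before anything else (and before importing any project module that might draw random numbers at import time). A minimal sketch; `seed_everything` is a hypothetical name, not a PyTorch function:

```python
import random

import numpy as np
import torch


def seed_everything(seed: int = 0) -> None:
    """Hypothetical helper: apply the same seeding steps as main_new.py in one call."""
    # Ask cuDNN for deterministic kernels and turn off autotuning
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False
    # Seed every RNG the scripts draw from (CUDA seeding is silently skipped without a GPU)
    torch.manual_seed(seed)
    torch.cuda.manual_seed(seed)
    torch.cuda.manual_seed_all(seed)
    np.random.seed(seed)
    random.seed(seed)


# Usage: call this first thing in both main.py and main_new.py
seed_everything(0)
```

Keeping the seeding in one function means the two entry points cannot drift apart on this step.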

This new code runs without errors. The problem is that the original main.py and the new main_new.py give different results. I want to reproduce the results of the original to convince myself that I'm not breaking anything during the modularization. Note that both main.py and main_new.py are deterministic, so I don't think the problem is due to randomness in the PyTorch libraries.

- Can the modularization (defining classes and functions in separate files) change the results?
- If so, is there any way to prevent that?

I am using Python 3.7.10 and PyTorch 1.7.1.

Can you elaborate on what you mean by different results?

Is it a difference in accuracy, loss, etc.?

AFAIK, modularization by itself shouldn't change the results.

Can you pass the same input through both networks, check the outputs layer by layer, and see whether they are equal?
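A sketch of that layer-by-layer check using forward hooks, assuming both models are ordinary `nn.Module` instances with the same submodule names. `capture_activations` and `first_mismatch` are hypothetical helpers, not PyTorch APIs:

```python
import torch
import torch.nn as nn


def capture_activations(model: nn.Module, x: torch.Tensor) -> dict:
    """Run x through model and record the output of every leaf submodule."""
    activations = {}
    handles = []
    for name, module in model.named_modules():
        if next(module.children(), None) is None:  # leaf layers only
            # Bind `name` as a default argument so each hook keeps its own layer name
            def hook(mod, inputs, output, name=name):
                if isinstance(output, torch.Tensor):
                    # A layer called twice overwrites its entry but keeps its first position
                    activations[name] = output.detach().clone()
            handles.append(module.register_forward_hook(hook))
    model.eval()  # leaves the model in eval mode: no dropout, running BatchNorm stats
    with torch.no_grad():
        model(x)
    for handle in handles:
        handle.remove()
    return activations


def first_mismatch(model_a: nn.Module, model_b: nn.Module, x: torch.Tensor, atol: float = 1e-6):
    """Return the first layer (in execution order) whose outputs differ, or None."""
    acts_a = capture_activations(model_a, x)
    acts_b = capture_activations(model_b, x)
    for name, out_a in acts_a.items():
        out_b = acts_b.get(name)
        if out_b is None or out_a.shape != out_b.shape:
            return name
        if not torch.allclose(out_a, out_b, atol=atol):
            return name
    return None
```

Running it on a single batch right after the pre-trained weights are loaded, before any training step, points at the first layer where the two scripts diverge.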

@InnovArul I used the following function (credit: Check if models have same weights) to compare the trained weights from the two scripts layer by layer. The weights and biases were completely different even though the inputs were the same.

```python
import torch


def compare_weights(path_save1, path_save2):
    # Load both saved state dicts onto the CPU so they can be compared directly
    weights1 = torch.load(path_save1, map_location=torch.device('cpu'))
    weights2 = torch.load(path_save2, map_location=torch.device('cpu'))
    models_differ = 0
    # zip() pairs entries by position, so both dicts must list their keys in the same order
    for (key1, value1), (key2, value2) in zip(weights1.items(), weights2.items()):
        if torch.equal(value1, value2):
            continue
        models_differ += 1
        if key1 == key2:
            print('Mismatch found at', key1)
        else:
            raise ValueError(f'Key order differs: {key1} vs {key2}')
    if models_differ == 0:
        print('Weights match perfectly!')
```

Are these models similar in terms of performance and loss?

Can you check whether both of them start from the same model weights?

In my understanding, modularization won't change the results, but you have to make sure the old file and the new file follow the same steps in the same order, so that the weight initialization doesn't differ.
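To illustrate why the order matters: layer constructors draw their initial weights from the global RNG, so building the same layers in a different order under the same seed gives each layer different weights. The layer names and sizes below are arbitrary; a module that draws random numbers at import time would shift the stream in the same way.

```python
import torch
import torch.nn as nn


def build(order):
    """Create one Conv2d per name, in the given order, right after seeding."""
    torch.manual_seed(0)
    layers = {}
    for name in order:
        # nn.Conv2d initializes its weight and bias from the global torch RNG
        layers[name] = nn.Conv2d(3, 8, kernel_size=3)
    return layers


same_a = build(["enc", "dec"])
same_b = build(["enc", "dec"])
swapped = build(["dec", "enc"])

print(torch.equal(same_a["enc"].weight, same_b["enc"].weight))   # True: same order
print(torch.equal(same_a["enc"].weight, swapped["enc"].weight))  # False: "enc" got the second draw
print(torch.equal(same_a["dec"].weight, swapped["enc"].weight))  # True: both were built second
```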

Can you print and check the values instead of using torch.equal?

For comparing float tensors, you may need to use torch.allclose(), which tolerates the tiny floating-point differences that make torch.equal() return False.
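A sketch that combines both suggestions: it matches entries by key rather than by position, uses `torch.allclose()` for float tensors, and reports how large each difference actually is. `diff_report` is a hypothetical helper; the tolerances are the `torch.allclose()` defaults.

```python
import torch


def diff_report(state1: dict, state2: dict, rtol: float = 1e-5, atol: float = 1e-8) -> dict:
    """Compare two state dicts by key and describe every entry that differs."""
    report = {}
    for key in sorted(set(state1) | set(state2)):
        if key not in state1 or key not in state2:
            report[key] = "missing in one state dict"
            continue
        t1, t2 = state1[key], state2[key]
        if t1.shape != t2.shape:
            report[key] = f"shape {tuple(t1.shape)} vs {tuple(t2.shape)}"
        elif t1.is_floating_point():
            # allclose tolerates tiny rounding differences that make torch.equal fail
            if not torch.allclose(t1, t2, rtol=rtol, atol=atol):
                max_diff = (t1 - t2).abs().max().item()
                report[key] = f"max abs diff {max_diff:.3e}"
        elif not torch.equal(t1, t2):
            # Integer buffers such as BatchNorm's num_batches_tracked
            report[key] = "values differ"
    return report


# Usage with the files from compare_weights:
# report = diff_report(torch.load(path_save1, map_location="cpu"),
#                      torch.load(path_save2, map_location="cpu"))
```

An empty report means the two state dicts agree within tolerance.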

Answers to your comments:

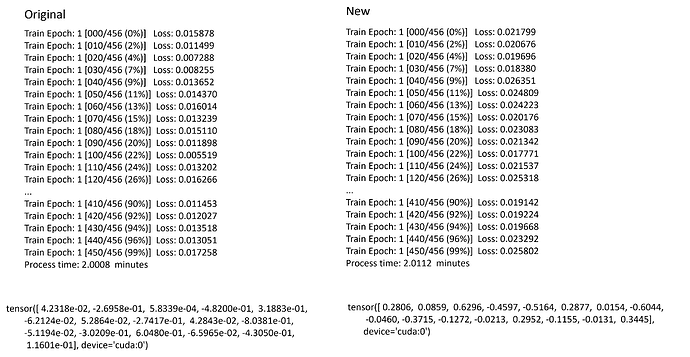

- Their training losses differ. See below.
- I'm sure the two scripts start from the same initial weights, since I explicitly load the pre-trained weights into the models.
- I printed the values of one of the trained weight tensors. See below.

A few things I found:

- Until training starts, the two scripts use the same random stream. In the code before the training phase, I added the following:

  ```python
  print('--Test--')
  print(torch.bmm(torch.randn(2, 2, 2).to_sparse().cuda(), torch.randn(2, 2, 2).cuda()))
  ```

  The results from the two scripts are the same, so up to this point the random number streams must be consistent.

- The batch data chosen for each iteration is also the same in both scripts. At every iteration, I generate a set of random indices to pick the batch data from the training dataset. I printed out the indices and confirmed that the same indices are used in both scripts.

- However, in the training phase, the inconsistency starts from the very first iteration. The image below compares the training loss and trained weights of the two scripts:

The new script gives a larger training loss for some reason…
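The `torch.bmm` test in the first point works, but it consumes random numbers itself. A sketch of a check that fingerprints the RNG states without drawing from them, so it can be printed at the same points in both scripts (for example before every iteration) and the outputs diffed. `rng_fingerprint` is a hypothetical helper, not a PyTorch API.

```python
import hashlib
import random

import numpy as np
import torch


def rng_fingerprint() -> str:
    """Hash the torch, NumPy and `random` RNG states without advancing any of them."""
    h = hashlib.sha256()
    h.update(torch.get_rng_state().numpy().tobytes())  # CPU generator state (ByteTensor)
    np_state = np.random.get_state()  # ('MT19937', keys, pos, has_gauss, cached_gaussian)
    h.update(np_state[1].tobytes())
    h.update(str(np_state[2:]).encode())
    h.update(repr(random.getstate()).encode())
    if torch.cuda.is_available():
        # One state ByteTensor per visible GPU
        for state in torch.cuda.get_rng_state_all():
            h.update(state.numpy().tobytes())
    return h.hexdigest()[:16]
```

If the fingerprints match before an iteration but the losses still differ, the cause is deterministic (for example the weights themselves) rather than the random streams.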

Sorry, you were right! After checking the model again, I realized that one of the model weights was not loaded correctly. After correcting this, the problem was solved!
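Since the root cause was a weight that was not loaded correctly, a cheap safeguard is to keep `load_state_dict` at its default `strict=True` (so missing or unexpected keys raise an error) and to re-check the model against the checkpoint just before training starts, in case a submodule is re-created or re-initialized after loading. A minimal sketch; `assert_matches_checkpoint` is a hypothetical helper, and the toy `nn.Linear` stands in for Class1:

```python
import torch
import torch.nn as nn


def assert_matches_checkpoint(module: nn.Module, checkpoint: dict) -> None:
    """Raise if any tensor in `checkpoint` differs from the module's current state."""
    current = module.state_dict()
    missing = [k for k in checkpoint if k not in current]
    if missing:
        raise KeyError(f"keys not in module: {missing}")
    for key, value in checkpoint.items():
        if not torch.equal(current[key].cpu(), value.cpu()):
            raise ValueError(f"{key} differs from the checkpoint")


# Usage sketch with a toy layer standing in for a pre-trained network
pretrained = nn.Linear(4, 2).state_dict()
model = nn.Linear(4, 2)
model.load_state_dict(pretrained)  # strict=True by default: raises on missing/unexpected keys
assert_matches_checkpoint(model, pretrained)  # call again right before the training loop
```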