Hello,

Can you please help with how to get vocabulary x vocabulary weight matrix from multi headed attention?

I have timeseries data and I am using attention to predict the next element/token in the sequence.

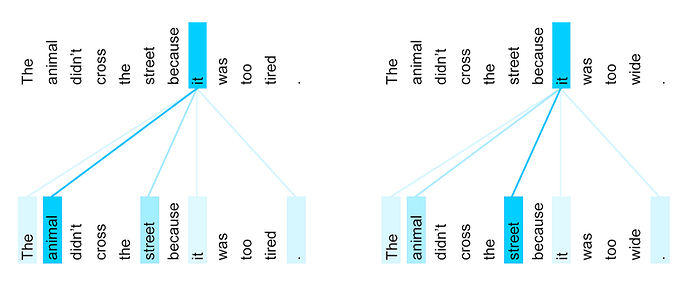

E.g. input sequence: T1 - T2 - T3 - T4 - T3 - T5, where each Ti is a token. I have n such tokens in the vocabulary. Each token is represented with 56 dimensions. The attention layer takes such a sequence as an input and returns attention weights and attention outputs (as shown here).

How do I get the nxn i.e. vocabulary_size x vocabulary_size (or embedding x embedding) matrix as an output? Each entry in the matrix indicates the importance of the particular vocabulary element compared to other vocabulary elements?

Specifically, the model is as follows,

model summary BuildModel(

(input_multihead_attn): MultiheadAttention(

(out_proj): Linear(in_features=56, out_features=56, bias=True)

)

(fully_connected): Linear(in_features=56, out_features=28, bias=True)

)

Multiheaded attention layer returns batch_size x sequence_length x sequence_length (length of S) weight matrix.

The sequence_length x sequence_length matrix is generated from a batch of sequences. Hence, first position in the sequence may correspond to multiple tokens (e.g. T1 for sequence 1 and T2 for sequence 2). I am wondering, how do I generate n x n matrix (n is the total number of tokens) from this attention weight matrix?

Thank You,