I want to give PyTorch layers names so that I can easily select them. Right now I select them by index, which means I have to know exactly where they are. Is it possible to give them names so that I don't have to keep checking their indices?

Updates on this? It would be nice to have the possibility to assign names to layers, instead of having a name automatically assigned. Isn’t it just a matter of modifying nn.Module base class to include a “name” parameter? Or are you worried about possible name conflicts in case the assigned name is not unique?

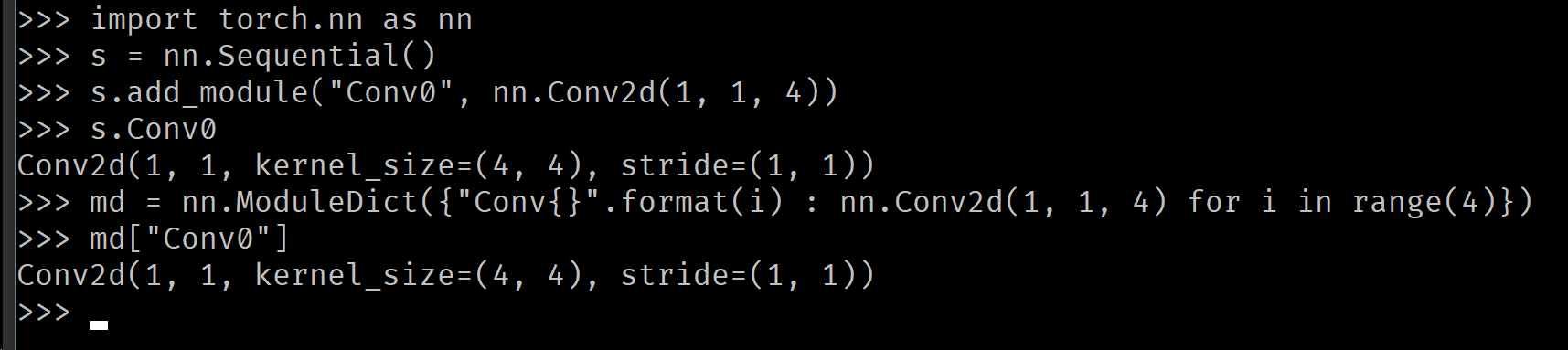

you can easily do:

self.my_name = nn.Linear(...)

what is the problem with this approach?

Good point - nothing is wrong with it. The only thing would be when using nn.ModuleList and you want to give a name to each module inside the list, but I agree it’s probably not worth it to implement a (possibly problematic) naming system just for this case.

Sorry, but what do you mean by self?

Can I give a layer a name like in other frameworks?

nn.Conv2d(name="test_layer")

self is the parent module.

class MyNet(nn.Module):
    def __init__(self):
        super(MyNet, self).__init__()
        self.conv1 = nn.Conv2d(...)

@smth The problem with that approach is that later (in some other routine) if you want to use the name, the ‘self.my_name’ is long gone.

If there were a name= keyword argument (like Keras supports), then it would make it easier to track, e.g., which Conv2d layer out of however many I’m using, to output weights for visualization.

Instead we get TypeError: __init__() got an unexpected keyword argument 'name'
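For what it's worth, the attribute name is not lost once __init__ returns: it is registered on the parent module and can be looked up later from any routine that holds the model. A minimal sketch, assuming a hypothetical MyNet with two conv layers (the class and channel sizes are made up for illustration):

```python
import torch
import torch.nn as nn


class MyNet(nn.Module):
    # Hypothetical two-layer network, used only for illustration.
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(8, 16, kernel_size=3, padding=1)

    def forward(self, x):
        return self.conv2(self.conv1(x))


net = MyNet()

# named_modules() yields (name, module) pairs; the root module has the name ''.
layers = dict(net.named_modules())
conv2 = layers["conv2"]

# The same names prefix the parameter names, e.g. 'conv2.weight'.
weight_names = [name for name, _ in net.named_parameters()]
```

This does not give you a name= keyword, but it may be enough to pick out one particular Conv2d for weight visualization.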

Yes, in PyTorch the name is a property of the container, not the contained layer, so if the same layer A is part of two other layers B and C, that same layer A could have two different names in layers B and C.

This is not very helpful, I think, and I would agree that allowing layers to have identifying names which are part of the layer would be very useful.
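To make the point about container-owned names concrete, here is a small sketch. The classes B and C and the attribute names first and head are hypothetical, chosen only to mirror the description above:

```python
import torch.nn as nn

shared = nn.Linear(4, 4)  # plays the role of layer "A"


class B(nn.Module):
    def __init__(self, layer):
        super().__init__()
        self.first = layer  # B calls the shared layer "first"


class C(nn.Module):
    def __init__(self, layer):
        super().__init__()
        self.head = layer  # C calls the same layer "head"


b, c = B(shared), C(shared)

# Look up under which name each container registered the shared layer.
names_in_b = [name for name, mod in b.named_modules() if mod is shared]
names_in_c = [name for name, mod in c.named_modules() if mod is shared]
```

Here names_in_b and names_in_c differ even though both refer to the very same Linear object.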

I use a function that gives me a dict of named Conv2d, ConvTranspose2d, BatchNorm2d and Linear layers.

I use it to visualize weights/gradients of my layers.

Hope this is useful:

import torch

def get_named_layers(net):
    # Per-type counters so that every layer gets a unique name.
    conv2d_idx = 0
    convT2d_idx = 0
    linear_idx = 0
    batchnorm2d_idx = 0
    named_layers = {}
    for mod in net.modules():
        if isinstance(mod, torch.nn.Conv2d):
            layer_name = 'Conv2d{}_{}-{}'.format(
                conv2d_idx, mod.in_channels, mod.out_channels
            )
            named_layers[layer_name] = mod
            conv2d_idx += 1
        elif isinstance(mod, torch.nn.ConvTranspose2d):
            # Use the transposed-conv counter here, not conv2d_idx.
            layer_name = 'ConvT2d{}_{}-{}'.format(
                convT2d_idx, mod.in_channels, mod.out_channels
            )
            named_layers[layer_name] = mod
            convT2d_idx += 1
        elif isinstance(mod, torch.nn.BatchNorm2d):
            layer_name = 'BatchNorm2D{}_{}'.format(
                batchnorm2d_idx, mod.num_features)
            named_layers[layer_name] = mod
            batchnorm2d_idx += 1
        elif isinstance(mod, torch.nn.Linear):
            layer_name = 'Linear{}_{}-{}'.format(
                linear_idx, mod.in_features, mod.out_features
            )
            named_layers[layer_name] = mod
            linear_idx += 1
    return named_layers

Couldn’t you do this?

Using nn.Sequential with an OrderedDict from collections is a good way to organize named Modules ahead of time and then construct the actual nn graph at run time.
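A minimal sketch of that idea, in case it helps. The layer names and sizes below are made up for illustration:

```python
from collections import OrderedDict

import torch
import torch.nn as nn

# The keys of the OrderedDict become the names of the submodules.
model = nn.Sequential(OrderedDict([
    ("conv1", nn.Conv2d(1, 8, kernel_size=3, padding=1)),
    ("relu1", nn.ReLU()),
    ("conv2", nn.Conv2d(8, 16, kernel_size=3, padding=1)),
]))

first_conv = model.conv1  # access by name
same_conv = model[0]      # access by index still works

out = model(torch.randn(1, 1, 28, 28))
```

Both lookups return the same module object, so you can stop tracking indices and use the names instead.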

Yes, the docs say that we can use add_module('layer1', module) on a Sequential, but what if we could pass the name when creating the layer, like Conv2d(name='layer1', in_channels=10), and then fetch the layer with model_layer1 = model['layer1']? That sounds very cool.
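String indexing of that kind is roughly what nn.ModuleDict offers, although the name is still given to the container rather than to the Conv2d constructor. A sketch, assuming a PyTorch version that ships nn.ModuleDict; the Net class, the key names and the channel sizes are hypothetical:

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        # Keys are the layer names; values are registered as submodules.
        self.layers = nn.ModuleDict({
            "layer1": nn.Conv2d(10, 20, kernel_size=3, padding=1),
            "layer2": nn.Conv2d(20, 20, kernel_size=3, padding=1),
        })

    def forward(self, x):
        x = self.layers["layer1"](x)
        return self.layers["layer2"](x)


net = Net()
model_layer1 = net.layers["layer1"]  # look up a layer by its string name
out = net(torch.randn(2, 10, 8, 8))
```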

In a custom model, you can call the layer using its attribute name:

class MyModel(nn.Module):
    def __init__(self):
        super(MyModel, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 3, 1, 1)

    def forward(self, x):
        x = self.conv1(x)
        return x

model = MyModel()
model.conv1(torch.randn(1, 3, 24, 24))

Currently, I have to define the layers in __init__ as

self.conv1 = nn.Conv2d(3, 24, kernel_size=1, bias=False)
self.bn1 = nn.BatchNorm2d(24)
self.conv2 = nn.Conv2d(mid_planes, out_planes, kernel_size=1, groups=groups, bias=False)

and the corresponding forward pass as

out = F.relu(self.bn1(self.conv1(x)))
out = self.conv2(out)
out = out + x

Currently, I have 2 convolutions. What happens if I want a flexible number of convolution layers in my network? Let's say 15 convolution layers.

Is there an easy way to do it, rather than naming them by hand as conv1, …, conv15?

As noted above, you can create an nn.Sequential from an OrderedDict with keys as module names (conv0, conv1, …, conv14) and nn.Conv layers as the values.
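For example, a rough sketch of generating the 15 named layers in a loop. The channel count of 4 and the 3x3 kernel are assumptions made only so that the layers can be chained:

```python
from collections import OrderedDict

import torch
import torch.nn as nn

num_layers = 15
channels = 4  # assumed: equal in/out channels so the layers can be stacked

# Build conv0 ... conv14 without naming each one by hand.
convs = nn.Sequential(OrderedDict(
    ("conv{}".format(i), nn.Conv2d(channels, channels, kernel_size=3, padding=1))
    for i in range(num_layers)
))

x = torch.randn(1, channels, 8, 8)
out = convs(x)

last_conv = convs.conv14  # each layer is still reachable by name
```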

However, if you want to make a more complicated forward pass with conditions on how to dispatch to the various convolutional layers, or make residual connections, etc. then your module must define that behavior.

You could mix the approaches where you construct the various layers from a collection, and then still refer to them by their name in the forward method:

class AGoodModule(nn.Module):
    def __init__(self):
        super(AGoodModule, self).__init__()
        self.main = nn.Sequential()
        layers = [
            ("conv{}".format(n), nn.Conv2d(4, 4, (3, 3), 1, 1)) for n in range(3)
        ]
        for name, layer in layers:
            self.main.add_module(name, layer)

    def forward(self, x):
        # The layers are registered on self.main, so look them up there.
        x0 = self.main.conv0(x)
        x1 = self.main.conv1(x0) + x0
        x2 = self.main.conv2(x1) + x1
        return x2

Yes, there is, but you need to keep track of each convolution's input and output channels. There are two options:

1: add the modules to a Sequential or ModuleList inside a for loop

2: have a look at the ResNet and Hourglass implementations, which are classic examples of how to define a network

Thanks so much for this!

To both use a Sequential object and access a layer with a string, you could also do something like this:

Method 1

class Model(nn.Module):
    def __init__(self, channels):
        super(Model, self).__init__()
        self.conv_block = nn.Sequential()
        for k in range(len(channels)-1):
            self.conv_block.add_module(
                'conv{}'.format(k),
                nn.Conv2d(channels[k], channels[k+1], kernel_size=3, padding=0)
            )

As it defines attributes, you could access the layer using the __getattr__ function:

channels = [1, 2, 4, 8]
model = Model(channels)
model.conv_block.__getattr__('conv1')

To get the list of all names, you could either extract them from dir(model.conv_block) (by selecting the ‘conv{}’, ‘linear{}’ etc.) or create an attribute self.layer_names.
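As an alternative to filtering dir(), named_children() returns exactly the registered submodule names, and the built-in getattr does the same lookup as calling __getattr__ directly. A small sketch that rebuilds only the conv block from Method 1 (the variable names are illustrative):

```python
import torch.nn as nn

channels = [1, 2, 4, 8]
conv_block = nn.Sequential()
for k in range(len(channels) - 1):
    conv_block.add_module(
        "conv{}".format(k),
        nn.Conv2d(channels[k], channels[k + 1], kernel_size=3, padding=0),
    )

# Names of the direct submodules, in registration order.
layer_names = [name for name, _ in conv_block.named_children()]

# Look up a layer from a string name.
conv1 = getattr(conv_block, "conv1")
```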

Edit: Actually this may be cleaner (I realize this is very similar to a previous answer using ModuleDict). We can easily extend it when you have several blocks:

Method 2

class Model(nn.Module):
    def __init__(self, channels):
        super(Model, self).__init__()
        self.conv_block = nn.Sequential()
        self.layer2id = dict()
        self._layer_idx = self._conv_idx = 0
        for k in range(len(channels)-1):
            self.layer2id['conv{}'.format(self._conv_idx)] = self._layer_idx
            self.conv_block.add_module(
                'conv{}'.format(self._conv_idx),
                nn.Conv2d(channels[k], channels[k+1], kernel_size=3, padding=0)
            )
            self._layer_idx += 1
            self._conv_idx += 1

    def get_layer(self, name):
        return self.conv_block[self.layer2id[name]]

channels = [1, 2, 4, 8]
model = Model(channels)
model.get_layer('conv1')

Use nn.Sequential().add_module or OrderedDict.

# OrderedDict
self.conv5 = nn.Sequential(OrderedDict({
    'conv': nn.Conv2d(out_channels[2], out_channels[3], 1),
    'bn': nn.BatchNorm2d(out_channels[3]),
    'relu': nn.ReLU(inplace=True),
}))

# add_module
self.conv5 = nn.Sequential()
self.conv5.add_module('conv', nn.Conv2d(out_channels[2], out_channels[3], 1))
self.conv5.add_module('bn', nn.BatchNorm2d(out_channels[3]))
self.conv5.add_module('relu', nn.ReLU(inplace=True))

Then you can use net.conv5.conv to track this conv.

A full answer to this is given on Stack Overflow.