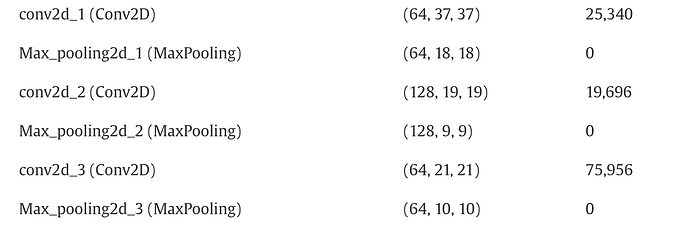

I’m trying to implement an Image classification model, but I’m having trouble understanding how to go from a lower output shape to a higher output shape. Below is a picture:

How does the author of this paper go from Maxpool2d_2 to conv2d_3? The only way I can imagine this happening is with nn.ConvTranspose2d(), but that isn’t mentioned in the paper. Is there an alternative way of doing this step?

The paper: https://www.sciencedirect.com/science/article/pii/S0960077920308870#sec0002
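For comparison, here is a minimal sketch of two common ways to upsample explicitly: the nn.ConvTranspose2d mentioned above, and a fixed nn.Upsample followed by an ordinary convolution. The shapes (128 → 64 channels, 9×9 → 21×21) are assumptions borrowed from the example in the answer, and kernel_size=5, stride=2 for the transposed convolution were chosen only because they happen to produce 21×21. Nothing here is taken from the paper.

```python
import torch
import torch.nn as nn

# Assumed shapes (from the answer's example): 128 -> 64 channels, 9x9 -> 21x21.
x = torch.randn(1, 128, 9, 9)

# Option 1: transposed convolution (learned upsampling).
# H_out = (H_in - 1) * stride - 2 * padding + dilation * (kernel_size - 1) + output_padding + 1
#       = (9 - 1) * 2 - 0 + 1 * (5 - 1) + 0 + 1 = 21
deconv = nn.ConvTranspose2d(128, 64, kernel_size=5, stride=2)
print(deconv(x).shape)  # torch.Size([1, 64, 21, 21])

# Option 2: fixed (non-learned) resize, then a convolution that preserves 21x21.
up_conv = nn.Sequential(
    nn.Upsample(size=(21, 21), mode="nearest"),
    nn.Conv2d(128, 64, kernel_size=3, stride=1, padding=1),  # 21 + 2*1 - 3 + 1 = 21
)
print(up_conv(x).shape)  # torch.Size([1, 64, 21, 21])
```

Either option makes the upsampling explicit, whereas the padding trick in the answer grows the map only by surrounding it with zeros.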

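It’s unclear, since the authors didn’t share enough details, but they might have used a small stride and large padding. With stride 1, the output size is input + 2 × padding − dilation × (kernel_size − 1), so enough padding makes the output larger than the input.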
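A small sketch of that arithmetic, using the Conv2d output-size formula from the PyTorch documentation. The helper names conv2d_out and padding_for are hypothetical, written just for this illustration; padding_for solves the stride-1 case for the padding needed to reach a target size.

```python
def conv2d_out(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output height/width of nn.Conv2d, per the formula in the PyTorch docs."""
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


def padding_for(size_in, size_out, kernel_size, dilation=1):
    """Symmetric padding a stride-1 Conv2d needs to map size_in -> size_out.

    With stride=1: size_out = size_in + 2 * padding - dilation * (kernel_size - 1).
    Returns None if no non-negative integer padding works.
    """
    diff = size_out - size_in + dilation * (kernel_size - 1)
    if diff < 0 or diff % 2:
        return None
    return diff // 2


print(conv2d_out(9, kernel_size=3, padding=7))        # 21
print(padding_for(9, 21, kernel_size=3))              # 7
print(padding_for(9, 21, kernel_size=3, dilation=2))  # 8
```

Changing the dilation changes the padding required, which is one reason the exact configuration can’t be recovered from the shapes alone.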

Here is an example:

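```python
import torch
import torch.nn as nn

# in_channels=128, out_channels=64, kernel_size=3, stride=1, padding=7
conv = nn.Conv2d(128, 64, 3, 1, padding=7)

x = torch.randn(1, 128, 9, 9)
out = conv(x)

# (9 + 2*7 - 3) / 1 + 1 = 21
print(out.shape)
# torch.Size([1, 64, 21, 21])

# 128*64*3*3 weights + 64 biases = 73792
print(torch.tensor([p.nelement() for p in conv.parameters()]).sum())
# tensor(73792)
```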

As you can see, this is just a guess: I didn’t consider other dilation values, which might also differ. The number of parameters doesn’t match the paper either, so take this code with a grain of salt.
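One way to narrow the guess down: a Conv2d’s parameter count is out_channels × in_channels × kernel_size² + out_channels (with bias), and it does not depend on padding or dilation. The sketch below compares a few hypothetical configurations that all map 9×9 to 21×21; the candidate names and the 128/64 channel counts are assumptions carried over from the example above. If the count reported in the paper differs, padding and dilation alone can’t explain it, so the kernel size, channel counts, bias, or layer type must differ.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 128, 9, 9)

# Hypothetical stride-1 configurations; each produces a 21x21 output from 9x9.
candidates = {
    "k3_p7_d1": nn.Conv2d(128, 64, kernel_size=3, padding=7),              # 9 + 14 - 2 = 21
    "k3_p8_d2": nn.Conv2d(128, 64, kernel_size=3, padding=8, dilation=2),  # 9 + 16 - 4 = 21
    "k5_p8_d1": nn.Conv2d(128, 64, kernel_size=5, padding=8),              # 9 + 16 - 4 = 21
}

for name, conv in candidates.items():
    n_params = sum(p.numel() for p in conv.parameters())
    print(name, tuple(conv(x).shape), n_params)

# k3_p7_d1 (1, 64, 21, 21) 73792
# k3_p8_d2 (1, 64, 21, 21) 73792
# k5_p8_d1 (1, 64, 21, 21) 204864
```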