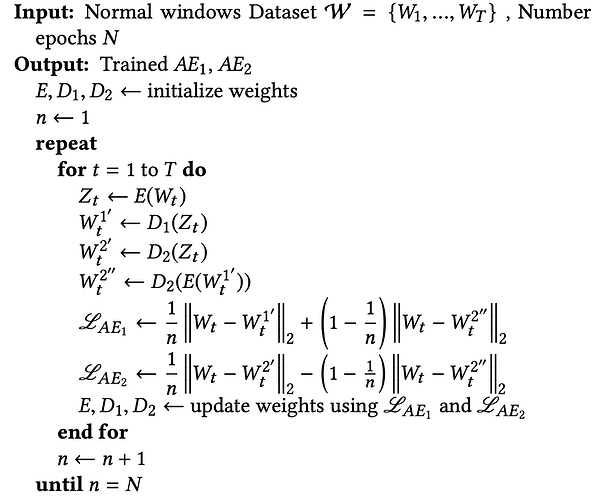

Acoording to the above firgure,how to implement to back propagation? The model include two AutoEncoders shared one encoder.How to optim AE1 and AE2 according $L_{AE1}$ and $L_{AE2}$.

I get a solution,but I feel that it was wong.

Z = self.encoder(input)

W1_prime = self.decoder1(Z)

W2_prime = self.decoder2(Z)

W2_double_prime = self.decoder2(self.encoder(W1_prime))

error1 = self.loss1(input, W1_prime, W2_double_prime, i+1)

error2 = self.loss2(input, W2_prime, W2_double_prime, i+1)

error1.backward(retain_graph=True)

error2.backward()

self.optimizer1.step() # self.optimizer1 = optim.Adam([{'params': self.encoder.parameters()}, {'params': self.decoder1.parameters()}])

self.optimizer2.step() # self.optimizer2 = optim.Adam([{'params': self.encoder.parameters()}, {'params': self.decoder2.parameters()}])

self.optimizer1.zero_grad()

self.optimizer2.zero_grad()

It seem like that Using $L_{AE1}$ and $L_{AE2}$ to optimise the first autoencoder, the two loss functions are similarly used to optimise the second autoencoder, rather than being independently optimised separately