I just learned about word embeddings, and I understand that word vectors can be learned with the CBOW or skip-gram procedure. I have two questions about word embeddings in PyTorch.

The first one: how to understand nn.Embedding in PyTorch

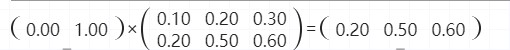

I don't think I understand Embedding in PyTorch well. Does nn.Embedding do the same thing as nn.Linear? It seems to me that nn.Embedding is just like a shallow fully connected network.

If not, how are the weights of nn.Embedding fine-tuned during training?
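A minimal sketch, using only standard PyTorch calls, of how the two layers relate: nn.Embedding is a lookup table, and looking up index i returns row i of its weight matrix. That is numerically the same as multiplying a one-hot vector by the matrix, which is what a bias-free nn.Linear computes on one-hot inputs (with its weight stored transposed). The lookup simply skips building the one-hot vector. During backpropagation only the looked-up rows receive non-zero gradients, and the optimizer updates them like any other parameter. The sizes below are arbitrary illustration values.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
vocab_size, embedding_dim = 5, 3  # illustration values

emb = nn.Embedding(vocab_size, embedding_dim)
# Bias-free Linear layer mapping one-hot vectors (size vocab_size) to embedding_dim
lin = nn.Linear(vocab_size, embedding_dim, bias=False)
# nn.Linear stores weight as (out_features, in_features), so copy the transpose
with torch.no_grad():
    lin.weight.copy_(emb.weight.t())

idx = torch.tensor([1, 3])
one_hot = F.one_hot(idx, num_classes=vocab_size).float()

lookup = emb(idx)       # rows 1 and 3 of emb.weight, shape (2, embedding_dim)
matmul = lin(one_hot)   # one_hot @ lin.weight.T == one_hot @ emb.weight
print(torch.allclose(lookup, matmul))  # True

# Gradients reach only the rows that were looked up
lookup.sum().backward()
print(emb.weight.grad)  # rows 1 and 3 are all ones, the other rows are zeros
```

So the forward computation matches a fully connected layer on one-hot inputs; the practical difference is that nn.Embedding takes integer indices directly and avoids the large one-hot matrix multiply.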

The second one: how to implement skip-gram (or CBOW) in PyTorch

For the second question, I want to know how to implement skip-gram (or CBOW) in PyTorch. Do the following two networks correctly implement CBOW and skip-gram? (The weights of nn.Embedding are the word vectors.)

```python
import torch.nn as nn
import torch.nn.functional as F


class CBOWModeler(nn.Module):
    def __init__(self, vocab_size, embedding_dim, context_size):
        super(CBOWModeler, self).__init__()
        # Lookup table: row i is the vector for word index i
        self.embeddings = nn.Embedding(vocab_size, embedding_dim)
        self.linear1 = nn.Linear(context_size * embedding_dim, 128)
        self.linear2 = nn.Linear(128, vocab_size)

    def forward(self, x):
        # x: LongTensor of context_size word indices; concatenate their vectors
        embeds = self.embeddings(x).view(1, -1)
        output = self.linear1(embeds)
        output = F.relu(output)
        output = self.linear2(output)
        # Log-probabilities over the vocabulary for the center word: (1, vocab_size)
        log_probs = F.log_softmax(output, dim=1)
        return log_probs


class SkipgramModeler(nn.Module):
    def __init__(self, vocab_size, embedding_dim, context_size):
        super(SkipgramModeler, self).__init__()
        self.context_size = context_size
        self.embeddings = nn.Embedding(vocab_size, embedding_dim)
        self.linear1 = nn.Linear(embedding_dim, 128)
        self.linear2 = nn.Linear(128, context_size * vocab_size)

    def forward(self, x):
        # x: LongTensor holding a single center-word index
        embeds = self.embeddings(x).view(1, -1)
        output = self.linear1(embeds)
        output = F.relu(output)
        output = self.linear2(output)
        # Reshape to (context_size, vocab_size) before log_softmax so that each
        # context position gets its own distribution over the vocabulary
        log_probs = F.log_softmax(output.view(self.context_size, -1), dim=1)
        return log_probs
```
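A minimal training sketch for the CBOW network, assuming a toy corpus, a window of two words on each side, and plain SGD with NLLLoss (all choices made here for illustration, not prescribed by the question). It also shows how the embedding weights get tuned: the loss gradient flows back through linear2 and linear1 into the rows of `embeddings` that were looked up, so `optimizer.step()` updates the word vectors together with the other layers. The model class is a condensed copy of CBOWModeler so the block runs on its own.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Toy corpus (hypothetical); any tokenized text works the same way
text = "we are about to study the idea of a computational process".split()
vocab = sorted(set(text))
word_to_ix = {w: i for i, w in enumerate(vocab)}

WINDOW = 2                 # words on each side of the center word
CONTEXT_SIZE = 2 * WINDOW  # number of context words fed to CBOW

# CBOW training pairs: (context word indices, center word index)
data = []
for i in range(WINDOW, len(text) - WINDOW):
    context = text[i - WINDOW:i] + text[i + 1:i + WINDOW + 1]
    data.append((torch.tensor([word_to_ix[w] for w in context]),
                 torch.tensor([word_to_ix[text[i]]])))


class CBOWModeler(nn.Module):
    def __init__(self, vocab_size, embedding_dim, context_size):
        super().__init__()
        self.embeddings = nn.Embedding(vocab_size, embedding_dim)
        self.linear1 = nn.Linear(context_size * embedding_dim, 128)
        self.linear2 = nn.Linear(128, vocab_size)

    def forward(self, x):
        embeds = self.embeddings(x).view(1, -1)
        output = F.relu(self.linear1(embeds))
        return F.log_softmax(self.linear2(output), dim=1)


model = CBOWModeler(len(vocab), embedding_dim=10, context_size=CONTEXT_SIZE)
loss_fn = nn.NLLLoss()  # expects log-probabilities, matching log_softmax
optimizer = torch.optim.SGD(model.parameters(), lr=0.05)

initial_vectors = model.embeddings.weight.detach().clone()
losses = []
for epoch in range(50):
    total = 0.0
    for context, target in data:
        optimizer.zero_grad()
        loss = loss_fn(model(context), target)
        loss.backward()   # gradients flow back into model.embeddings.weight
        optimizer.step()  # the looked-up word vectors are updated here
        total += loss.item()
    losses.append(total)

# After training, row word_to_ix[w] of this matrix is the learned vector for w
word_vectors = model.embeddings.weight.detach()
print(losses[0], losses[-1])
```

The skip-gram network trains the same way with the roles swapped: build (center word, context words) pairs, feed the center index, and pass the (context_size, vocab_size) log-probabilities together with the context_size target indices to NLLLoss.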

So, if I want to use embeddings in an NLP task, should I first train a model like the ones above to obtain the embedding weights?
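One way to reuse such weights, sketched under assumptions: `pretrained` stands in for a trained matrix such as `model.embeddings.weight.detach()` (random here, for illustration), and `BagOfVectorsClassifier` is a hypothetical downstream model that averages word vectors and classifies them. `nn.Embedding.from_pretrained` builds an embedding layer from the matrix, and its `freeze` argument decides whether the vectors stay fixed or keep fine-tuning on the new task. Pretraining is not strictly required: an `nn.Embedding` can also start from random initialization and be learned end to end with the downstream task.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
vocab_size, embedding_dim, num_classes = 11, 10, 2  # illustration values

# Stand-in for a trained matrix, e.g. model.embeddings.weight.detach()
pretrained = torch.randn(vocab_size, embedding_dim)


class BagOfVectorsClassifier(nn.Module):
    """Hypothetical downstream model: average the word vectors, then classify."""

    def __init__(self, weights, num_classes, freeze=True):
        super().__init__()
        # freeze=True keeps the vectors fixed; freeze=False fine-tunes them
        self.embeddings = nn.Embedding.from_pretrained(weights, freeze=freeze)
        self.fc = nn.Linear(weights.size(1), num_classes)

    def forward(self, token_ids):
        # (batch, seq_len) -> (batch, seq_len, dim) -> mean -> (batch, dim)
        return self.fc(self.embeddings(token_ids).mean(dim=1))


clf = BagOfVectorsClassifier(pretrained, num_classes, freeze=True)
logits = clf(torch.tensor([[1, 4, 7], [0, 2, 3]]))
print(logits.shape)                         # torch.Size([2, 2])
print(clf.embeddings.weight.requires_grad)  # False
```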

Thanks for replying.

I can probably understand it now.
