In the past in order to ensure I get the specific version of torch and cude I need (rather than the latest release at the time of install), I’ve used the following:

--find-links https://download.pytorch.org/whl/torch_stable.html

torch==1.13.1+cu117

If I upgrade this for 2.0.0, i.e.

--find-links https://download.pytorch.org/whl/torch_stable.html

torch==2.0.0+cu117

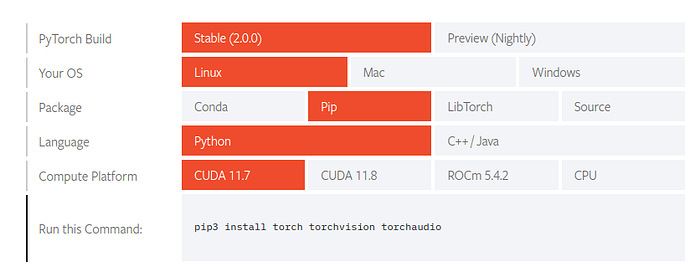

It works, but I noticed if follow the current installation instructions.

pip install torch

It’s not installing the same packages, specifically

pip install torch==2.0.0+cu117 -f https://download.pytorch.org/whl/torch_stable.html

Just installs https://download.pytorch.org/whl/cu117/torch-2.0.0 cu117-cp310-cp310-linux_x86_64.whl (and triton==2.0.0)

pip install torch==2.0.0

On the other hand installs torch-2.0.0-cp310-cp310-manylinux1_x86_64.whl and a bunch of extra packages, specifically

nvidia-nvtx-cu11

nvidia-nccl-cu11

nvidia-cusparse-cu11

nvidia-curand-cu11

nvidia-cufft-cu11

nvidia-cuda-runtime-cu11

nvidia-cuda-nvrtc-cu11

nvidia-cuda-cupti-cu11

nvidia-cublas-cu11

Does this mean I no longer have to install cuda using apt install cuda-11-7? Do these nvidia packages take care of that?

You never needed to install a local CUDA toolkit since the PyTorch binaries were shipping with their own CUDA runtime and other CUDA libs already.

The locally installed CUDA toolkit will be used if you build PyTorch from source or a custom CUDA extension.

The two workflows you’ve posted differ in their dependency resolution:

pip install torch uses the “default” pip wheel hosted on PyPI and will download the CUDA dependencies also from PyPI (seen as e.g. nvidia-cublas-cu11).pip install torch==2.0.0+cu117 -f https://download.pytorch.org/whl/torch_stable.html installs the “large” binary from the custom PyTorch download mirror (not from PyPI) and ships with all dependencies inside the wheel (which is also why their size is large).

1 Like

Interesting - thanks.

When you say never needed to, I wasn’t aware of that. But I’m fairly sure that even when you install the “large” binary, it uses the system cuda if it finds it? The reason I say this is by installing the C++ cuda runtime and adding to my .bashrc

export LIBTORCH=/usr/local/libtorch

export LD_LIBRARY_PATH=${LIBTORCH}/lib::$LD_LIBRARY_PATH

Allows me to run tch-rs (rust C++ bindings to libtorch) but doing so broke a python virtualenv containing torch==1.13.1+cu117 (since it seemed to be loading torch 2.0 libs from libtorch) and removing the exports fixed it again. Same thing happens if I use update alternatives to switch between system CUDA versions.

Poking around a venv I created, I see the nvidia libs are installed in

.venv/lib64/python3.10/site-packages/nvidia/*/lib/*

But I’m not sure they’re in my LD_LIBRARY_PATH (unless activating a venv modifies it) but I am sure system CUDA is.

Is there a “best practice” to ensure that the correct .so’s are loaded? I typically have a venv per project, at the moment I’ve managed to keep all projects using the same version of cuda (1.11.7) but it would be nice to isolate each one.

I’m not familiar with tch-rs and don’t know what kind of dependencies are used. However, to build a local libtorch application your local CUDA and C++ toolchains would be used, so it could be related.

The wheels should force the usage of the (legacy) RATH to point to the wheel dependencies, but in the past system libs could be preloaded since the RUNPATH was specified.

As a user you shouldn’t care about your system-wise installed libs as the binaries should use their own dependencies, unless you want to build PyTorch or any extension from source.

1 Like

Please let me know if you see any issues where the CUDA libs packaged in the binaries (coming from PyPI or directly shipped inside the wheel etc.) are not working as expected and you see some mismatches.

Also, let me know if my assumption about tch-rs is correct and it indeed needs to build libtorch applications in which case your locally installed CUDA toolkit should be used.

It seems to be working for torch 2.0.0. I’m unfamiliar with RPATH, but I removed the cuda exports from my .bashrc, and torch still worked fine. I might dig into it a bit further to see exactly what libraries are being loaded.

When I get time, I’ll try torch 1.13.1 and see if that works.

# for tch-rs

#export LIBTORCH=/usr/local/libtorch

#export LD_LIBRARY_PATH=${LIBTORCH}/lib::$LD_LIBRARY_PATH

# no longer required for torch

#export CUDA_HOME=/usr/local/cuda

#export PATH=${CUDA_HOME}/bin:${PATH}

#export LD_LIBRARY_PATH=${CUDA_HOME}/lib64:$LD_LIBRARY_PATH

# Protects agains accidental package install in system python

export PIP_REQUIRE_VIRTUALENV=true

Yes you’re correct about tch-rs - it requires libtorch.