@eqy Thanks for the advice. I ran some more tests with torch.cuda.set_per_process_memory_fraction.

I ran the segmentation model inference on two GPUs: one with 4G of memory and one with 8G. I set different fractions, as you suggested, to test.
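A test of this kind can be sketched roughly as below. This is only an illustration, not the exact script I ran: `run_with_fraction` and the `workload` argument are hypothetical names, and the segmentation model inference would be passed in as the workload.

```python
try:
    import torch
except ImportError:  # lets the sketch be imported on a machine without PyTorch
    torch = None


def run_with_fraction(fraction, device=0, workload=None):
    """Cap this process's GPU memory share, run a workload, report allocator stats in MB.

    Hypothetical helper for illustration. Returns None if PyTorch or CUDA is unavailable.
    """
    if torch is None or not torch.cuda.is_available():
        return None

    # Limit how much of the device's memory the caching allocator may use.
    torch.cuda.set_per_process_memory_fraction(fraction, device)
    torch.cuda.reset_peak_memory_stats(device)

    oom = False
    try:
        if workload is not None:
            workload()  # e.g. one forward pass of the model under test
    except RuntimeError as err:  # CUDA OOM errors are RuntimeError subclasses
        if "out of memory" not in str(err).lower():
            raise
        oom = True

    mb = 1024 ** 2
    return {
        "fraction": fraction,
        "oom": oom,
        "peak_allocated_mb": torch.cuda.max_memory_allocated(device) / mb,
        "peak_reserved_mb": torch.cuda.max_memory_reserved(device) / mb,
    }
```

Calling it once per fraction (for example 0.4, 0.5, 0.8) on each GPU gives numbers that can be compared against what nvidia-smi reports for the same process.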

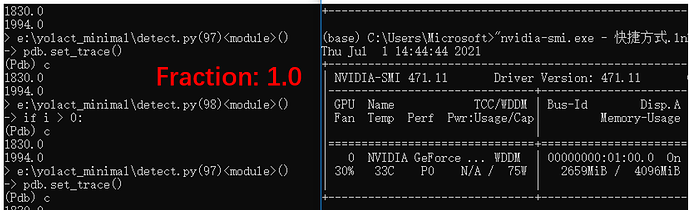

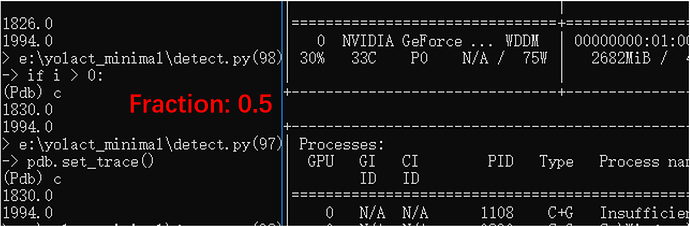

The 4G GPU:

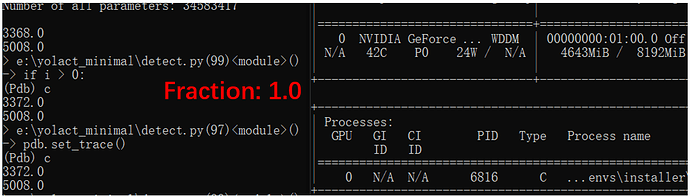

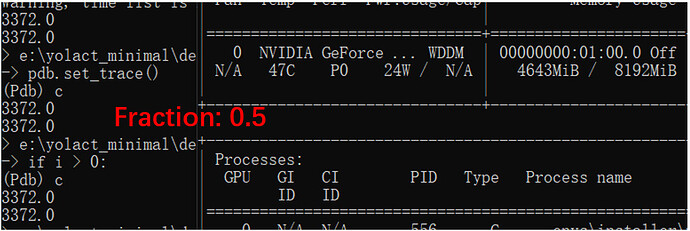

The 8G GPU:

Both GPUs hit “CUDA out of memory” when the fraction was <= 0.4.

This is still strange. With fraction=0.4 on the 8G GPU, the limit is 3.2G and the model cannot run. But with fractions between 0.5 and 0.8 on the 4G GPU, where the limit is no higher than 3.2G, the model still runs.

It also seems that torch.cuda.set_per_process_memory_fraction can only limit the memory reserved by PyTorch. The reserved memory is 3372 MB on the 8G GPU with fraction 0.5, but nvidia-smi still shows 4643 MB. Some memory was not returned to the OS.
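To make the numbers above easier to compare, here is a small arithmetic sketch. It assumes the nominal sizes (4G and 8G) and 1G = 1024 MB, so the real caps will differ a little from what the driver reports as total memory; `cap_mb` is a hypothetical helper name.

```python
def cap_mb(total_gb, fraction):
    """Memory cap implied by a fraction, in MB (assumes nominal size, 1G = 1024 MB)."""
    return total_gb * 1024 * fraction


# Caps for the fractions discussed above, on both GPUs.
for total_gb in (4, 8):
    for fraction in (0.4, 0.5, 0.8):
        print(f"{total_gb}G GPU, fraction {fraction}: cap ~ {cap_mb(total_gb, fraction):.0f} MB")

# Figures from the 8G run with fraction 0.5 (taken from the post above).
reserved_mb = 3372      # memory reserved by PyTorch
nvidia_smi_mb = 4643    # usage shown by nvidia-smi
gap_mb = nvidia_smi_mb - reserved_mb
print(f"nvidia-smi shows {gap_mb} MB more than PyTorch has reserved")
```

Under these assumptions, fraction 0.4 on the 8G GPU and fraction 0.8 on the 4G GPU give the same cap, which is why the different outcomes look inconsistent to me.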