Hello,

I am running a Jupyter Notebook with an LLM. When I evaluate the model with the dataloader, it throws a CUDA out-of-memory error.

I investigated and tried running the evaluation in batches, freeing memory during the process.

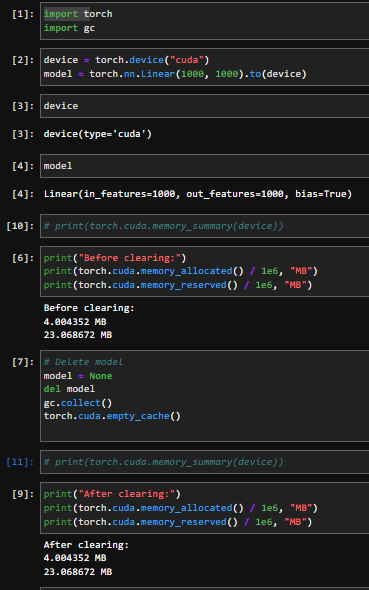

However, I noticed that the server does not release the CUDA memory even after calling

gc.collect() or torch.cuda.empty_cache()
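
A minimal sketch of the cleanup described above, assuming PyTorch with a CUDA device. The helper names `cuda_memory_mib` and `release_cuda_memory` are hypothetical and are not taken from the notebook. It only reports what PyTorch itself sees, so the numbers may differ from what `nvidia-smi` shows for the process.

```python
import gc

try:
    import torch
except ImportError:  # lets the helpers be defined even without PyTorch installed
    torch = None


def cuda_memory_mib():
    """Return allocated/reserved CUDA memory in MiB, or None if CUDA is unavailable."""
    if torch is None or not torch.cuda.is_available():
        return None
    mib = 1024 ** 2
    return {
        # memory currently occupied by live tensors
        "allocated": torch.cuda.memory_allocated() / mib,
        # memory held by PyTorch's caching allocator (live tensors + cached blocks)
        "reserved": torch.cuda.memory_reserved() / mib,
    }


def release_cuda_memory():
    """Run the garbage collector, then ask PyTorch to release unused cached blocks."""
    gc.collect()
    if torch is not None and torch.cuda.is_available():
        # empty_cache() only releases cached blocks that no live tensor occupies
        torch.cuda.empty_cache()
    return cuda_memory_mib()


print("before:", cuda_memory_mib())
print("after: ", release_cuda_memory())
```

Comparing the two printed dictionaries shows whether the memory is still "allocated" (some variable still references the tensors) or only "reserved" (cached by PyTorch and eligible for release).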

I made a toy example to illustrate this:

Also, when I re-run the notebook, it allocates more memory instead of reusing what is already allocated.

To make sure the CUDA memory is freed, I need to shut down the kernel, but I don't want to do that.

How can I solve this issue? Is there a problem with my CUDA installation or PyTorch?

Why is torch.cuda.empty_cache() not freeing the memory?