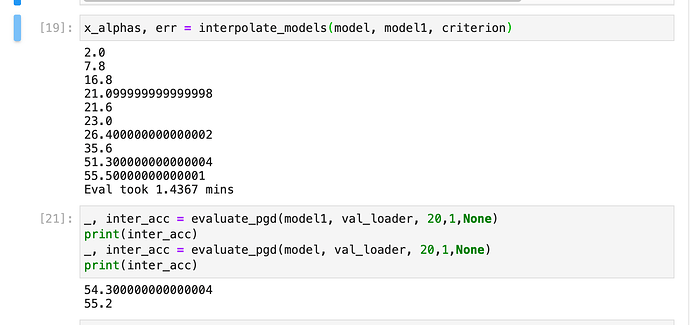

Hi, I’m trying to linearly interpolate between two models, but it doesn’t seem to be working. Here is my interpolation function; I’ve attached the output.

```python
import copy
import time

import numpy as np


def interpolate_models(model, model1):
    alphas = np.linspace(0, 1, 10)
    acc_array = np.zeros(alphas.shape)

    tic = time.perf_counter()

    for i, alpha in enumerate(alphas):
        new_model = copy.deepcopy(model)
        new_model_1 = copy.deepcopy(model1)
        # Look up model1's parameters by name (built once per alpha)
        params_1 = dict(new_model_1.named_parameters())

        # alpha = 1 keeps model's parameters; alpha = 0 takes model1's
        for n, p in new_model.named_parameters():
            p.data = alpha * p.data + (1 - alpha) * params_1[n].data

        # evaluate_pgd and val_loader are defined elsewhere (not shown)
        _, inter_acc = evaluate_pgd(new_model, val_loader, 20, 1, None)
        print(inter_acc)
        acc_array[i] = 100 - inter_acc

        del new_model
        del new_model_1

    toc = time.perf_counter()
    print(f"Eval took {(toc - tic)/60:0.4f} mins")

    return alphas, acc_array
```
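For each parameter tensor, the loop above computes the following, with α taken from `np.linspace(0, 1, 10)`:

$$\theta(\alpha) = \alpha\,\theta_{\text{model}} + (1-\alpha)\,\theta_{\text{model1}}, \qquad \alpha \in [0, 1]$$

So α = 1 recovers `model`'s parameters and α = 0 recovers `model1`'s parameters.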

Clearly, the accuracy at the `model` end (alpha = 1) is about what I would expect, but at the `model1` end (alpha = 0) it is not. Is my interpolation function totally wrong, or is PyTorch doing something weird to the weights under the hood?
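A possible cause worth ruling out (an assumption; the output alone does not confirm it): `named_parameters()` yields only learnable parameters, not buffers such as BatchNorm's `running_mean` and `running_var`. Because `new_model` is a deep copy of `model`, every interpolated network keeps `model`'s buffers, so at alpha = 0 it pairs `model1`'s weights with `model`'s normalization statistics. The sketch below blends the whole `state_dict()` instead. The helper names `interpolate_state_dicts` and `interpolated_model` are hypothetical, not PyTorch API.

```python
import copy

import torch
import torch.nn as nn


def interpolate_state_dicts(sd_a, sd_b, alpha):
    """Return alpha * sd_a + (1 - alpha) * sd_b, entry by entry.

    Unlike named_parameters(), a state_dict also holds buffers such as
    BatchNorm running_mean / running_var, so those are blended as well.
    Non-floating-point entries (e.g. BatchNorm's integer
    num_batches_tracked) are copied from sd_a unchanged.
    """
    alpha = float(alpha)  # accept NumPy scalars from np.linspace
    out = {}
    for name, t_a in sd_a.items():
        t_b = sd_b[name]
        if torch.is_floating_point(t_a):
            out[name] = alpha * t_a + (1 - alpha) * t_b
        else:
            out[name] = t_a.clone()
    return out


def interpolated_model(model, model1, alpha):
    """Copy of `model` whose parameters AND buffers are blended with `model1`."""
    new_model = copy.deepcopy(model)
    blended = interpolate_state_dicts(model.state_dict(), model1.state_dict(), alpha)
    new_model.load_state_dict(blended)
    return new_model
```

Under this assumption, the inner `named_parameters()` loop in `interpolate_models` could be replaced by `new_model = interpolated_model(model, model1, alpha)`. If the alpha = 0 accuracy then matches `model1` on its own, the buffers were the issue.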

Thanks in advance.