I’m doing research on model pruning.

Specifically, filter pruning (kernel pruning).

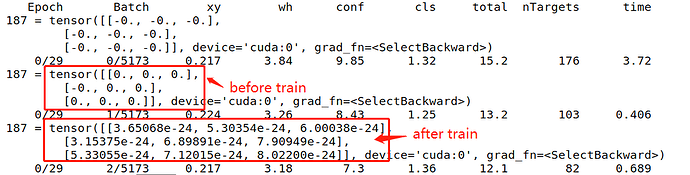

I set the weights of some filters to zero and then retrain the model. However, these weights become non-zero again during backpropagation.

How can I solve this problem, i.e. keep these filters at zero during retraining?
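
To make the question concrete, here is a minimal sketch of the behaviour I see and the behaviour I want. It is plain Python rather than my actual training code, and `sgd_step`, `mask`, and the gradient values are all made up for illustration. Masking the update is only one possible approach I am assuming here, not something I know to be the standard fix.

```python
# Toy illustration in plain Python; not tied to any deep learning framework.
# Each "filter" is a flat list of weights; filter 1 has been pruned (set to zero).

def sgd_step(filters, grads, lr, mask=None):
    """Return the filters after one plain SGD step.

    mask[i] is 1 if filter i stays trainable and 0 if it must stay at zero.
    With mask=None every filter is updated, which is the problem case.
    """
    updated = []
    for i, (weights, grad) in enumerate(zip(filters, grads)):
        keep = 1 if mask is None else mask[i]
        if keep:
            updated.append([w - lr * g for w, g in zip(weights, grad)])
        else:
            # Pruned filter: ignore the gradient and hold the weights at zero.
            updated.append([0.0 for _ in weights])
    return updated


filters = [[0.5, -0.2], [0.0, 0.0], [0.1, 0.3]]  # filter 1 is pruned
grads = [[0.1, 0.1], [0.4, -0.3], [0.2, 0.0]]    # hypothetical backprop gradients
mask = [1, 0, 1]                                 # 0 marks the pruned filter

unmasked = sgd_step(filters, grads, lr=0.1)             # filter 1 drifts away from zero
masked = sgd_step(filters, grads, lr=0.1, mask=mask)    # filter 1 stays at zero
```

In the unmasked case the pruned filter picks up non-zero values after a single step, which is what I observe. In the masked case it stays at zero while the other filters are updated as usual, which is what I would like to achieve in a real training loop.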

Thanks for your help~~