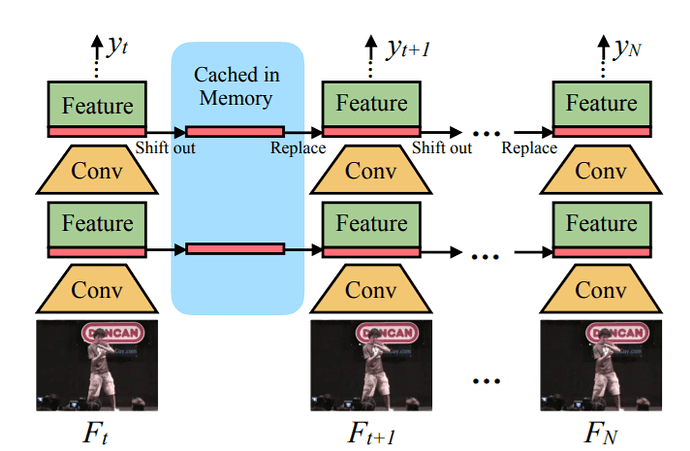

I am trying to implement the online algorithm from this paper on video classification. After each convolution operation, the method moves 1/8 of the channel feature maps of each frame into the next frame. The operation is shown in the attached image.

While implementing this, I managed to extract the first 1/8 of the channel feature maps, but I don’t know how to add them to the succeeding frame. My code is below:

import torch
import torch.nn as nn
import torch.nn.functional as F

N = 1    # Batch size
T = 5    # Time steps, i.e. the video has 5 frames
C = 3    # RGB channels
H = 144  # Height
W = 144  # Width

# Frames are stacked along the batch dimension
foo = torch.randn(N * T, C, H, W)
print("Shape of foo = ", foo.shape)
# torch.Size([5, 3, 144, 144])

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 8, 5)
        self.pool = nn.MaxPool2d(2, 2)
        # in_channels matches the 8 output channels of conv1
        self.conv2 = nn.Conv2d(8, 16, 5)
        # The layers below are not used in forward() yet
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        print("Shape of x = ", x.shape)
        # torch.Size([5, 8, 140, 140])

        # Take the first 1/8 of the channels (1 of 8) from every frame
        shape_extract = x[:, :1, :, :]
        print("Shape of extract = ", shape_extract.shape)
        # torch.Size([5, 1, 140, 140])

        # 1/8 of the channels have been extracted above.
        # But how do I transfer these channel features to the next frame?
        return x

net = Net()
output = net(foo)
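
One possible way to do the shift described above, as a minimal sketch rather than the paper's reference implementation: reshape the `(N*T, C, H, W)` activations to `(N, T, C, H, W)`, then write the first `C // 8` channels of frame `t-1` into frame `t`. It assumes the frames of each clip are stored consecutively along the batch dimension and that the first frame, which has no predecessor, receives zeros. The names `shift_from_previous_frame`, `OnlineShift`, `n_frames`, and `fold_div` are made up for this illustration.

```python
import torch


def shift_from_previous_frame(x, n_frames, fold_div=8):
    """Shift the first C // fold_div channels one step forward in time.

    x has shape (N * n_frames, C, H, W), with the frames of each clip
    stored consecutively (an assumption of this sketch).
    """
    nt, c, h, w = x.shape
    n = nt // n_frames
    fold = c // fold_div

    x = x.reshape(n, n_frames, c, h, w)
    out = torch.zeros_like(x)
    # Frame t receives the shifted channels of frame t-1;
    # frame 0 has no predecessor and keeps zeros there.
    out[:, 1:, :fold] = x[:, :-1, :fold]
    # All remaining channels stay in their own frame.
    out[:, :, fold:] = x[:, :, fold:]
    return out.reshape(nt, c, h, w)


class OnlineShift:
    """Frame-by-frame variant: caches the shifted channels of the last frame."""

    def __init__(self, fold_div=8):
        self.fold_div = fold_div
        self.cache = None  # channels saved from the previous frame

    def __call__(self, x):
        # x has shape (N, C, H, W): a single time step.
        fold = x.shape[1] // self.fold_div
        current = x[:, :fold]
        previous = self.cache if self.cache is not None else torch.zeros_like(current)
        # Keep this frame's channels for the next call (detached for inference).
        self.cache = current.detach().clone()
        return torch.cat([previous, x[:, fold:]], dim=1)
```

In the `forward` above, the batched version could be called right after the convolution, for example `x = shift_from_previous_frame(F.relu(self.conv1(x)), n_frames=T)`. The `OnlineShift` variant is meant for streaming inference where frames arrive one at a time; whether the shift belongs before or after the convolution should be checked against the paper.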