mhwu

September 28, 2017, 3:52am

1

In the Inception model, in addition to the final softmax classifier, there are a few auxiliary classifiers to overcome the vanishing gradient problem.

My question is

How can I compute the loss with all the classifiers? Can anyone give me an example?

output, aux_output = models.inception_v3(input)

loss = criterion(output, aux_output, target_var)

loss.backward()

optimizer.step()

or

output, aux_output = models.inception_v3(input)

loss1 = criterion(output, target_var)

loss2 = criterion(aux_output, target_var)

loss = loss1 + loss2

loss.backward()

optimizer.step()

2 Likes

smth

September 28, 2017, 3:56am

2

your second code snippet is the way to go.

2 Likes

smth

September 28, 2017, 3:57am

3

Usually the auxiliary loss is given a lower weight than the main loss, so you can do:

loss = loss1 + 0.4 * loss2 # 0.4 is the weight for the auxiliary classifier
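To make the weighted sum concrete, here is a minimal sketch of one full training step. `TinyNetWithAux` is a hypothetical toy model invented for illustration (it is not the torchvision Inception network), and the shapes, learning rate, and data are made up; only the `loss1 + 0.4 * loss2` combination comes from the posts above.

```python
import torch
import torch.nn as nn


class TinyNetWithAux(nn.Module):
    """Hypothetical stand-in for a network with one auxiliary classifier."""

    def __init__(self, in_features=8, hidden=16, num_classes=3):
        super().__init__()
        self.stem = nn.Linear(in_features, hidden)
        self.aux_head = nn.Linear(hidden, num_classes)    # auxiliary classifier
        self.body = nn.Linear(hidden, hidden)
        self.final_head = nn.Linear(hidden, num_classes)  # final classifier

    def forward(self, x):
        h = torch.relu(self.stem(x))
        aux_output = self.aux_head(h)  # branches off an intermediate layer
        output = self.final_head(torch.relu(self.body(h)))
        return output, aux_output


torch.manual_seed(0)
model = TinyNetWithAux()
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

inputs = torch.randn(4, 8)           # made-up batch of 4 samples
target = torch.tensor([0, 2, 1, 0])  # made-up class labels

model.train()
optimizer.zero_grad()
output, aux_output = model(inputs)
loss1 = criterion(output, target)      # loss of the final classifier
loss2 = criterion(aux_output, target)  # loss of the auxiliary classifier
loss = loss1 + 0.4 * loss2             # auxiliary loss is down-weighted
loss.backward()                        # gradients flow through both heads
optimizer.step()
```

A single `backward()` call on the summed loss is enough; autograd propagates gradients through both heads into the shared layers.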

5 Likes

mhwu

September 28, 2017, 3:59am

4

thank you soooooo much!!!

micklexqg

September 28, 2017, 7:22am

5

Thanks for the loss. But how do I get the final prediction from the two outputs (output and aux_output)? I need to compute the accuracy.

2 Likes

mhwu

September 28, 2017, 7:42am

6

The loss of the auxiliary classifiers is only used to overcome the vanishing gradient problem; it has nothing to do with prediction. The prediction is made by the final classifier.

micklexqg

September 28, 2017, 7:52am

7

But how do I get the prediction made by the final classifier? Is it ‘output, aux_output = models.inception_v3(input)’, as you wrote above?

micklexqg

September 28, 2017, 8:28am

8

Any idea how to get the prediction?

mhwu

October 1, 2017, 3:02am

9

def forward(self, x):

...

return(output0, output1, output2)

output0, output1, output2 = model(inputs)

_, preds = torch.max(output2.data, 1)

loss0 = criterion(output0, labels)

loss1 = criterion(output1, labels)

loss2 = criterion(output2, labels)

loss = loss2 + 0.3 * loss0 + 0.3 * loss1

loss.backward()

optimizer.step()

output2 is the prediction of the final classifier, and the other two outputs are those of the auxiliary classifiers. The overall loss is the loss of the final classifier plus the two auxiliary losses, each weighted by 0.3.

As you can see, I made the predictions from output2.

“At inference time, these auxiliary networks are discarded,” as the paper says.
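As a small illustration of computing accuracy from the final classifier alone, the sketch below uses made-up logits and labels instead of a real model. `final_logits` stands in for `output2`, and `argmax(dim=1)` gives the same class indices as the second return value of `torch.max(..., 1)`.

```python
import torch

# Made-up logits from the final classifier: 3 samples, 2 classes.
final_logits = torch.tensor([[2.0, 0.1],
                             [0.3, 1.5],
                             [1.0, 0.2]])
labels = torch.tensor([0, 1, 1])

with torch.no_grad():
    # Predicted class = index of the largest logit in each row.
    preds = final_logits.argmax(dim=1)
    correct = (preds == labels).sum().item()
    accuracy = correct / labels.size(0)

print(preds.tolist(), accuracy)  # two of the three predictions match the labels
```

The auxiliary outputs do not appear anywhere in this computation; they only contribute to the training loss.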

1 Like

micklexqg

October 6, 2017, 12:03pm

10

helpful code and hint, thank you!

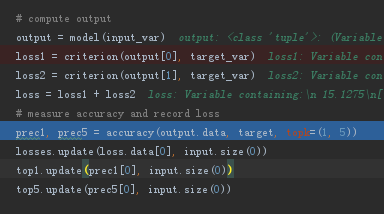

@micklexqg: I followed this and added it to the ImageNet example script to train GoogLeNet (from vision/models/googlenet.py) on my own data.

Imagenet script organization:

def main()

def main_worker()

def train()

outputs, aux_outputs = model(inputs)

loss1 = criterion(outputs, target)

loss2 = criterion(aux_outputs, target)

loss = loss1 + 0.4*loss2

def validate()

outputs = model(inputs)

loss = criterion(outputs, target)

def adjust_learning_rate()

def accuracy()

Then the execution throws this error:

Traceback (most recent call last):

File "imagenet.py", line 406, in <module>

main()

File "imagenet.py", line 113, in main

main_worker(args.gpu, ngpus_per_node, args)

File "imagenet.py", line 239, in main_worker

train(train_loader, model, criterion, optimizer, epoch, args)

File "imagenet.py", line 279, in train

output, aux_outputs = model(input)

ValueError: too many values to unpack (expected 2)

any idea?

ztleap

March 27, 2019, 5:10pm

12

If model() is GoogLeNet.forward(), you need to make sure GoogLeNet.aux_logits=True. Otherwise it returns just output rather than (output, aux_outputs), which gives you that error.
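The `ValueError` in the traceback is raised by Python's tuple unpacking whenever the right-hand side yields more items than there are names on the left. A minimal sketch with hypothetical stand-in functions (plain Python, not the torchvision model) reproduces the message:

```python
def forward_two():
    """Hypothetical stand-in for a forward pass returning two values."""
    return "output", "aux_output"


def forward_three():
    """Hypothetical stand-in for a forward pass returning three values."""
    return "aux1", "aux2", "output"


# Two names on the left, two values on the right: works.
output, aux_output = forward_two()

# Two names on the left, three values on the right: raises ValueError.
try:
    output, aux_outputs = forward_three()
    message = None
except ValueError as err:
    message = str(err)

print(message)

# Matching the number of names to the number of returned values fixes it.
aux1, aux2, output = forward_three()
```

Printing `type(result)` and `len(result)` for `result = model(inputs)` is a quick way to check how many values a particular model actually returns before unpacking them.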

1 Like

I figured out that GoogLeNet has two auxiliary outputs, and it worked with these modifications:

aux1, aux2, outputs = model(inputs)

loss1 = criterion(aux1, target)

loss2 = criterion(aux2, target)

loss3 = criterion(outputs, target)

loss = loss3 + 0.3 * (loss1+loss2)
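The same combination can be wrapped in a small helper that accepts any number of auxiliary outputs. This is only a sketch: `loss_with_aux` is a hypothetical name, the random logits stand in for real model outputs, and the order in which a given model returns its heads may differ between library versions, so it is worth checking before unpacking.

```python
import torch
import torch.nn as nn


def loss_with_aux(outputs, aux_outputs, target, criterion, aux_weight=0.3):
    """Final-classifier loss plus aux_weight times the sum of auxiliary losses."""
    main_loss = criterion(outputs, target)
    aux_loss = sum(criterion(aux, target) for aux in aux_outputs)
    return main_loss + aux_weight * aux_loss


torch.manual_seed(0)
criterion = nn.CrossEntropyLoss()
target = torch.tensor([1, 0, 2, 1])  # made-up labels

# Random logits standing in for the three heads of the model.
aux1 = torch.randn(4, 3, requires_grad=True)
aux2 = torch.randn(4, 3, requires_grad=True)
outputs = torch.randn(4, 3, requires_grad=True)

loss = loss_with_aux(outputs, [aux1, aux2], target, criterion)
loss.backward()
```

With `aux_weight=0.3` and two auxiliary heads this computes the same value as `loss3 + 0.3 * (loss1 + loss2)` above.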