I am trying to do sequence classification by first passing data to RNN and then to Linear, normally I would just reshape the output from [batch_size, sequence_size, hidden_size] to [batch_size, sequence_size*hidden_size] to pass it to Linear, but in this case I have sequence of varying lengths, so the output of RNN might be for example [batch_size, 32, hidden_size] or [batch_size, 29, hidden_size], so I don’t know with what shape to initialize the Linear layer (in place of question marks in the code below). Is it at all possible?

class RNN(nn.Module):

def __init__(self, input_size, hidden_size, num_classes=4):

super().__init__()

self.rnn = nn.RNN(input_size, hidden_size, batch_first=True)

self.fc = nn.Linear(hidden_size*????, num_classes)

def forward(self, x):

#x=[batch_size, sequence_length]

out, h_n = self.rnn(x) #out=[batch_size, sequence_length, hidden_size]

out = torch.reshape(out, (BATCH_SIZE, -1)) #out=[batch_size, sequence_length*hidden_size]

out = self.fc(out) #out=[batch_size, num_classes]

return x

```

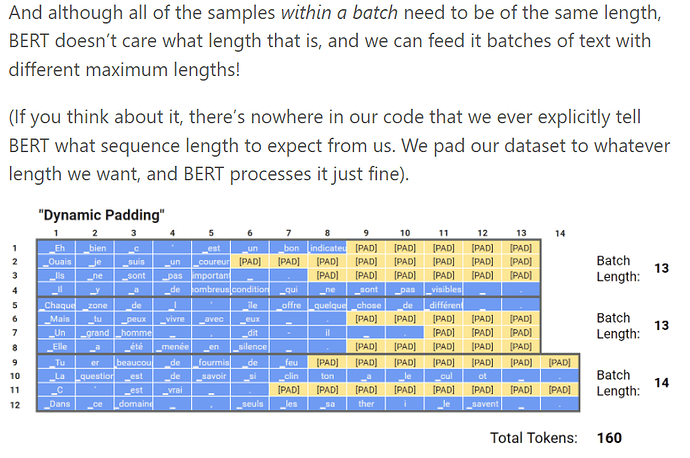

Currently each batch is padded to the longest sequence in the batch, is it better to just pad all the sequences to the same length to get rid of this problem? Is changing shape of input to Linear causing some bad side effects?