Hi All,

I am a beginner with PyTorch. I'm trying to print the memory cost (or the variable shape/size) of each layer: not only model._parameters and model._buffers, but also the intermediate variables used during the backward pass (loss.backward()). How can I do it?
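For the intermediate tensors that autograd keeps around for the backward pass, one option (not mentioned in this thread, and assuming a PyTorch version recent enough to ship `torch.autograd.graph.saved_tensors_hooks`, i.e. 1.10+) is to intercept every tensor saved for backward and add up its size. `saved_tensor_bytes` is a hypothetical helper, not a PyTorch API:

```python
import torch
from torch.autograd.graph import saved_tensors_hooks


def saved_tensor_bytes(fn, *args):
    """Hypothetical helper: run fn(*args) and total the bytes of every tensor
    that autograd saves for the backward pass while fn runs."""
    total = 0

    def pack(t):
        nonlocal total
        # element_size() is bytes per element, nelement() is the element count
        total += t.element_size() * t.nelement()
        print(f"saved for backward: shape={tuple(t.shape)} dtype={t.dtype}")
        return t  # store the tensor unchanged

    def unpack(t):
        return t  # hand the stored tensor back to autograd unchanged

    with saved_tensors_hooks(pack, unpack):
        out = fn(*args)
    return out, total


x = torch.randn(3, 4, requires_grad=True)
out, nbytes = saved_tensor_bytes(torch.exp, x)
print(nbytes)
out.sum().backward()  # backward still works; the saved tensors were not altered
```

Note that this counts a tensor once per time it is saved (e.g. `x * x` saves `x` twice), and it also counts saved parameters that already exist in memory, so treat the total as an upper bound on the extra memory held for backward.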

Thanks

^bump^

I would also like to know the answer.

Specifically, how do I get the memory footprint of a Tensor? sys.getsizeof() only gives the size of the Tensor's metadata. I would like to know how much memory it actually occupies.
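A minimal sketch for the data itself: multiply the bytes per element by the number of elements. Because a view shares its underlying buffer with the tensor it came from, the second helper reports the size of that whole buffer instead; it assumes PyTorch 2.x, where `Tensor.untyped_storage()` is available. Both helper names are hypothetical:

```python
import torch


def tensor_nbytes(t: torch.Tensor) -> int:
    """Bytes covered by this tensor's own elements."""
    return t.element_size() * t.nelement()


def storage_nbytes(t: torch.Tensor) -> int:
    """Bytes of the whole underlying buffer (shared by all views of it)."""
    return t.untyped_storage().nbytes()


x = torch.zeros(1000, 1000)  # float32, 4 bytes per element
view = x[:10]                # a view: no new memory is allocated

print(tensor_nbytes(x))      # 4000000
print(tensor_nbytes(view))   # 40000
print(storage_nbytes(view))  # 4000000, the view keeps the full buffer alive
```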

Thanks

Any updates on this? Did you find a way to do that, @anand.saha?

Nope, @EKami. Had moved on to other things …

okay np

If you use master instead of 0.3.1, there is torch.cuda.memory_allocated(). It returns the CUDA memory currently occupied by tensors (in bytes), so you can instrument your code with it. I found it very helpful.
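One way to do that instrumentation per layer is a forward hook on every leaf module that records the output's shape and size plus the current torch.cuda.memory_allocated() value. This is a sketch assuming a recent PyTorch and modules that return a single tensor; `trace_layer_memory` is a hypothetical helper, and on a machine without CUDA it simply stores `None` for the CUDA column:

```python
import torch
import torch.nn as nn


def trace_layer_memory(model: nn.Module, x: torch.Tensor):
    """Hypothetical helper: run one forward pass and, for every leaf module,
    record (name, output shape, output bytes, CUDA bytes allocated afterwards)."""
    records = []
    handles = []
    for name, module in model.named_modules():
        if next(module.children(), None) is not None:
            continue  # skip containers such as nn.Sequential; hook leaf layers only

        def hook(mod, inputs, output, name=name):
            cuda_bytes = torch.cuda.memory_allocated() if torch.cuda.is_available() else None
            records.append((name, tuple(output.shape),
                            output.element_size() * output.nelement(), cuda_bytes))

        handles.append(module.register_forward_hook(hook))
    try:
        model(x)  # autograd stays on, so activations kept for backward are included
    finally:
        for h in handles:
            h.remove()  # leave the model unmodified
    return records


model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 4))
for row in trace_layer_memory(model, torch.randn(2, 8)):
    print(row)
```

To get meaningful CUDA numbers, move both the model and the input to the GPU first; the difference between consecutive CUDA values then approximates what each layer added.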

That’s perfect thanks a lot!

Hello,

Does anyone know if there is an equivalent for CPU execution?

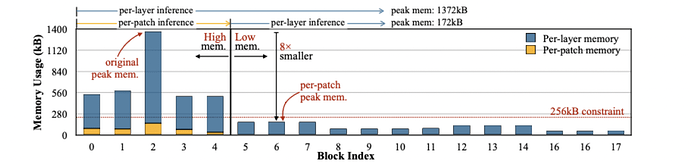

I came across this picture in this great paper and would like to replicate it.

It could be done by simply printing sys.getsizeof() after each layer, right?

Have you guys looked at the profiler?

https://pytorch.org/tutorials/recipes/recipes/profiler_recipe.html
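Following that recipe, a minimal sketch that also covers CPU execution: enable `profile_memory=True` and sort the per-operator table by memory. The toy model and the `"forward_backward"` label are illustrative assumptions; add `ProfilerActivity.CUDA` to `activities` when profiling on a GPU.

```python
import torch
import torch.nn as nn
from torch.profiler import ProfilerActivity, profile, record_function

model = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 4))
x = torch.randn(2, 8)

with profile(activities=[ProfilerActivity.CPU],
             profile_memory=True,   # track tensor allocations/frees per operator
             record_shapes=True) as prof:
    with record_function("forward_backward"):  # custom label shown in the table
        loss = model(x).sum()
        loss.backward()

# Operators that allocated the most CPU memory themselves come first
table = prof.key_averages().table(sort_by="self_cpu_memory_usage", row_limit=10)
print(table)
```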