Hi,

I know PyTorch layers expect an additional dimension for the batch.

I was wondering how to extend this to a generic nn.Module (with no assumptions about what is inside it), since applying a for loop over the batch duplicates the network (generating a siamese one).

Thanks in advance

I don’t quite understand your use case.

You usually don’t see the batch dimension explicitly, since the computations inside the module handle it for you.

So usually you won’t find any for loops over the batch dim. Could you post an example of what you are trying to achieve?
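To illustrate the point about not looping over the batch dim, here is a minimal sketch. `TinyBlock` and its layer sizes are hypothetical names chosen for this example, not something from the thread. It assumes the custom module is composed only of standard layers, in which case the batch dimension simply passes through.

```python
import torch
import torch.nn as nn


class TinyBlock(nn.Module):
    """Hypothetical custom module built only from standard layers."""

    def __init__(self, in_features=8, hidden=16, out_features=4):
        super().__init__()
        self.fc1 = nn.Linear(in_features, hidden)
        self.fc2 = nn.Linear(hidden, out_features)

    def forward(self, x):
        # x has shape (batch, in_features); there is no loop over the batch.
        return self.fc2(torch.relu(self.fc1(x)))


block = TinyBlock()
batch = torch.randn(5, 8)        # 5 samples, 8 features each
out = block(batch)               # processed in a single call
out_shape = tuple(out.shape)     # one output row per sample
```

If the forward pass only uses operations that treat dim 0 as the batch, the whole module behaves like a built-in layer with respect to batching.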

Hi there,

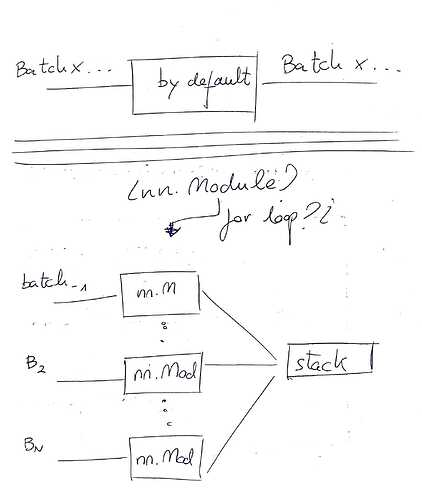

The point is that only the built-in layers are ready to take a batch as input. When you process data with one of those layers, the graph is a single-input/single-output graph and the only thing that changes is the dimensionality (image 2).

However, with a custom nn.Module you cannot handle the problem like that.

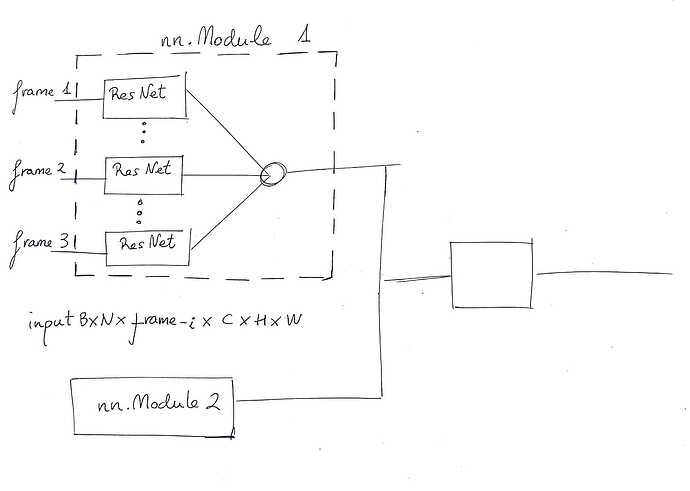

In my case I have to process videos (audio + a sequence of frames).

Suppose I want to create an nn.Module that builds a siamese network to process a video (as a sequence of frames) of dimension batch x frame_i x channels x H x W. How can I perform that operation in the simplest way?

The problem is that iterating over the batch dimension inside/outside the nn.Module would replicate the branch N times, whereas built-in layers do not replicate the branch but process the whole batch at once.

So with a for loop the effect is case 2, whereas built-in layers generate the graph of case 1.
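A small check can make the difference between the two cases concrete. This is only a sketch with a hypothetical toy network (`net`). It compares a single batched call against a for loop over the samples. The loop does record one branch per call in the autograd graph, but every call reuses the same module and therefore the same parameters.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical toy network standing in for an arbitrary nn.Module.
net = nn.Sequential(nn.Linear(8, 16), nn.ReLU(), nn.Linear(16, 4))
batch = torch.randn(5, 8)

n_params_before = sum(p.numel() for p in net.parameters())

# Case 1: one call on the whole batch.
batched = net(batch)

# Case 2: one call per sample, results stacked back into a batch.
looped = torch.stack([net(sample) for sample in batch])

n_params_after = sum(p.numel() for p in net.parameters())

# Same weights are used in both cases, so the outputs should agree
# up to floating-point tolerance.
same_output = torch.allclose(batched, looped, atol=1e-5)
```

Under these assumptions the parameter count is unchanged by the loop, so the loop costs extra calls and graph nodes rather than extra weights.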

Being able to use nn.Modules like built-in layers seems natural to me (in order to scale the problem to more inputs and more nn.Modules), but here it looks like I have to use batches of frames as input, and when it comes to building bigger modules that can be a nightmare.
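One common way to handle the batch x frames x channels x H x W case is to fold the frame dimension into the batch dimension, run the per-frame module once, and unfold the result. The sketch below assumes that approach. `FrameEncoder` and `PerFrame` are hypothetical names introduced for illustration, and the sizes are arbitrary.

```python
import torch
import torch.nn as nn


class FrameEncoder(nn.Module):
    """Hypothetical per-frame branch; expects input of shape (N, C, H, W)."""

    def __init__(self, channels=3, feat=8):
        super().__init__()
        self.conv = nn.Conv2d(channels, feat, kernel_size=3, padding=1)
        self.pool = nn.AdaptiveAvgPool2d(1)

    def forward(self, x):
        # (N, C, H, W) -> (N, feat)
        return self.pool(torch.relu(self.conv(x))).flatten(1)


class PerFrame(nn.Module):
    """Hypothetical wrapper: applies `module` to every frame with shared weights."""

    def __init__(self, module):
        super().__init__()
        self.module = module

    def forward(self, video):
        # video: (B, T, C, H, W)
        b, t = video.shape[:2]
        frames = video.reshape(b * t, *video.shape[2:])   # (B*T, C, H, W)
        feats = self.module(frames)                       # (B*T, ...)
        return feats.reshape(b, t, *feats.shape[1:])      # (B, T, ...)


video = torch.randn(2, 5, 3, 16, 16)   # 2 videos, 5 frames each
model = PerFrame(FrameEncoder())
features = model(video)
feature_shape = tuple(features.shape)
```

Because the wrapper is itself an nn.Module, it can be nested inside larger modules without the caller having to manage batches of frames by hand. It does assume the wrapped module treats each item along dim 0 independently.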