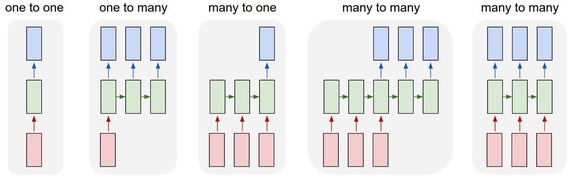

How can I implement the last "many to many" architecture with an LSTM in PyTorch?

Could you give me an example? Thanks!

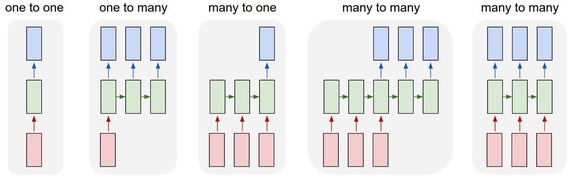

How to realize the last “many to many” with LSTM by pytorch?

Can you give me a example, thanks!

Any kind of continued sequence prediction counts as many-to-many. Common examples are language models (generating a sequence of characters or words based on the previous sequence) and time series prediction (predicting, e.g., future stock prices or seismic data based on previous history).
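For the language-model case, here is a minimal sketch. It assumes that the last "many to many" in the diagram is the synchronized variant, with one output per input time step. `CharLSTM` and the toy text are hypothetical. The key points are that `nn.LSTM` with `batch_first=True` returns the hidden state for every time step, and that `nn.Linear` applied to that `(batch, seq_len, hidden)` tensor yields one prediction per step.

```python
import torch
import torch.nn as nn

class CharLSTM(nn.Module):
    """Hypothetical many-to-many LSTM: one next-character prediction per input step."""
    def __init__(self, vocab_size: int, embed_dim: int = 16, hidden_size: int = 32):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_dim)
        self.lstm = nn.LSTM(embed_dim, hidden_size, batch_first=True)
        self.head = nn.Linear(hidden_size, vocab_size)

    def forward(self, x, state=None):
        # x: (batch, seq_len) of token ids
        out, state = self.lstm(self.embed(x), state)  # out: (batch, seq_len, hidden_size)
        return self.head(out), state                  # logits: (batch, seq_len, vocab_size)

# Toy corpus (an assumption for illustration only)
text = "hello world, hello pytorch"
chars = sorted(set(text))
stoi = {c: i for i, c in enumerate(chars)}
ids = torch.tensor([stoi[c] for c in text]).unsqueeze(0)  # (1, len(text))

# Target at each step is the next character: inputs and targets are shifted by one
inputs, targets = ids[:, :-1], ids[:, 1:]

torch.manual_seed(0)
model = CharLSTM(len(chars))
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
loss_fn = nn.CrossEntropyLoss()

for step in range(200):
    optimizer.zero_grad()
    logits, _ = model(inputs)
    # CrossEntropyLoss expects (N, C) logits, so flatten batch and time together
    loss = loss_fn(logits.reshape(-1, len(chars)), targets.reshape(-1))
    loss.backward()
    optimizer.step()
```

Because the loss is computed over every time step rather than only the last one, each position in the sequence contributes a gradient. That is what makes this many-to-many rather than many-to-one.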

Thank you. A few days ago I found that the network's gradients were not changing during training, but now I know how to fix it. Thanks!

Could you provide an example of how to solve the problem you described?
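For the time-series case, here is a minimal sketch of next-step prediction on toy sine waves. The data, `SeqRegressor`, and all hyperparameters are hypothetical choices for illustration. At every time step `t` the model sees `x[0..t]` and is trained to predict `x[t+1]`, so the target sequence is the input shifted by one step.

```python
import math
import torch
import torch.nn as nn

class SeqRegressor(nn.Module):
    """Hypothetical many-to-many LSTM regressor: one output per input step."""
    def __init__(self, input_size=1, hidden_size=32, output_size=1):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
        self.head = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        out, _ = self.lstm(x)  # (batch, seq_len, hidden_size)
        return self.head(out)  # Linear acts on the last dim -> (batch, seq_len, output_size)

# Toy data: sine waves with random phase (an assumption, not real measurements)
torch.manual_seed(0)
batch, seq_len = 16, 50
phase = torch.rand(batch, 1) * 2 * math.pi
t = torch.arange(seq_len + 1, dtype=torch.float32) * 0.2
series = torch.sin(t + phase).unsqueeze(-1)  # (batch, seq_len + 1, 1)
x, y = series[:, :-1], series[:, 1:]         # predict the next value at every step

model = SeqRegressor()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
loss_fn = nn.MSELoss()

for epoch in range(300):
    optimizer.zero_grad()  # clear gradients accumulated by the previous step
    pred = model(x)
    loss = loss_fn(pred, y)
    loss.backward()
    optimizer.step()
```

For real data such as stock prices or seismic traces, you would normally normalize the series and cut it into fixed-length windows instead of generating it.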