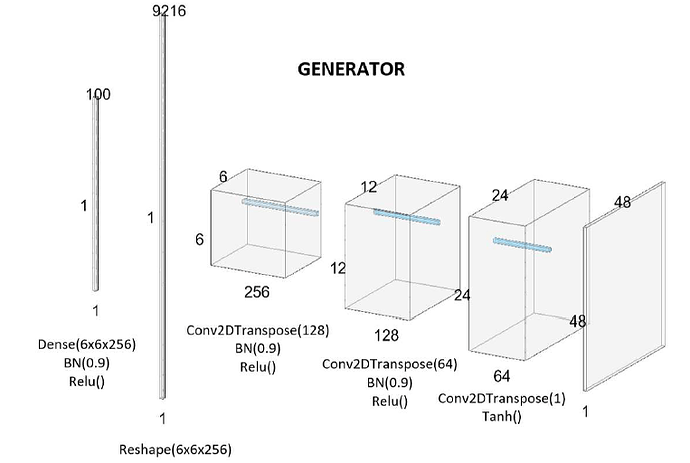

Hi, I’m implementing Generator of a GAN and I need to reshape output of Linear Layer to particular dimension, here is the image of the Generator.

Here is my generator code:

```python
import torch
import torch.nn as nn


class Generator(nn.Module):
    def __init__(self, z_dim=100, im_chan=1, hidden_dim=64, rdim=9216):
        super(Generator, self).__init__()
        self.z_dim = z_dim
        self.gen = nn.Sequential(
            nn.Linear(z_dim, rdim),
            nn.BatchNorm2d(rdim, momentum=0.9),
            nn.ReLU(inplace=True),
            torch.reshape(rdim, (6, 6, 256)),  # <---- this line raises the error
            self.make_gen_block(rdim, hidden_dim * 2),
            self.make_gen_block(hidden_dim * 2, hidden_dim),
            self.make_gen_block(hidden_dim, im_chan, final_layer=True),
        )

    def make_gen_block(self, input_channels, output_channels, kernel_size=1, stride=2, final_layer=False):
        if not final_layer:
            # Intermediate block: transposed conv -> batch norm -> ReLU
            return nn.Sequential(
                nn.ConvTranspose2d(input_channels, output_channels, kernel_size, stride),
                nn.BatchNorm2d(output_channels),
                nn.ReLU(inplace=True),
            )
        else:
            # Final block: transposed conv -> tanh (output in [-1, 1])
            return nn.Sequential(
                nn.ConvTranspose2d(input_channels, output_channels, kernel_size, stride),
                nn.Tanh(),
            )

    def unsqueeze_noise(self, noise):
        # (N, z_dim) -> (N, z_dim, 1, 1)
        return noise.view(len(noise), self.z_dim, 1, 1)

    def forward(self, noise):
        x = self.unsqueeze_noise(noise)
        return self.gen(x)
```

**The problem:** when I try to reshape the output of the linear layer after BatchNorm and ReLU (the `Dense` layer in the figure, since they used TensorFlow), it throws this error: `TypeError: reshape(): argument 'input' (position 1) must be Tensor, not int`
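A likely cause: `torch.reshape` is a plain function, not a layer, so writing it inside `nn.Sequential(...)` calls it immediately while the model is being built, with the integer `rdim` as its `input` argument. The sketch below reproduces that, and shows `nn.Unflatten` (a module in recent PyTorch versions) doing the reshape at forward time instead. It assumes the TensorFlow shape `(6, 6, 256)` is channels-last, so in PyTorch's channels-first layout it becomes `(256, 6, 6)`.

```python
import torch
import torch.nn as nn

rdim = 9216  # 6 * 6 * 256, as in the question

# torch.reshape runs eagerly and expects a Tensor as its first argument,
# so passing the int rdim fails before any data flows through the model.
try:
    torch.reshape(rdim, (6, 6, 256))
    error_message = None
except TypeError as err:
    error_message = str(err)

# nn.Unflatten is an nn.Module, so it can sit inside nn.Sequential and is
# applied to the tensor during forward. Assumed channels-first: (C, H, W).
unflatten = nn.Unflatten(1, (256, 6, 6))
x = torch.randn(4, rdim)  # batch of 4 flattened vectors
y = unflatten(x)

print(error_message)
print(y.shape)  # torch.Size([4, 256, 6, 6])
```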

`torch.reshape` looked wrong to me from the start, but `nn` doesn't seem to have a reshape layer, so I called `torch.reshape` directly. Can somebody help me get past this? Thanks.
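One possible way to wire the Dense → BatchNorm → ReLU → reshape stem in PyTorch is sketched below. Several choices are assumptions, since the figure's exact layer parameters aren't given in the text: the `(256, 6, 6)` channels-first shape, the hypothetical `init_chan`/`init_size` parameters, and `kernel_size=4, stride=2, padding=1` for the transposed convolutions (which double height and width each block). Other changes: `BatchNorm1d` is used because the linear output is 2-D `(N, rdim)`; the first conv block takes 256 input channels rather than `rdim`; and `momentum=0.1` is used on the assumption that `0.9` was copied from Keras, which defines momentum as the weight of the old running average (PyTorch uses the opposite convention).

```python
import torch
import torch.nn as nn


class Generator(nn.Module):
    """Sketch: Linear -> BN -> ReLU -> reshape to (256, 6, 6) -> upsampling blocks."""

    def __init__(self, z_dim=100, im_chan=1, hidden_dim=64, init_chan=256, init_size=6):
        super().__init__()
        self.z_dim = z_dim
        rdim = init_chan * init_size * init_size  # 9216 for 256 x 6 x 6
        self.gen = nn.Sequential(
            nn.Linear(z_dim, rdim),
            # BatchNorm1d, not BatchNorm2d: the Linear output is (N, rdim).
            # momentum=0.1 assumes the original 0.9 was a Keras-style value.
            nn.BatchNorm1d(rdim, momentum=0.1),
            nn.ReLU(inplace=True),
            # Module-based reshape applied during forward: (N, rdim) -> (N, C, H, W)
            nn.Unflatten(1, (init_chan, init_size, init_size)),
            # After the reshape there are init_chan (256) channels, not rdim
            self.make_gen_block(init_chan, hidden_dim * 2),
            self.make_gen_block(hidden_dim * 2, hidden_dim),
            self.make_gen_block(hidden_dim, im_chan, final_layer=True),
        )

    def make_gen_block(self, input_channels, output_channels,
                       kernel_size=4, stride=2, padding=1, final_layer=False):
        # Hypothetical kernel/stride/padding: with k=4, s=2, p=1 each block doubles H and W
        layers = [nn.ConvTranspose2d(input_channels, output_channels,
                                     kernel_size, stride, padding)]
        if final_layer:
            layers.append(nn.Tanh())
        else:
            layers += [nn.BatchNorm2d(output_channels), nn.ReLU(inplace=True)]
        return nn.Sequential(*layers)

    def forward(self, noise):
        # noise: (N, z_dim). nn.Linear acts on the last dimension, so the
        # (N, z_dim, 1, 1) unsqueeze is not needed before a Linear layer.
        return self.gen(noise)


gen = Generator()
fake = gen(torch.randn(8, 100))
print(fake.shape)  # torch.Size([8, 1, 48, 48])
```

With these assumed settings the spatial size goes 6 → 12 → 24 → 48; different kernel sizes or strides from the figure would change that, but the `nn.Unflatten` step stays the same.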