I’m building data loaders on top of torchvision.datasets.ImageFolder(), with torchvision.transforms steps that preprocess each image in my training and validation datasets.

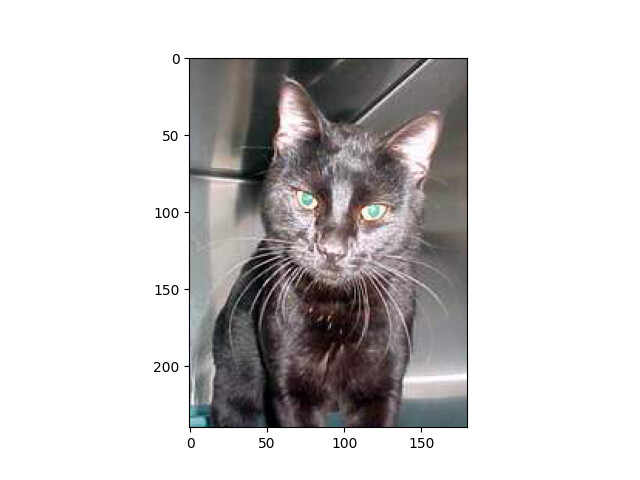

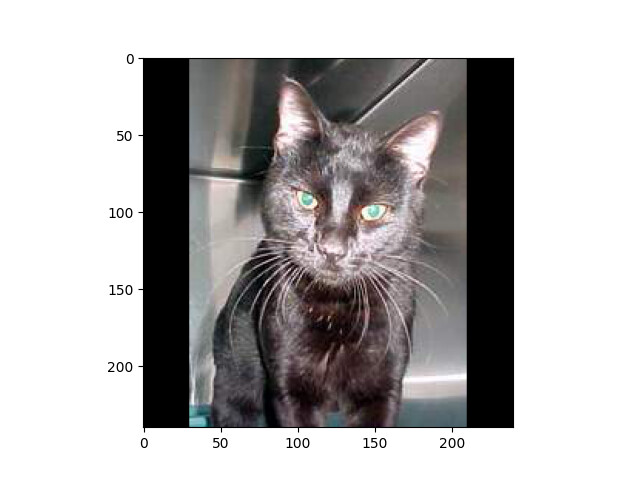

My main issue is that the images in my training/validation sets all have different sizes (e.g. 224x400, 150x300, 300x150, 224x224). Since the classification model I’m training is very sensitive to the shape of the object in the image, I can’t simply use torchvision.transforms.Resize((224, 224)), which would stretch the images; I need padding to preserve the proportions of the objects.
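To make the proportion problem concrete, here is a small pure-Python sketch of aspect-preserving scaling: the longer side is scaled to 224 and the shorter side is scaled by the same factor. It assumes the sizes above are width x height, and `fit_size` is a hypothetical helper name used only for illustration.

```python
def fit_size(width, height, target=224):
    """Return (new_width, new_height) with the longer side equal to `target`
    and the aspect ratio preserved (up to rounding)."""
    scale = target / max(width, height)
    return round(width * scale), round(height * scale)


for w, h in [(224, 400), (150, 300), (300, 150), (224, 224)]:
    print((w, h), "->", fit_size(w, h))
# (224, 400) -> (125, 224)
# (150, 300) -> (112, 224)
# (300, 150) -> (224, 112)
# (224, 224) -> (224, 224)
```

The gap left on the shorter side (e.g. 224 - 125 = 99 pixels of width for the first image) is what the padding would have to fill.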

Is there a simple way to add a padding step to a torchvision.transforms.Compose() pipeline so that every image ends up 224x224, with no cropping, only resizing and padding? Keep in mind that each image has a different original size.

```python
import os

import torch
from torchvision import datasets, transforms

data_transforms = {
    'train': transforms.Compose([
        transforms.Resize((224, 224)),  # forces 224x224, distorting the aspect ratio
        transforms.Grayscale(num_output_channels=3),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
    'val': transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.Grayscale(num_output_channels=3),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
    ]),
}

data_dir = "./my_data_dir/"

# ImageFolder expects one sub-folder per class inside data_dir/train and data_dir/val
image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x),
                                          data_transforms[x])
                  for x in ['train', 'val']}

dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=16,
                                              shuffle=True, num_workers=8)
               for x in ['train', 'val']}
```

From the torchvision.transforms.Pad() documentation, I understand that I need to know the size of the padding beforehand, and whether it goes on the left/right or top/bottom, before I can use this transform.
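For reference, when Pad() is given a 4-element sequence, the values are the padding for the left, top, right and bottom borders, in that order. The pure-Python sketch below shows how those four numbers depend on each image's size. It assumes the image has already been scaled to fit inside 224x224 and should be centred; `pad_amounts` is a hypothetical helper, not part of torchvision.

```python
def pad_amounts(width, height, target=224):
    """Return the (left, top, right, bottom) padding that centres a
    width x height image on a target x target canvas."""
    pad_w = target - width
    pad_h = target - height
    if pad_w < 0 or pad_h < 0:
        raise ValueError("image is larger than the target; resize it first")
    left = pad_w // 2
    top = pad_h // 2
    # Any odd leftover pixel goes to the right/bottom border
    return left, top, pad_w - left, pad_h - top


print(pad_amounts(125, 224))  # (49, 0, 50, 0)
print(pad_amounts(224, 112))  # (0, 56, 0, 56)
```

Because the result changes from image to image, a single Pad(...) with fixed arguments in the Compose() cannot cover the whole dataset.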

Is there a simple way to add a step to this transforms.Compose() that infers each image’s size, so I can compute the parameters I need for torchvision.transforms.Pad()?