Given a convolutional neural network:

```python
import torch
import torch.nn as nn


class ConvNet(nn.Module):
    def __init__(self, num_classes):
        super(ConvNet, self).__init__()  # initialise nn.Module before assigning attributes
        self.numClasses = num_classes

        # Each block is Conv -> BatchNorm -> ReLU. With stride 1 and
        # padding = kernel_size // 2, height and width are preserved.
        self.layer1 = nn.Sequential(
            nn.Conv2d(1, 12, kernel_size=5, stride=1, padding=2),   # 1 -> 12 channels
            nn.BatchNorm2d(12),
            nn.ReLU())
        self.layer2 = nn.Sequential(
            nn.Conv2d(12, 12, kernel_size=5, stride=1, padding=2),  # 12 -> 12 channels
            nn.BatchNorm2d(12),
            nn.ReLU())
        self.layer3 = nn.Sequential(
            nn.Conv2d(12, 12, kernel_size=3, stride=1, padding=1),  # 12 -> 12 channels
            nn.BatchNorm2d(12),
            nn.ReLU())
        self.layer4 = nn.Sequential(
            nn.Conv2d(12, 12, kernel_size=3, stride=1, padding=1),  # 12 -> 12 channels
            nn.BatchNorm2d(12),
            nn.ReLU())
        # 12 channels * H * W flattened features, so 896 * 12 implies H * W == 896
        self.fc = nn.Linear(896 * 12, num_classes)

    def forward(self, x):
        out = self.layer1(x)
        out = self.layer2(out)
        out = self.layer3(out)
        out = self.layer4(out)
        out = out.reshape(out.size(0), -1)  # flatten to (batch, 12 * H * W)
        out = self.fc(out)                  # raw, unbounded scores (no final activation)
        return out
```
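Because every convolution uses stride 1 and `padding = kernel_size // 2`, the spatial size never changes, so the flattened vector has 12 × H × W entries and `896 * 12` assumes H × W = 896. A quick shape check of the same layer stack, assuming a hypothetical 1 × 28 × 32 input (any H, W with H·W = 896 would do) and 4 classes to match the example output:

```python
import torch
import torch.nn as nn


def conv_block(c_in, c_out, k):
    # Conv -> BatchNorm -> ReLU; padding=k//2 with stride 1 preserves H and W
    return nn.Sequential(
        nn.Conv2d(c_in, c_out, kernel_size=k, stride=1, padding=k // 2),
        nn.BatchNorm2d(c_out),
        nn.ReLU(),
    )


features = nn.Sequential(
    conv_block(1, 12, 5),
    conv_block(12, 12, 5),
    conv_block(12, 12, 3),
    conv_block(12, 12, 3),
)

x = torch.randn(2, 1, 28, 32)  # hypothetical batch of two 28x32 single-channel inputs
feat = features(x)
print(feat.shape)  # torch.Size([2, 12, 28, 32])

flat = feat.reshape(feat.size(0), -1)
print(flat.shape)  # torch.Size([2, 10752]), since 12 * 28 * 32 == 896 * 12

fc = nn.Linear(896 * 12, 4)  # 4 classes, as in the example output
out = fc(flat)
print(out.shape)  # torch.Size([2, 4])
```

If the real input has a different H × W, the `nn.Linear` input size has to change to 12 × H × W accordingly.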

Is there a way to make the forward function output values between 0 and 1? I have tried the following:

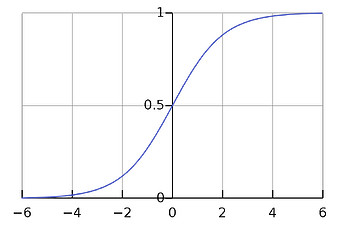

- Adding a sigmoid activation after the final layer (`nn.Sigmoid(out)`). This does not solve the problem, because the network is then unable to train.

- Training the network on target values transformed by the logit function, then applying a sigmoid to its outputs to get values in the required range. Again, the network is unable to train.
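One detail about the first attempt: `nn.Sigmoid` is a module class, so `nn.Sigmoid(out)` does not apply the sigmoid to `out`; the functional form is `torch.sigmoid(out)` (or `nn.Sigmoid()(out)`). A common pattern for independent per-class probabilities, swapped in here as an assumption rather than taken from the post, is to keep `forward()` returning raw logits, train with `nn.BCEWithLogitsLoss` (which applies the sigmoid inside the loss in a numerically stable way), and call `torch.sigmoid` only when probabilities are needed. A minimal sketch with a hypothetical linear stand-in model and made-up 0/1 targets:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

num_classes = 4
model = nn.Linear(10, num_classes)   # hypothetical stand-in for ConvNet
criterion = nn.BCEWithLogitsLoss()   # sigmoid + binary cross-entropy, per class
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

x = torch.randn(8, 10)                                   # made-up inputs
targets = torch.randint(0, 2, (8, num_classes)).float()  # made-up 0/1 label per class

losses = []
for _ in range(100):
    optimizer.zero_grad()
    logits = model(x)                  # raw scores; no sigmoid in the model itself
    loss = criterion(logits, targets)  # the loss consumes logits directly
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

with torch.no_grad():
    probs = torch.sigmoid(model(x))    # each entry mapped independently into [0, 1]
print(probs)
```

Keeping the sigmoid out of `forward()` during training avoids stacking a separate sigmoid and a log inside the loss, which is where saturation and vanishing gradients tend to show up.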

[additional information:

Training uses `PoissonNLLLoss`, and the network classifies objects accurately. However, it needs to output probabilities between 0 and 1 instead of values in the current range of roughly -60 to 3. The class probabilities in the output array are independent of each other, so a softmax layer will not work.

An example of the network's current output:

[2.4, -53.12, 0.53, -3.59]

What the output should look like:

[0.32, 0.00, 0.58, 0.92]

]
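For context on the output range: with its default `log_input=True`, `nn.PoissonNLLLoss` treats each network output as the log of a Poisson rate and computes `exp(input) - target * input` (averaged by default). An output of -53 therefore corresponds to a rate near zero, and `torch.exp(out)` gives non-negative rates that are not bounded by 1. The sketch below checks that formula on the example output, using hypothetical targets, and contrasts sigmoid (each entry squashed independently into (0, 1)) with softmax (entries forced to sum to 1). Applying a sigmoid after the fact only rescales the numbers; since the network was trained to produce Poisson log-rates, the result is not necessarily a calibrated probability.

```python
import torch
import torch.nn as nn

out = torch.tensor([[2.4, -53.12, 0.53, -3.59]])  # example output from the post
target = torch.tensor([[1.0, 0.0, 1.0, 0.0]])     # hypothetical targets

# PoissonNLLLoss defaults: log_input=True, full=False, reduction='mean'
loss = nn.PoissonNLLLoss()(out, target)
manual = (torch.exp(out) - target * out).mean()  # the same formula written out
print(loss.item(), manual.item())

# With log_input=True the outputs are log-rates; exp() turns them into rates
rates = torch.exp(out)
print(rates)  # the first entry is about 11.0, so not a probability

# Sigmoid maps each entry independently into (0, 1)
sig = torch.sigmoid(out)
print(sig)

# Softmax couples the entries: each row is normalised to sum to 1
soft = torch.softmax(out, dim=1)
print(soft.sum(dim=1))
```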

Thank you!