Hi,

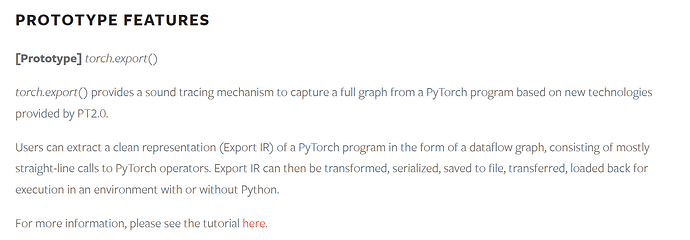

Despite the main points in the `torch.compile` pitch, our experience with JIT was acceptable: we faced some issues, but they were tolerable, and we adopted `torch.jit.save` and `torch.package` (as well as ONNX) as our model serialization / obfuscation / freezing methods.

It may be seen as a disadvantage, but sharing a single `.jit` or `.package` artefact may be preferable to sharing the whole model codebase and then running `torch.compile`.
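For context, here is a minimal sketch of the TorchScript half of that workflow (the `torch.package` route is not shown). `TinyModel`, `save_traced` and `load_traced` are hypothetical names used only for illustration. The point is that the consumer only needs the serialized bytes, not the model's Python source:

```python
import io

import torch
import torch.nn as nn


class TinyModel(nn.Module):
    """Hypothetical stand-in for a real model."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(4, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return torch.relu(self.linear(x))


def save_traced(model: nn.Module, example: torch.Tensor) -> bytes:
    """Trace the eager model and serialize it into a single TorchScript archive."""
    traced = torch.jit.trace(model.eval(), example)
    buffer = io.BytesIO()
    torch.jit.save(traced, buffer)
    return buffer.getvalue()


def load_traced(blob: bytes) -> torch.jit.ScriptModule:
    """Load the archive; the consumer does not need TinyModel's source code."""
    return torch.jit.load(io.BytesIO(blob))
```

Usage: `blob = save_traced(TinyModel(), torch.randn(3, 4))` produces the bytes we would ship, and `load_traced(blob)` gives back a callable module. Tracing is used here instead of `torch.jit.script` only to keep the sketch short; it records the ops for the example input, so it suits models without data-dependent control flow.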

So, I have a few general questions that, I believe, have not yet been clearly answered in the docs / blog / release materials:

- What is the preferred serialization format for `torch.compile`-ed models?
- How do the JIT compiler, the `.jit` format and torch packages play with compiled models?
- Should I first compile the model and then export it to `jit` or `package`, or vice versa? Or am I getting it wrong altogether?

Or is it still too early to ask these questions? Are there any ongoing discussions? I found this, but it does not seem very informative.

PS

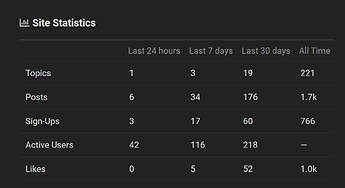

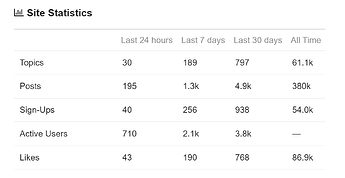

Also, forgive me for a dumb question, but why are there now two official PyTorch forums, this one and dev-discuss.pytorch.org, with the latter being ~100x smaller?