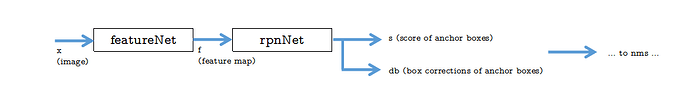

I am trying to implement Faster R-CNN from scratch in PyTorch. The diagram above and the code below show my setup.

How can I pass the parameters of both `featureNet` and `RpnNet` to my optimizer?

Is this correct?

    params = list(feature_net.parameters()) + list(rpn_net.parameters())
    optimizer = optim.SGD(params, lr=0.01, momentum=0.9, weight_decay=0.0005)
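For comparison, here is a minimal sketch of three ways to hand the parameters of two modules to one optimizer: concatenating the lists as above, chaining the iterators, and using per-module parameter groups. The two `nn.Conv2d` layers are hypothetical stand-ins for `feature_net` and `rpn_net`, and the separate learning rate in the last variant is only an illustrative assumption.

```python
import itertools

from torch import nn, optim

# Hypothetical stand-ins for the real feature_net and rpn_net modules.
feature_net = nn.Conv2d(3, 16, kernel_size=3, padding=1)
rpn_net = nn.Conv2d(16, 4, kernel_size=1)

# Variant 1: concatenate the two parameter lists (as in the question).
params = list(feature_net.parameters()) + list(rpn_net.parameters())
optimizer = optim.SGD(params, lr=0.01, momentum=0.9, weight_decay=0.0005)

# Variant 2: chain the two parameter iterators without building lists.
optimizer_chain = optim.SGD(
    itertools.chain(feature_net.parameters(), rpn_net.parameters()),
    lr=0.01, momentum=0.9, weight_decay=0.0005,
)

# Variant 3: one parameter group per module, so each can override options.
# The lower learning rate for rpn_net is an arbitrary example value.
optimizer_groups = optim.SGD(
    [
        {"params": feature_net.parameters()},
        {"params": rpn_net.parameters(), "lr": 0.001},
    ],
    lr=0.01, momentum=0.9, weight_decay=0.0005,
)

# Sanity check: the optimizer should track every parameter of both modules.
num_expected = len(list(feature_net.parameters())) + len(list(rpn_net.parameters()))
num_tracked = sum(len(group["params"]) for group in optimizer.param_groups)
num_tracked_chain = sum(len(group["params"]) for group in optimizer_chain.param_groups)
```

Comparing `num_tracked` with `num_expected` is a quick way to confirm that no module was left out of the optimizer.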

    import torch.optim as optim
    import torch.nn.functional as F

    def run_train():
        channel = 3
        height, width = 512, 512  # largest size
        num_features = 16

        feature_net = featureNet((channel, height, width), num_features)
        rpn_net = RpnNet(num_features, num_bases)  # note: num_bases must be defined before this call

        # learning hyperparameters
        num_iter = 100
        params = list(feature_net.parameters()) + list(rpn_net.parameters())
        optimizer = optim.SGD(params, lr=0.01, momentum=0.9, weight_decay=0.0005)

        # training --------------------------------------------
        feature_net.train()
        rpn_net.train()

        for it in range(num_iter):
            x, annotation = get_train_data()

            # forward
            f = feature_net(x)
            rpn_s, rpn_db = rpn_net(f)

            # backward
            rpn_label, rpn_bbox_target = rpn_target_layer(annotation)  # generate ground truth
            rpn_loss_score = F.cross_entropy(rpn_s, rpn_label)
            rpn_loss_dbox = F.smooth_l1_loss(rpn_db, rpn_bbox_target)
            loss = rpn_loss_score + rpn_loss_dbox  # sum of the two losses computed above

            # update
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            # print and show results
            # ...
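One way to check that a single optimizer really updates both networks is to run one step on dummy data and compare the weights before and after. The sketch below assumes tiny stand-in modules (`TinyRpn` and the single conv layer are hypothetical, not the real `featureNet` or `RpnNet`) and random tensors in place of `get_train_data()` and `rpn_target_layer()`.

```python
import torch
import torch.nn.functional as F
from torch import nn, optim

torch.manual_seed(0)


class TinyRpn(nn.Module):
    """Hypothetical stand-in for RpnNet: returns class scores and box deltas."""

    def __init__(self, num_features):
        super().__init__()
        self.score = nn.Conv2d(num_features, 2, kernel_size=1)  # object / background
        self.dbox = nn.Conv2d(num_features, 4, kernel_size=1)   # box regression deltas

    def forward(self, f):
        return self.score(f), self.dbox(f)


feature_net = nn.Conv2d(3, 16, kernel_size=3, padding=1)  # stand-in for featureNet
rpn_net = TinyRpn(16)

params = list(feature_net.parameters()) + list(rpn_net.parameters())
optimizer = optim.SGD(params, lr=0.01, momentum=0.9, weight_decay=0.0005)

# Dummy batch and targets in place of the real data pipeline.
x = torch.randn(1, 3, 8, 8)
rpn_label = torch.randint(0, 2, (1, 8, 8))   # per-location class index
rpn_bbox_target = torch.randn(1, 4, 8, 8)    # per-location box targets

# Snapshot every parameter before the update.
before = [p.detach().clone() for p in params]

rpn_s, rpn_db = rpn_net(feature_net(x))
loss = F.cross_entropy(rpn_s, rpn_label) + F.smooth_l1_loss(rpn_db, rpn_bbox_target)

optimizer.zero_grad()
loss.backward()
optimizer.step()

# True for each parameter tensor whose values changed after the step.
changed = [not torch.equal(b, p.detach()) for b, p in zip(before, params)]
has_grad = [p.grad is not None for p in params]
```

If every entry of `changed` and `has_grad` is `True`, gradients flowed through both modules and the optimizer updated all of their parameters.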