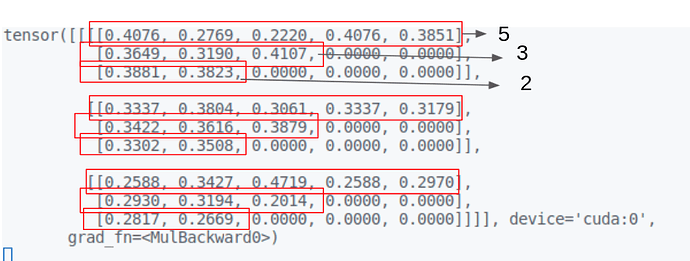

for example, I have a target tensor like this,

I also have a length tensor `[5, 3, 2]`.

If I directly flatten the tensor from dimension 2 onward, the shape becomes `[1, 3, 15]`.
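A minimal sketch of the plain flatten, assuming the target tensor has shape `[1, 3, 3, 5]` (three slices along dimension 2, one per entry of the length tensor, each padded to the maximum length 5). The random values are placeholders, not the data from the screenshot:

```python
import torch

# Assumption: target shape is [1, 3, 3, 5]; dim 3 is padded to the max length (5).
x = torch.rand(1, 3, 3, 5)

# Flattening dims 2 and 3 keeps every padded position: 3 * 5 = 15 per row.
flat = torch.flatten(x, start_dim=2)
print(flat.shape)  # torch.Size([1, 3, 15])
```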

How can I flatten the tensor according to the lengths along dimension 3, so that the result is `[1, 3, 10]`, where 10 = 5 + 3 + 2?
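One possible approach, sketched under the same `[1, 3, 3, 5]` shape assumption: build a boolean mask of shape `[3, 5]` that is `True` where the position is within each slice's length, then index the last two dimensions with it. The helper name `flatten_by_lengths` is hypothetical. Boolean indexing selects elements in row-major order, so slice 0's valid entries come first, then slice 1's, then slice 2's, and the result stays differentiable.

```python
import torch

def flatten_by_lengths(x: torch.Tensor, lengths: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: keep the first lengths[i] entries of x[:, :, i, :]
    and concatenate them along the last dim.

    x: [B, C, N, L]; lengths: [N] with values <= L. Returns [B, C, lengths.sum()].
    """
    positions = torch.arange(x.size(-1), device=x.device)               # [L]
    # mask[i, j] is True when position j lies inside the valid length of slice i.
    mask = positions.unsqueeze(0) < lengths.to(x.device).unsqueeze(1)   # [N, L]
    # Boolean mask over the last two dims -> one flattened last dim of size mask.sum().
    return x[:, :, mask]

x = torch.rand(1, 3, 3, 5)
lengths = torch.tensor([5, 3, 2])
out = flatten_by_lengths(x, lengths)
print(out.shape)  # torch.Size([1, 3, 10])
```

Because the output size depends on the mask contents, this kind of indexing generally needs a device-to-host synchronization when run on CUDA.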

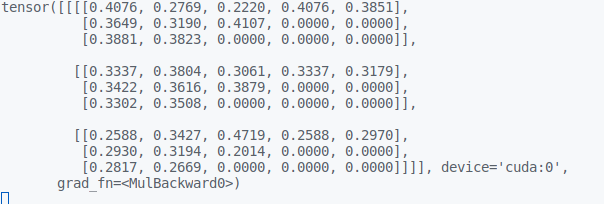

The desired output will be:

    tensor([[[0.4076, 0.2769, 0.2220, 0.4076, 0.3851, 0.3649, 0.3190, 0.4107,
              0.3881, 0.3823],
             [0.3337, 0.3804, 0.3061, 0.3337, 0.3179, 0.3422, 0.3616, 0.3879,
              0.3302, 0.3508],
             [0.2588, 0.3427, 0.4719, 0.2588, 0.2970, 0.2930, 0.3194, 0.2014,
              0.2817, 0.2669]]], device='cuda:0', grad_fn=<...>)
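Reading the desired output as the first 5 entries of slice 0, then the first 3 of slice 1, then the first 2 of slice 2, a simpler (loop-based) sketch slices each prefix and joins them with `torch.cat`. The helper name `flatten_by_lengths_cat` is hypothetical, and an `arange` tensor stands in for the real data so the layout is easy to check by eye:

```python
import torch

def flatten_by_lengths_cat(x: torch.Tensor, lengths: list[int]) -> torch.Tensor:
    # Hypothetical helper: take the valid prefix of each slice along dim 2,
    # then concatenate the prefixes along the last dim.
    pieces = [x[:, :, i, :n] for i, n in enumerate(lengths)]
    return torch.cat(pieces, dim=-1)

# Assumed shape [1, 3, 3, 5]; values 0..44 make the selected positions visible.
x = torch.arange(45.0).reshape(1, 3, 3, 5)
out = flatten_by_lengths_cat(x, [5, 3, 2])
print(out.shape)         # torch.Size([1, 3, 10])
print(out[0, 0].tolist())  # [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 10.0, 11.0]
```

The Python loop runs once per slice along dimension 2, which is fine for a handful of slices; for many slices a single masked index avoids building a long list of pieces.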

Thanks!