```python
import numpy as np
import matplotlib.pyplot as plt

def img_convert(tensor):
    image = tensor.clone().detach().numpy()
    image = image.transpose(1, 2, 0)  # (C, H, W) -> (H, W, C)
    print(image.shape)
    image = image * np.array(0.5,) + np.array(0.5,)  # undo the 0.5 mean / 0.5 std normalization
    image = image.clip(0, 1)
    return image

dataiter = iter(training_loader)
images, labels = next(dataiter)  # built-in next(); recent PyTorch DataLoader iterators have no .next()

fig = plt.figure(figsize=(25, 4))

for idx in np.arange(20):
    ax = fig.add_subplot(2, 10, idx + 1, xticks=[], yticks=[])
    plt.imshow(img_convert(images[idx]))
    ax.set_title([labels[idx].item()])
```

Can someone help me with this error? I can't figure out how to resolve it. First I had a grayscale problem, and after fixing it there is a new issue:

`Invalid dimension for image data`

Could you print the shape of `images[idx]` and of the numpy array returned by `img_convert`?

PS: You can add code snippets using three backticks ```


When I print the shapes, I find that the dimensions are reversed.

Output:

```
torch.Size([1, 28, 28])
(28, 28, 1)
```
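The second shape follows directly from `img_convert`: it moves the channel axis from first (the channels-first `C x H x W` layout PyTorch tensors use) to last, so a single-channel image ends up with a trailing axis of size 1. A minimal NumPy sketch of that reordering, using a dummy zero array in place of a real sample:

```python
import numpy as np

# Dummy stand-in for one grayscale sample in channels-first (C, H, W) layout.
chw = np.zeros((1, 28, 28), dtype=np.float32)

# Same reordering as in img_convert: (C, H, W) -> (H, W, C).
hwc = chw.transpose(1, 2, 0)
print(hwc.shape)  # (28, 28, 1)

# For grayscale data matplotlib wants (H, W), so drop the size-1 channel axis.
hw = hwc[:, :, 0]
print(hw.shape)  # (28, 28)
```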

However, if I apply the transpose directly to the tensor:

```python
images[idx].transpose(1, 2, 0)
```

it expects only 2 positional arguments. Which 2 should I give?
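`torch.Tensor.transpose(dim0, dim1)` swaps exactly two dimensions, unlike NumPy's `ndarray.transpose`, which takes the complete new axis order. To reorder all three dimensions of a tensor, `permute` is the PyTorch counterpart. A small sketch with a random tensor standing in for `images[idx]`:

```python
import torch

# Random stand-in for images[idx]: one channel, 28 x 28 pixels.
img = torch.rand(1, 28, 28)

# transpose swaps exactly two dims (here 0 and 2); note H and W also end up swapped.
swapped = img.transpose(0, 2)
print(swapped.shape)  # torch.Size([28, 28, 1])

# permute takes the full new order, matching NumPy's transpose(1, 2, 0).
hwc = img.permute(1, 2, 0)
print(hwc.shape)  # torch.Size([28, 28, 1])

# For a single-channel image, squeezing dim 0 gives the (H, W) layout matplotlib accepts.
hw = img.squeeze(0)
print(hw.shape)  # torch.Size([28, 28])
```

Because `transpose(0, 2)` also exchanges height and width, `permute(1, 2, 0)` (or simply `squeeze(0)` for grayscale) is the safer choice for plotting.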

If you are dealing with grayscale images, you should remove the channel dimension for matplotlib:

```python
import numpy as np
import matplotlib.pyplot as plt

plt.imshow(np.random.randn(24, 24, 3))  # works
plt.imshow(np.random.randn(24, 24))     # works
plt.imshow(np.random.randn(24, 24, 1))  # fails
```
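A few equivalent ways to drop the size-1 channel axis from an `(H, W, 1)` array before passing it to `plt.imshow`. A 2-D array is drawn with matplotlib's default colormap, so `cmap="gray"` is what makes it look grayscale. The random array is only a placeholder:

```python
import numpy as np
import matplotlib.pyplot as plt

img = np.random.rand(24, 24, 1)  # placeholder single-channel image, values in [0, 1)

a = img[:, :, 0]                 # index the only channel
b = img.squeeze(axis=2)          # remove the size-1 axis explicitly
c = img.reshape(img.shape[:2])   # reshape to (H, W)
print(a.shape, b.shape, c.shape)

# 2-D data is colour-mapped; cmap="gray" renders it as grayscale.
plt.imshow(b, cmap="gray")
plt.show()
```

Using `squeeze(axis=2)` rather than a bare `squeeze()` avoids accidentally dropping other size-1 axes.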


So what do you suggest I change in the code above?

Where should I remove the channel dimension: in the `img_convert` method or in the `for` loop below it?

I would add something like:

```python
if image.shape[2] == 1:
    image = image[:, :, 0]
return image
```

into your `img_convert` method.
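Putting the suggestion together, one possible version of `img_convert` that handles both grayscale and RGB inputs is sketched below. It assumes the images were normalized with mean 0.5 and std 0.5 (as the original `* 0.5 + 0.5` implies), and, as an addition for easy testing, it also accepts a plain NumPy array; the sample array is made up:

```python
import numpy as np

def img_convert(tensor):
    """Convert a (C, H, W) image into an array plt.imshow accepts."""
    # Accept a PyTorch tensor (detached, moved to CPU) or a plain NumPy array.
    if hasattr(tensor, "detach"):
        image = tensor.detach().cpu().numpy()
    else:
        image = np.asarray(tensor)
    image = image.transpose(1, 2, 0)   # (C, H, W) -> (H, W, C)
    image = image * 0.5 + 0.5          # undo a mean=0.5, std=0.5 normalization
    image = image.clip(0, 1)
    if image.shape[2] == 1:
        image = image[:, :, 0]         # (H, W, 1) -> (H, W) for grayscale
    return image

# Example with a made-up normalized grayscale sample with values in [-1, 1].
sample = np.linspace(-1, 1, 28 * 28).reshape(1, 28, 28)
out = img_convert(sample)
print(out.shape)  # (28, 28)
```

In the plotting loop, `plt.imshow(img_convert(images[idx]), cmap="gray")` would then show the digits in grayscale instead of the default colormap.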


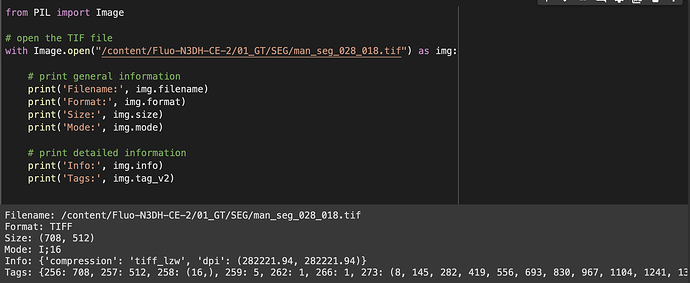

@ptrblck I have a TIFF file dataset, and the problem I'm having is that I can't plot the TIFF files. Here is some information about the TIFF file:

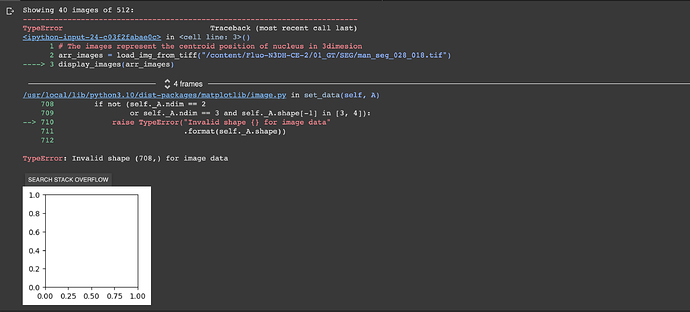

This is the error I get. Could you tell me where the problem is?

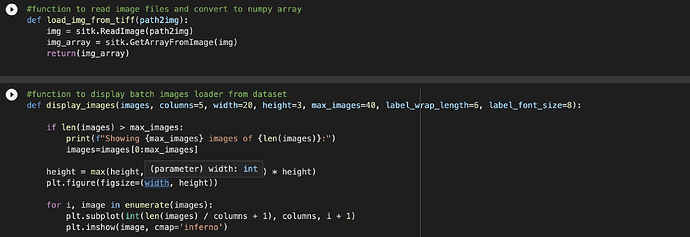

These are the functions I use to convert the TIFF file into an image array and display it.

Here is the link to the Colab notebook.

Please tell me how to resolve this problem.

I guess you are slicing the image in `diaplay_images` or iterating over each row later. Try to load a single image manually and display it directly, without any additional logic for plotting multiple images.
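A minimal sketch of that debugging step, assuming the file can be opened with Pillow (multi-band or floating-point TIFFs, as used for some scientific or satellite data, may need a dedicated reader such as `tifffile` instead). The helper names `load_tiff` and `show_single` and the path `example.tif` are hypothetical placeholders, not taken from the notebook:

```python
import numpy as np
import matplotlib.pyplot as plt
from PIL import Image

def load_tiff(path):
    """Hypothetical helper: read a TIFF file (first page only) into a NumPy array."""
    with Image.open(path) as img:
        return np.array(img)

def show_single(arr):
    """Hypothetical helper: display one image directly, with no grid or per-row logic."""
    print(arr.shape, arr.dtype)  # inspect the data before plotting
    if arr.ndim == 3 and arr.shape[2] == 1:
        arr = arr[:, :, 0]       # drop a singleton channel axis
    plt.imshow(arr, cmap="gray" if arr.ndim == 2 else None)
    plt.axis("off")
    plt.show()

# Usage (placeholder path):
# show_single(load_tiff("example.tif"))
```

If the printed shape is already something `plt.imshow` cannot handle (for example more than 4 channels), the problem lies in the data rather than in the plotting loop.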