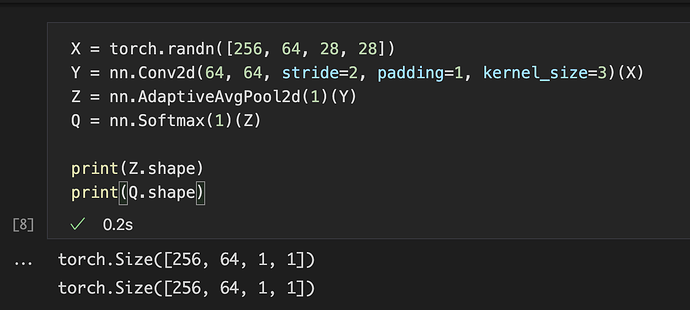

Is this code correct, and if not, what arguments should I pass to `AdaptiveAvgPool2d` and `Softmax`? I suspect something is wrong because the model gives lower accuracy than I expected.

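```python
import torch.nn as nn

# Inside the module's __init__:
self.layer_attend1 = nn.Sequential(
    nn.Conv2d(64, 64, kernel_size=3, stride=2, padding=1),
    nn.AdaptiveAvgPool2d(1),  # pool each channel to 1x1 -> (N, 64, 1, 1)
    nn.Softmax(dim=1),        # normalize across the 64 channels
)
```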

Mainly, I suspect the argument to `nn.Softmax` is wrong. Since the code runs without throwing any error, I find it hard to debug.
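When nothing errors out, one way to debug is to print the tensor shape after each stage and check which axis the softmax actually normalizes. Below is a minimal sketch, assuming a hypothetical input of 8 feature maps of size 64×32×32 (the real input size isn't stated in the question). After `AdaptiveAvgPool2d(1)` the tensor is `(N, 64, 1, 1)`, so `nn.Softmax(dim=1)` normalizes across the 64 channels and each sample's weights sum to 1. Softmax over a size-1 axis (such as `dim=-1` here) would instead return all ones.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Same layer stack as in the question (outside a module, so no `self.`).
layer_attend1 = nn.Sequential(
    nn.Conv2d(64, 64, kernel_size=3, stride=2, padding=1),
    nn.AdaptiveAvgPool2d(1),
    nn.Softmax(dim=1),
)

# Hypothetical input: batch of 8, 64 channels, 32x32 spatial size.
x = torch.randn(8, 64, 32, 32)

# Trace the output shape after each stage.
h = x
for layer in layer_attend1:
    h = layer(h)
    print(type(layer).__name__, tuple(h.shape))
weights = h

# dim=1 is the channel axis, so the 64 weights of each sample sum to 1.
print(weights.sum(dim=1).flatten())

# Softmax over a size-1 axis always yields 1.0, which would be a silent bug.
pooled = layer_attend1[:2](x)  # Conv2d + AdaptiveAvgPool2d only
print(nn.Softmax(dim=-1)(pooled).unique())
```

Whether a softmax across channels is what the model needs depends on how these weights are used downstream. With 64 channels the weights of each sample average exactly 1/64, so multiplying the feature maps by them scales the activations down considerably.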