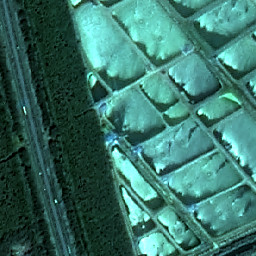

I’ve been studying remote sensing and semantic segmentation recently. I downloaded an open remote sensing LULC dataset (WHDLD); its images have the size (3, 256, 256). It has two folders: one contains the images and the other contains the mask images. I have loaded the data with a DataLoader following this thread: https://discuss.pytorch.org/t/how-make-customised-dataset-for-semantic-segmentation/30881/13. I also got a SegNet model from GitHub. But I don’t know how to start training.

I put my Colab code here: https://colab.research.google.com/drive/1Rp6mjYTWQTKkIXY32iT_cSX5eS_PJx5Z?usp=sharing

Based on the error in your notebook it seems the input is not a batch of images, but a single image without the batch dimension.

This issue is most likely caused by iterating over the Dataset instead of the DataLoader:

```python
for inputs, labels in dataloaders[phase].dataset:
```

Change it to:

```python
for inputs, labels in dataloaders[phase]:
```

and it should work, since the DataLoader will automatically create the batches.
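To illustrate the difference, here is a minimal sketch with random tensors standing in for the WHDLD images and masks (the `TensorDataset`, the sample count of 8 and the batch size of 4 are assumptions for illustration only). Iterating the Dataset yields single samples without a batch dimension, while the DataLoader collates them into batches:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for the real dataset: 8 RGB images (256x256) and 8 index masks.
images = torch.randn(8, 3, 256, 256)
masks = torch.randint(0, 6, (8, 256, 256))
dataset = TensorDataset(images, masks)
loader = DataLoader(dataset, batch_size=4, shuffle=True)

# Iterating the Dataset returns one sample at a time (no batch dimension).
img, mask = next(iter(dataset))
print(img.shape, mask.shape)  # torch.Size([3, 256, 256]) torch.Size([256, 256])

# Iterating the DataLoader stacks samples into a batch along a new dim 0.
batch_imgs, batch_masks = next(iter(loader))
print(batch_imgs.shape, batch_masks.shape)  # torch.Size([4, 3, 256, 256]) torch.Size([4, 256, 256])
```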

Thank you for your help, I have changed it. But now a new error is thrown:

RuntimeError: 1D target tensor expected, multi-target not supported

```
---------------------------------------------------------------------------
RuntimeError                              Traceback (most recent call last)
<ipython-input-20-cc88ea5f8bd3> in <module>()
      1 model_ft = train_model(model_ft, criterion, optimizer_ft, exp_lr_scheduler,
----> 2                        num_epochs=25)

4 frames
<ipython-input-19-c34fb749b111> in train_model(model, criterion, optimizer, scheduler, num_epochs)
     35 print(outputs)
     36 _, preds = torch.max(outputs, 1)
---> 37 loss = criterion(outputs, labels)
     38
     39 # backward + optimize only if in training phase
```

Is it because my loss function is set to cross entropy, and cross entropy loss does not apply to my dataset?

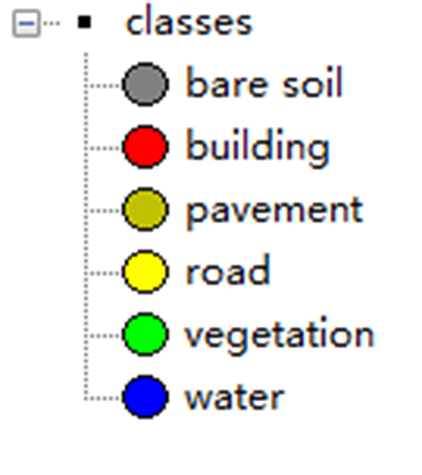

My dataset has 6 classes.

Could you check the shape of labels?

nn.CrossEntropyLoss can be used for multi-class classification or segmentation and expects the targets to have the shape [batch_size] for the former and [batch_size, height, width] for the latter case.

The targets should also contain the class indices in the range [0, nb_classes-1].
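A minimal sketch of both cases with random tensors (the batch size of 4 and the 256x256 resolution are just assumptions matching this thread). In each case the model output carries an extra class dimension at dim 1 that the target does not have, and the target holds `torch.long` class indices:

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()
nb_classes = 6

# Classification: logits [batch_size, nb_classes], targets [batch_size]
logits_cls = torch.randn(4, nb_classes)
target_cls = torch.randint(0, nb_classes, (4,))
loss_cls = criterion(logits_cls, target_cls)

# Segmentation: logits [batch_size, nb_classes, height, width],
# targets [batch_size, height, width] with class indices in [0, nb_classes - 1]
logits_seg = torch.randn(4, nb_classes, 256, 256)
target_seg = torch.randint(0, nb_classes, (4, 256, 256))  # dtype torch.int64 (long)
loss_seg = criterion(logits_seg, target_seg)

print(loss_cls.item(), loss_seg.item())  # two scalar losses
```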

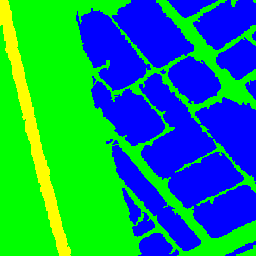

Based on the error message and your example pictures, I guess you might pass the target as a one-hot encoded mask in the shape [batch_size, nb_classes, height, width].

If that’s the case, use labels = torch.argmax(labels, dim=1) to create the expected target tensor.
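A small sketch of that conversion, assuming the one-hot mask has the class dimension at dim 1 (the one-hot tensor here is built from a random index mask purely for illustration):

```python
import torch
import torch.nn.functional as F

nb_classes = 6
# Hypothetical index mask, used only to construct a one-hot example.
index_mask = torch.randint(0, nb_classes, (4, 256, 256))

# One-hot mask in the shape [batch_size, nb_classes, height, width]
one_hot = F.one_hot(index_mask, num_classes=nb_classes).permute(0, 3, 1, 2)

# argmax over the class dimension recovers the index target [batch_size, height, width]
labels = torch.argmax(one_hot, dim=1)
print(one_hot.shape, labels.shape)  # torch.Size([4, 6, 256, 256]) torch.Size([4, 256, 256])
```

Since each pixel has exactly one active class, the argmax reproduces the original index mask exactly.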

These are the sizes of my outputs and labels:

```python
with torch.set_grad_enabled(phase == 'train'):
    outputs = model(inputs)
    #print(outputs.size())
    #print(labels.size())
    labels = torch.argmax(labels, dim=1)
    #print(labels.size())
    _, preds = torch.max(outputs, 1)
    loss = criterion(outputs, labels)
```

```
torch.Size([4, 6])
torch.Size([4, 256, 256])
```

I used `labels = torch.argmax(labels, dim=1)`, but it still can’t create the 1D target tensor.

Your model output of [4, 6] seems to be wrong for a segmentation use case, as it should be [batch_size, nb_classes, height, width].

Currently it seems your model outputs a single set of class scores for each input sample instead of one per pixel, so you might want to change the model architecture.
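As a rough illustration of the difference, the sketch below puts two heads on the same tiny encoder. Everything here (the one-layer `encoder`, `cls_head`, `seg_head`) is hypothetical and is not the SegNet from this thread: pooling and a linear layer collapse the spatial dimensions into one score vector per image, while a 1x1 convolution keeps them and produces per-pixel class scores, which are then upsampled back to the input resolution:

```python
import torch
import torch.nn as nn

nb_classes = 6

# Hypothetical tiny encoder: 256x256 input -> 16 channels at 128x128
encoder = nn.Sequential(
    nn.Conv2d(3, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.MaxPool2d(2),
)

# Classification-style head: removes the spatial dims -> [batch_size, nb_classes]
cls_head = nn.Sequential(
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(16, nb_classes),
)

# Segmentation-style head: 1x1 conv gives per-pixel class scores, then upsample
# back to the input size -> [batch_size, nb_classes, height, width]
seg_head = nn.Sequential(
    nn.Conv2d(16, nb_classes, kernel_size=1),
    nn.Upsample(scale_factor=2, mode="bilinear", align_corners=False),
)

x = torch.randn(4, 3, 256, 256)
features = encoder(x)
cls_out = cls_head(features)
seg_out = seg_head(features)
print(cls_out.shape)  # torch.Size([4, 6])
print(seg_out.shape)  # torch.Size([4, 6, 256, 256])
```

Only the second output shape can be passed to `nn.CrossEntropyLoss` together with a [batch_size, height, width] target.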

Okay, I will try. Thank you.

Hello @ptrblck, I changed my model. Now the output size is [batch_size, nb_classes, height, width] = [4, 6, 256, 256], and the label size is [batch_size, height, width] = [4, 256, 256]. But now it reports an error:

IndexError: Target 6 is out of bounds.

This is my model architecture:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from collections import OrderedDict


class SegNet(nn.Module):
    def __init__(self, input_nbr, label_nbr):
        super(SegNet, self).__init__()
        batchNorm_momentum = 0.1

        # Encoder
        self.conv11 = nn.Conv2d(input_nbr, 64, kernel_size=3, padding=1)
        self.bn11 = nn.BatchNorm2d(64, momentum=batchNorm_momentum)
        self.conv12 = nn.Conv2d(64, 64, kernel_size=3, padding=1)
        self.bn12 = nn.BatchNorm2d(64, momentum=batchNorm_momentum)

        self.conv21 = nn.Conv2d(64, 128, kernel_size=3, padding=1)
        self.bn21 = nn.BatchNorm2d(128, momentum=batchNorm_momentum)
        self.conv22 = nn.Conv2d(128, 128, kernel_size=3, padding=1)
        self.bn22 = nn.BatchNorm2d(128, momentum=batchNorm_momentum)

        self.conv31 = nn.Conv2d(128, 256, kernel_size=3, padding=1)
        self.bn31 = nn.BatchNorm2d(256, momentum=batchNorm_momentum)
        self.conv32 = nn.Conv2d(256, 256, kernel_size=3, padding=1)
        self.bn32 = nn.BatchNorm2d(256, momentum=batchNorm_momentum)
        self.conv33 = nn.Conv2d(256, 256, kernel_size=3, padding=1)
        self.bn33 = nn.BatchNorm2d(256, momentum=batchNorm_momentum)

        self.conv41 = nn.Conv2d(256, 512, kernel_size=3, padding=1)
        self.bn41 = nn.BatchNorm2d(512, momentum=batchNorm_momentum)
        self.conv42 = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.bn42 = nn.BatchNorm2d(512, momentum=batchNorm_momentum)
        self.conv43 = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.bn43 = nn.BatchNorm2d(512, momentum=batchNorm_momentum)

        self.conv51 = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.bn51 = nn.BatchNorm2d(512, momentum=batchNorm_momentum)
        self.conv52 = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.bn52 = nn.BatchNorm2d(512, momentum=batchNorm_momentum)
        self.conv53 = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.bn53 = nn.BatchNorm2d(512, momentum=batchNorm_momentum)

        # Decoder
        self.conv53d = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.bn53d = nn.BatchNorm2d(512, momentum=batchNorm_momentum)
        self.conv52d = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.bn52d = nn.BatchNorm2d(512, momentum=batchNorm_momentum)
        self.conv51d = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.bn51d = nn.BatchNorm2d(512, momentum=batchNorm_momentum)

        self.conv43d = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.bn43d = nn.BatchNorm2d(512, momentum=batchNorm_momentum)
        self.conv42d = nn.Conv2d(512, 512, kernel_size=3, padding=1)
        self.bn42d = nn.BatchNorm2d(512, momentum=batchNorm_momentum)
        self.conv41d = nn.Conv2d(512, 256, kernel_size=3, padding=1)
        self.bn41d = nn.BatchNorm2d(256, momentum=batchNorm_momentum)

        self.conv33d = nn.Conv2d(256, 256, kernel_size=3, padding=1)
        self.bn33d = nn.BatchNorm2d(256, momentum=batchNorm_momentum)
        self.conv32d = nn.Conv2d(256, 256, kernel_size=3, padding=1)
        self.bn32d = nn.BatchNorm2d(256, momentum=batchNorm_momentum)
        self.conv31d = nn.Conv2d(256, 128, kernel_size=3, padding=1)
        self.bn31d = nn.BatchNorm2d(128, momentum=batchNorm_momentum)

        self.conv22d = nn.Conv2d(128, 128, kernel_size=3, padding=1)
        self.bn22d = nn.BatchNorm2d(128, momentum=batchNorm_momentum)
        self.conv21d = nn.Conv2d(128, 64, kernel_size=3, padding=1)
        self.bn21d = nn.BatchNorm2d(64, momentum=batchNorm_momentum)

        self.conv12d = nn.Conv2d(64, 64, kernel_size=3, padding=1)
        self.bn12d = nn.BatchNorm2d(64, momentum=batchNorm_momentum)
        self.conv11d = nn.Conv2d(64, label_nbr, kernel_size=3, padding=1)

    def forward(self, x):
        # Stage 1
        x11 = F.relu(self.bn11(self.conv11(x)))
        x12 = F.relu(self.bn12(self.conv12(x11)))
        x1p, id1 = F.max_pool2d(x12, kernel_size=2, stride=2, return_indices=True)

        # Stage 2
        x21 = F.relu(self.bn21(self.conv21(x1p)))
        x22 = F.relu(self.bn22(self.conv22(x21)))
        x2p, id2 = F.max_pool2d(x22, kernel_size=2, stride=2, return_indices=True)

        # Stage 3
        x31 = F.relu(self.bn31(self.conv31(x2p)))
        x32 = F.relu(self.bn32(self.conv32(x31)))
        x33 = F.relu(self.bn33(self.conv33(x32)))
        x3p, id3 = F.max_pool2d(x33, kernel_size=2, stride=2, return_indices=True)

        # Stage 4
        x41 = F.relu(self.bn41(self.conv41(x3p)))
        x42 = F.relu(self.bn42(self.conv42(x41)))
        x43 = F.relu(self.bn43(self.conv43(x42)))
        x4p, id4 = F.max_pool2d(x43, kernel_size=2, stride=2, return_indices=True)

        # Stage 5
        x51 = F.relu(self.bn51(self.conv51(x4p)))
        x52 = F.relu(self.bn52(self.conv52(x51)))
        x53 = F.relu(self.bn53(self.conv53(x52)))
        x5p, id5 = F.max_pool2d(x53, kernel_size=2, stride=2, return_indices=True)

        # Stage 5d
        x5d = F.max_unpool2d(x5p, id5, kernel_size=2, stride=2)
        x53d = F.relu(self.bn53d(self.conv53d(x5d)))
        x52d = F.relu(self.bn52d(self.conv52d(x53d)))
        x51d = F.relu(self.bn51d(self.conv51d(x52d)))

        # Stage 4d
        x4d = F.max_unpool2d(x51d, id4, kernel_size=2, stride=2)
        x43d = F.relu(self.bn43d(self.conv43d(x4d)))
        x42d = F.relu(self.bn42d(self.conv42d(x43d)))
        x41d = F.relu(self.bn41d(self.conv41d(x42d)))

        # Stage 3d
        x3d = F.max_unpool2d(x41d, id3, kernel_size=2, stride=2)
        x33d = F.relu(self.bn33d(self.conv33d(x3d)))
        x32d = F.relu(self.bn32d(self.conv32d(x33d)))
        x31d = F.relu(self.bn31d(self.conv31d(x32d)))

        # Stage 2d
        x2d = F.max_unpool2d(x31d, id2, kernel_size=2, stride=2)
        x22d = F.relu(self.bn22d(self.conv22d(x2d)))
        x21d = F.relu(self.bn21d(self.conv21d(x22d)))

        # Stage 1d
        x1d = F.max_unpool2d(x21d, id1, kernel_size=2, stride=2)
        x12d = F.relu(self.bn12d(self.conv12d(x1d)))
        x11d = self.conv11d(x12d)  # raw per-pixel class scores (logits)

        return x11d

    def load_from_segnet(self, model_path):
        s_dict = self.state_dict()  # create a copy of the state dict
        th = torch.load(model_path).state_dict()  # load the weights
        # for name in th:
        #     s_dict[corresp_name[name]] = th[name]
        self.load_state_dict(th)
```

And my training code:

```python
learning_rate = 0.01
num_epochs = 3500

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
# The model has to live on the same device as the inputs.
model = SegNet(3, 6).to(device)

criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate, momentum=0.9, weight_decay=0.005)

phase = 'train'
for inputs, labels in dataloaders['train']:
    #inputs = inputs.unsqueeze(0)
    inputs = inputs.to(device)
    labels = labels.to(device)

    # zero the parameter gradients
    optimizer.zero_grad()

    with torch.set_grad_enabled(phase == 'train'):
        outputs = model(inputs)
        print(outputs.size())
        #print(outputs)
        #print(labels.size())
        #labels = torch.argmax(labels, dim=1)
        #print(labels.size())
        _, preds = torch.max(outputs, 1)
        loss = criterion(outputs, labels)

        # backward + optimize only in the training phase
        if phase == 'train':
            loss.backward()
            optimizer.step()
```

I also noticed something strange about my outputs. I expected the output pixel values to be the same as those of the mask:

```
tensor([[[6, 6, 6, ..., 4, 4, 4],
         [6, 6, 6, ..., 4, 4, 4],
         [6, 6, 6, ..., 4, 4, 4],
         ...,
         [6, 6, 6, ..., 4, 4, 4],
         [6, 6, 6, ..., 4, 4, 4],
         [6, 6, 6, ..., 4, 4, 4]],
...
```

But my outputs look like this:

```
tensor([[[[ 0.0337, -0.0683,  0.3683, ...,  0.2079,  0.1249,  0.1014],
          [ 0.0446,  0.1124,  0.2711, ..., -0.0012, -0.0184,  0.3003],
          [ 0.2303,  0.2826, -0.2298, ...,  0.3444,  0.1756,  0.3494],
          ...,
          [ 0.1159,  0.2834, -0.0358, ...,  0.0466,  0.4898,  0.0459],
          [ 0.1127,  0.3551,  0.2058, ...,  0.0727,  0.0439,  0.1214],
          [-0.1457,  0.2381, -0.2378, ...,  0.3178, -0.0067,  0.1345]],
...
```

Is this correct?

For 6 classes, your target tensor should contain class indices in the range [0, nb_classes-1] ([0, 5] in your case).
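A minimal sketch of the target remapping, assuming (based on the values 4 and 6 visible in the printed mask) that the WHDLD masks store labels as 1 to 6; please verify this against your data, since a different encoding such as RGB colours would need an explicit value-to-index lookup instead. It also shows that the float values in the model output are expected: they are raw logits (one score per class and pixel), and taking the argmax over dim 1 turns them into a predicted index mask with the same shape as the target:

```python
import torch

nb_classes = 6

# Hypothetical mask batch with labels stored as 1..6 (an assumption, check your masks).
labels = torch.randint(1, nb_classes + 1, (4, 256, 256))
print(labels.unique())

# Shift the labels into the class-index range [0, nb_classes - 1] expected by CrossEntropyLoss.
labels = labels - 1
assert labels.min() >= 0 and labels.max() <= nb_classes - 1

# The model returns raw logits; argmax over the class dim gives predicted indices.
outputs = torch.randn(4, nb_classes, 256, 256)  # stand-in for model(inputs)
preds = torch.argmax(outputs, dim=1)
print(preds.shape)  # torch.Size([4, 256, 256])
```

In practice the shift would go into the Dataset's `__getitem__` (or a target transform) so every batch is already in the right range.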