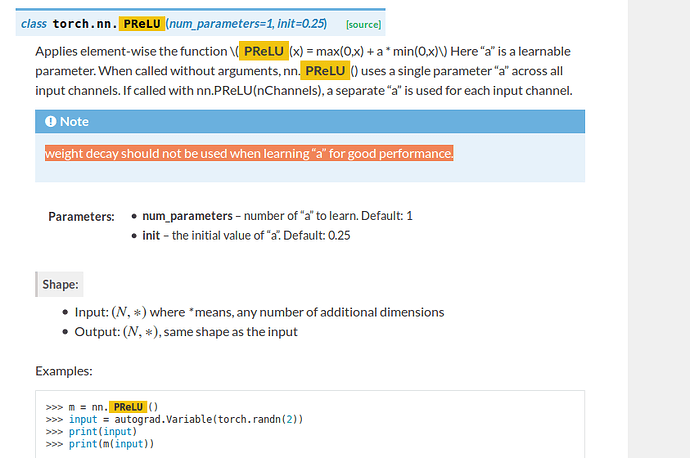

Hi, I would like to use PReLU. However, as the documentation above shows, it warns that weight decay should not be used with it.

My code does use weight decay, which is set on the optimizer like this:

```python
import torch.optim as optim

# Optimizer
optimizer = optim.Adam(net.parameters(),
                       lr=float(args.learningRate),
                       weight_decay=float(args.l2_weightDecay))
```

What I don't understand is how to specify explicitly that my PReLU layers should not be affected by weight decay.
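One approach that may fit here is optimizer parameter groups: `torch.optim` optimizers accept a list of dicts, and options set in a dict (such as `weight_decay`) override the defaults for just that group. The sketch below assumes this mechanism; the toy `net` and the values `learning_rate` and `l2_weight_decay` are hypothetical stand-ins for the real network and for `args.learningRate` / `args.l2_weightDecay`.

```python
import torch
import torch.nn as nn
import torch.optim as optim

# Hypothetical toy network standing in for `net`; it contains two PReLU layers.
net = nn.Sequential(
    nn.Linear(10, 20),
    nn.PReLU(),
    nn.Linear(20, 20),
    nn.PReLU(num_parameters=20),
    nn.Linear(20, 1),
)

# Placeholder values standing in for args.learningRate / args.l2_weightDecay.
learning_rate = 1e-3
l2_weight_decay = 1e-4

# Collect the ids of every parameter owned by a PReLU module.
prelu_param_ids = {
    id(p)
    for m in net.modules()
    if isinstance(m, nn.PReLU)
    for p in m.parameters()
}

# Split the parameters into PReLU slopes and everything else.
prelu_params = [p for p in net.parameters() if id(p) in prelu_param_ids]
other_params = [p for p in net.parameters() if id(p) not in prelu_param_ids]

# Each dict is a parameter group; options inside a dict override the defaults below.
optimizer = optim.Adam(
    [
        {"params": other_params},                       # uses the default weight_decay
        {"params": prelu_params, "weight_decay": 0.0},  # PReLU slopes are not decayed
    ],
    lr=learning_rate,
    weight_decay=l2_weight_decay,
)
```

Selecting the PReLU parameters by module type, rather than by parameter name, avoids depending on how the layers happen to be named inside the network.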

Thanks.