In a linear layer, I store the initial weight and bias in start_weight and start_bias. After training, I store the resulting weight and bias in end_weight and end_bias. I want to calculate how much the weight and bias changed over the whole of training.

For example:

difference_weight=end_weight-start_weight

difference_bias=end_bias-start_bias

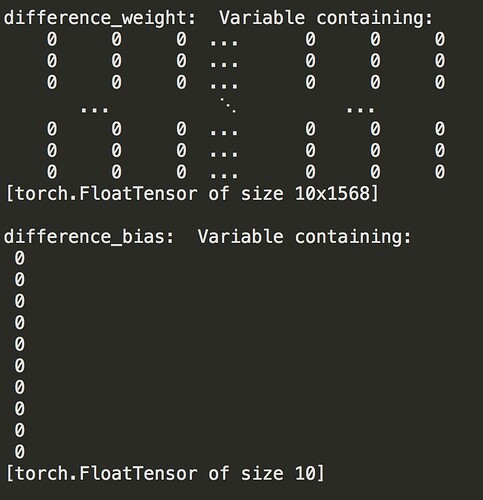

print ('difference_weight: ',difference_weight)

print ('difference_bias: ',difference_bias)

But I only get zeros in the output. I suspect the precision is too low. Could you tell me how to calculate the difference correctly?

Maybe it’s not an issue of accuracy. Are you sure your Tensors are not sharing the underlying data?

Have a look at this example.

In the first case, I assign a to a new name b, so b refers to the same tensor and shares the underlying data with a.

If I modify b in place, the values of a also change, so the difference will be all zeros.

In the second case, I clone the values of c, so that d has its own data now.

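import torch

a = torch.randn(10)

b = a # Share underlying data

b += 10 # In-place update, also changes a

print(a - b) # All zeros

c = torch.randn(10)

d = c.clone() # Independent copy with its own data

d += 10 # Only d changes

print(d - c) # Approximately 10 everywhere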
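If you are unsure whether two tensors share data, one quick check is to compare their data pointers. This is only a minimal sketch: the names shares_ab and shares_cd are made up for illustration, and equal pointers only catch tensors that start at the same memory address, not every kind of overlapping view.

```python
import torch

a = torch.randn(10)
b = a            # same tensor object, same underlying storage
c = torch.randn(10)
d = c.clone()    # independent copy with its own storage

# data_ptr() returns the address of the tensor's first element
shares_ab = a.data_ptr() == b.data_ptr()
shares_cd = c.data_ptr() == d.data_ptr()

print(shares_ab)  # True: a and b point to the same memory
print(shares_cd)  # False: d was cloned from c
```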

Btw, you can change the print precision with torch.set_printoptions(precision=10) if you need to, although I doubt it’s useful in this case (or for floats in general).
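Applied to the linear layer from the question, the same idea could look like the sketch below. I am assuming the zeros came from storing a reference to the parameters (the optimizer updates them in place), which the original post does not show. The layer sizes, random data, SGD optimizer and learning rate are all placeholders chosen for illustration, not your actual setup.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
layer = nn.Linear(4, 2)  # placeholder sizes

# Snapshot the initial parameters; detach().clone() gives independent copies
start_weight = layer.weight.detach().clone()
start_bias = layer.bias.detach().clone()

# Placeholder training loop on random data
optimizer = torch.optim.SGD(layer.parameters(), lr=0.1)
x = torch.randn(8, 4)
target = torch.randn(8, 2)
for _ in range(5):
    optimizer.zero_grad()
    loss = nn.functional.mse_loss(layer(x), target)
    loss.backward()
    optimizer.step()  # updates layer.weight and layer.bias in place

# Snapshot the trained parameters the same way
end_weight = layer.weight.detach().clone()
end_bias = layer.bias.detach().clone()

difference_weight = end_weight - start_weight
difference_bias = end_bias - start_bias
print('difference_weight: ', difference_weight)
print('difference_bias: ', difference_bias)
```

Without the clone() on the start values, start_weight would keep pointing at the live parameter, and the difference would come out as all zeros.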

Thank you!!! I’ve been struggling with this problem for 3 days. This is amazing!