I'm using PyTorch to train a model as a time-series predictor.

My model looks like this:

    import torch
    import torch.nn as nn
    import torch.nn.init as init


    class AttentionModel(nn.Module):
        def __init__(self, input_size, hidden_size, num_heads):
            super(AttentionModel, self).__init__()
            self.linear = nn.Linear(input_size, hidden_size)
            init.kaiming_normal_(self.linear.weight, nonlinearity='linear')
            init.constant_(self.linear.bias, 0)
            self.attention = nn.MultiheadAttention(hidden_size, num_heads, batch_first=True)
            # Custom initialization of the attention layer, if needed, can go here
            for p in self.attention.parameters():
                if p.dim() > 1:
                    nn.init.xavier_uniform_(p)
            # hidden_size * 3 because the flattened sequence has 3 time steps
            self.fc = nn.Linear(hidden_size * 3, 1)
            init.xavier_normal_(self.fc.weight)
            init.constant_(self.fc.bias, 0)

        def forward(self, x):
            # Map the input data to the hidden layer
            hidden_states = self.linear(x)
            # Apply MultiheadAttention (self-attention over the sequence)
            attn_output, _ = self.attention(hidden_states, hidden_states, hidden_states)
            # Take the output of the last time step as the representation of the whole sequence
            # context_vector = attn_output[-1, :, :]
            # print(context_vector.size())
            # Fully connected output layer
            flt = attn_output.flatten(1)
            # print(flt.size())
            output = self.fc(flt)
            return output
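
To make the expected shapes explicit, here is a minimal sketch that traces a batch through the same three layers. The sizes are assumptions for illustration only: 54 features per time step (matching the sample shown in this post), a window of 3 time steps (matching `hidden_size * 3`), and hypothetical values for `batch_size`, `hidden_size`, and `num_heads`. The input is random stand-in data, not my real data.

```python
import torch
import torch.nn as nn

# Assumed sizes for illustration; hidden_size and num_heads are hypothetical.
batch_size, seq_len, input_size = 8, 3, 54
hidden_size, num_heads = 64, 4  # hidden_size must be divisible by num_heads

linear = nn.Linear(input_size, hidden_size)
attention = nn.MultiheadAttention(hidden_size, num_heads, batch_first=True)
fc = nn.Linear(hidden_size * seq_len, 1)

# Random stand-in input with layout (batch, time steps, features)
x = torch.randn(batch_size, seq_len, input_size)

hidden_states = linear(x)  # (batch, seq_len, hidden_size)
attn_output, _ = attention(hidden_states, hidden_states, hidden_states)
flat = attn_output.flatten(1)  # (batch, seq_len * hidden_size)
output = fc(flat)  # (batch, 1)

print(hidden_states.shape, attn_output.shape, flat.shape, output.shape)
```

With `batch_first=True`, the input has to be laid out as (batch, time steps, features); otherwise the flattened size will not match the final linear layer.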

My data looks like this:

    tensor([ 4.6767e+04,  2.7280e+04,  4.6868e+04,  4.6706e+04,  4.6800e+04,
             1.3182e+08,  6.3223e+07,  1.3514e+03,  2.8179e+03,  4.6779e+04,
             4.6784e+04, -4.0732e+01, -3.6797e+01, -4.2207e+01,  4.7963e+01,
            -8.5589e+00,  4.7957e+01, -8.5687e+00, -4.2211e+01, -3.6795e+01,
            -3.8831e-03,  6.8489e-03,  1.0001e+02,  1.0732e-02, -7.0962e-02,
             9.9963e+01,  9.9975e+01,  4.3305e-01, -1.4060e+00,  2.3138e-01,
             1.1266e+00,  1.0921e+00, -1.4012e+00, -1.3839e+00, -1.3821e+00,
            -1.3997e+00,  6.9584e-01,  7.2648e-01, -1.3307e+00, -1.3038e+00,
            -1.2189e+00, -1.3154e+00, -9.6482e-01, -1.3155e+00, -9.6549e-01,
            -1.2189e+00, -1.3041e+00, -1.3487e+00, -1.3384e+00,  1.4074e+00,
             4.1653e-01, -1.2966e+00, -1.3047e+00, -1.2857e+00])

My label looks like this:

    tensor([46810.2383])
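
The feature magnitudes in this sample range from about 1e-3 to 1e8, and the label is around 4.7e4, so inputs and target sit on very different scales. One thing that could be checked is per-feature standardization of the inputs and the target. Below is a minimal sketch of that idea, not a confirmed fix. `fit_standardizer` is a hypothetical helper, and the tensors are random stand-in data with assumed shapes (100 samples, 3 time steps, 54 features).

```python
import torch


def fit_standardizer(values: torch.Tensor, eps: float = 1e-8):
    """Return per-column mean and std computed over dim 0 (the sample dimension)."""
    mean = values.mean(dim=0, keepdim=True)
    std = values.std(dim=0, keepdim=True).clamp_min(eps)  # avoid division by zero
    return mean, std


# Random stand-in data (assumption): features with widely different scales
torch.manual_seed(0)
scales = torch.logspace(-3, 8, steps=54)
train_x = torch.randn(100, 3, 54) * scales
train_y = 46000.0 + 500.0 * torch.randn(100, 1)

# Fit statistics on the training set only, pooling all time steps per feature
x_mean, x_std = fit_standardizer(train_x.reshape(-1, 54))
y_mean, y_std = fit_standardizer(train_y)

train_x_scaled = (train_x - x_mean) / x_std
train_y_scaled = (train_y - y_mean) / y_std

# Predictions made in scaled space are mapped back to the original units
pred_scaled = torch.zeros(5, 1)  # stand-in for model output
pred = pred_scaled * y_std + y_mean
```

The same `x_mean`, `x_std`, `y_mean`, and `y_std` from the training set would be reused for validation and test data, so that no statistics leak from held-out samples.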

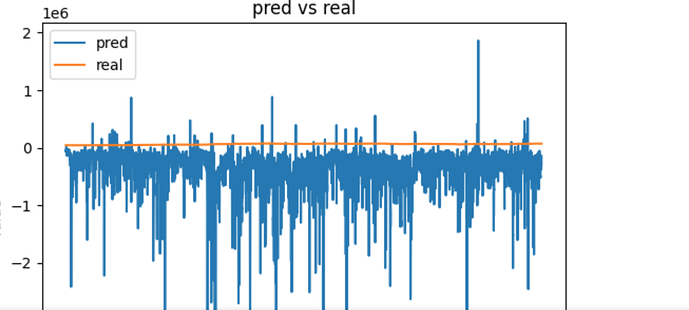

I want to use three data points to predict the label. But when I train my model, the loss is always very large. When I plot the model's predictions against the real labels, it looks like this:

test

Traning Loss: 156495051487.5493, Prediction Loss: 289177297578.6667
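
To put these numbers in context, here is a quick calculation, assuming the reported losses are mean squared errors (the loss function is an assumption here). Taking the square root gives the error in the same units as the label.

```python
import math

# Loss values copied from the output above; treated as MSE (assumption)
train_loss = 156495051487.5493
pred_loss = 289177297578.6667
label = 46810.2383  # the example label shown in this post

train_rmse = math.sqrt(train_loss)
pred_rmse = math.sqrt(pred_loss)

print(f"train RMSE: {train_rmse:.1f} ({train_rmse / label:.1f}x the label)")
print(f"prediction RMSE: {pred_rmse:.1f} ({pred_rmse / label:.1f}x the label)")
```

Under that assumption, the typical error is several times larger than the label itself, on the order of 4e5 to 5e5.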

Can you give me some advice about training?