I am looking for some heads up to train a conventional neural network model with bert embeddings that are generated dynamically (BERT contextualized embeddings which generates different embeddings for the same word which when comes under different context).

In normal neural network model, we would initialize the model with glove or fasttext embeddings like,

import torch.nn as nn

embed = nn.Embedding(vocab_size, vector_size)

embed.weight.data.copy_(some_variable_containing_vectors)

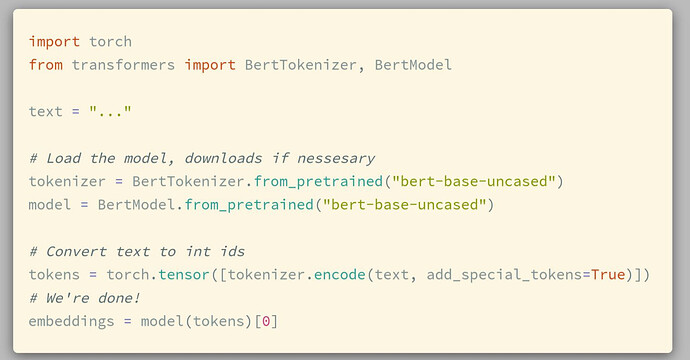

Instead of copying static vectors like this and use it for training, I want to pass every input to a BERT model and generate embedding for the words on the fly, and feed them to the model for training.

So should I work on changing the forward function in the model for incorporating those embeddings?

Any help would be appreciated!