Hi there,

I am currently following the PyTorch tutorial "Transfer Learning for Computer Vision Tutorial" (PyTorch Tutorials 2.2.0+cu121 documentation).

I have been able to complete the tutorial and train on both a CPU and a single GPU.

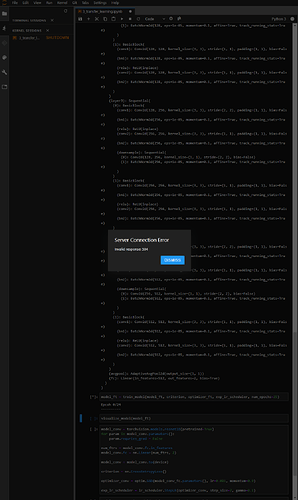

I am using a Google Cloud Platform Notebook Instance with 4 NVIDIA Tesla K80 GPUs. This is where I run into a "Server Connection Error (invalid response: 504)" when I train the network on more than one GPU.

A screenshot of the error is attached.
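A quick way to confirm that PyTorch actually sees all four K80s on the instance is a check like the one below. This is a minimal sketch: `list_visible_gpus` is a hypothetical helper name, and the only PyTorch calls it relies on are `torch.cuda.is_available`, `torch.cuda.device_count` and `torch.cuda.get_device_name`.

```python
import torch


def list_visible_gpus():
    """Return (index, name) pairs for every CUDA device PyTorch can see.

    Returns an empty list on a CPU-only machine.
    """
    if not torch.cuda.is_available():
        return []
    return [(i, torch.cuda.get_device_name(i)) for i in range(torch.cuda.device_count())]


if __name__ == "__main__":
    gpus = list_visible_gpus()
    print(f"{len(gpus)} GPU(s) visible")
    for index, name in gpus:
        print(f"  cuda:{index}: {name}")
```

On the setup described above, this would be expected to report four devices; fewer would mean `DataParallel` is not using all of them.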

I enable data parallelism with the following code:

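```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.optim import lr_scheduler
from torchvision import models

# train_model() is the training loop defined earlier in the tutorial

# Load a pretrained ResNet-18 and replace its final layer with a 2-class head
model_ft = models.resnet18(pretrained=True)
num_ftrs = model_ft.fc.in_features
model_ft.fc = nn.Linear(num_ftrs, 2)

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Using 4 GPUs: DataParallel replicates the model and splits each batch across them
if torch.cuda.device_count() > 1:
    model_ft = nn.DataParallel(model_ft)

model_ft = model_ft.to(device)

criterion = nn.CrossEntropyLoss()
optimizer_ft = optim.SGD(model_ft.parameters(), lr=0.001, momentum=0.9)
# Decay the learning rate by a factor of 0.1 every 7 epochs
exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)

model_ft = train_model(model_ft, criterion, optimizer_ft, exp_lr_scheduler, num_epochs=25)
```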
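To check whether the multi-GPU path itself works, separately from the notebook connection, a reduced smoke test along these lines could be run as a plain Python script on the instance. It is a sketch under stated assumptions: `smoke_test_data_parallel` is a hypothetical helper, and a tiny CNN with random tensors stands in for ResNet-18 and the tutorial's image data. It follows the same `DataParallel` pattern as the code above.

```python
import time

import torch
import torch.nn as nn


def smoke_test_data_parallel(batch_size=8, num_classes=2, steps=3):
    """Run a few forward/backward passes through nn.DataParallel on random data.

    A tiny CNN stands in for ResNet-18 (assumption) so the test runs in seconds.
    On a machine with fewer than two GPUs the model is used unwrapped, as in
    the original code. Returns the last loss and the elapsed wall-clock time.
    """
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    model = nn.Sequential(
        nn.Conv2d(3, 8, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),
        nn.Linear(8, num_classes),
    )
    if torch.cuda.device_count() > 1:
        # Replicate the model on every visible GPU; each batch is split along dim 0
        model = nn.DataParallel(model)
    model = model.to(device)

    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

    start = time.perf_counter()
    for _ in range(steps):
        # Random images and labels in place of a real DataLoader
        inputs = torch.randn(batch_size, 3, 32, 32, device=device)
        labels = torch.randint(0, num_classes, (batch_size,), device=device)
        optimizer.zero_grad()
        loss = criterion(model(inputs), labels)
        loss.backward()
        optimizer.step()
    if torch.cuda.is_available():
        # Wait for queued GPU work so the timing is meaningful
        torch.cuda.synchronize()
    return loss.item(), time.perf_counter() - start


if __name__ == "__main__":
    last_loss, seconds = smoke_test_data_parallel()
    print(f"last loss {last_loss:.4f} after {seconds:.2f} s")
```

If this finishes quickly as a script but training inside the notebook still fails, that may point towards the notebook connection rather than `DataParallel` itself.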

Please advise if I am doing something wrong when enabling multi-GPU training with PyTorch.