I tested the code from the "Optional: Data Parallelism" tutorial (PyTorch Tutorials 2.2.0+cu121 documentation), and it works well.

But when I changed the code from

    class Model(nn.Module):
        # Our model

        def __init__(self, input_size, output_size):
            super(Model, self).__init__()
            self.fc = nn.Linear(input_size, output_size)

        def forward(self, input):
            output = self.fc(input)
            print("\tIn Model: input size", input.size(),
                  "output size", output.size())
            return output

    model = Model(input_size, output_size)

to

    model = nn.Linear(input_size, output_size)

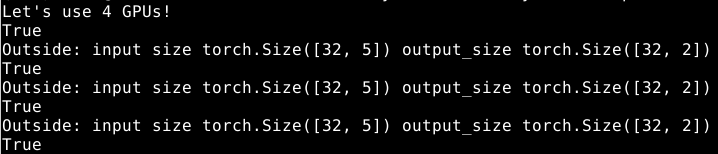

,the result became to

. WHY?
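For anyone trying to reproduce this, here is a minimal self-contained sketch that runs both variants on the same batch. The sizes (`input_size = 5`, `output_size = 2`, `batch_size = 30`) and the `run` helper are my own assumptions for illustration, not taken from the screenshot. One thing that is certain from the code itself: the `"In Model"` lines come from the `print` inside `Model.forward`, and a bare `nn.Linear` has no such `print`, so with `nn.Linear` only the line printed outside the model can appear. Whether that is the whole difference shown in the screenshot is an assumption.

```python
import torch
import torch.nn as nn

# Hypothetical sizes chosen for illustration only.
input_size, output_size, batch_size = 5, 2, 30


class Model(nn.Module):
    """Wrapper from the post: one linear layer plus a print inside forward()."""

    def __init__(self, input_size, output_size):
        super().__init__()
        self.fc = nn.Linear(input_size, output_size)

    def forward(self, input):
        output = self.fc(input)
        # This print is the only source of the "In Model" lines.
        print("\tIn Model: input size", input.size(),
              "output size", output.size())
        return output


def run(model, batch):
    """Hypothetical helper: wrap in DataParallel if several GPUs are visible."""
    if torch.cuda.device_count() > 1:
        model = nn.DataParallel(model)
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model.to(device)
    output = model(batch.to(device))
    print("Outside: input size", batch.size(), "output size", output.size())
    return output


batch = torch.randn(batch_size, input_size)
wrapped_out = run(Model(input_size, output_size), batch)    # prints "In Model" lines
plain_out = run(nn.Linear(input_size, output_size), batch)  # prints only "Outside"
```

Both calls return a tensor of the same shape; only the printed lines differ, because the printing lives in `Model.forward` rather than in `nn.DataParallel` or `nn.Linear`.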