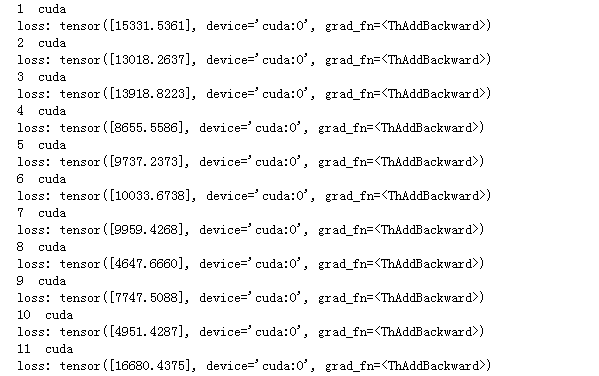

The loss is a CUDA tensor, but CPU usage during training is 100%, training is very slow, and the GPU does not seem to be used at all. What could be the cause?

What kind of data and model are you using?

If the model is quite small, the GPU workload might simply be too small to show a performance gain over the CPU.

As your code shows, the GPU is indeed being used.
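One rough way to see whether a workload is "too small" is to time the same operation on both devices at a small and a larger size. This is a minimal sketch, assuming PyTorch is installed; `time_matmul` is a hypothetical helper and the matrix sizes are arbitrary. Because CUDA kernels launch asynchronously, the sketch calls `torch.cuda.synchronize()` before reading the clock.

```python
import time
import torch

def time_matmul(device: torch.device, size: int, repeats: int = 10) -> float:
    """Return the average seconds per (size x size) matrix multiply on `device`."""
    a = torch.randn(size, size, device=device)
    b = torch.randn(size, size, device=device)
    # Warm-up so one-time costs (CUDA context creation, kernel loading) are not timed.
    torch.matmul(a, b)
    if device.type == "cuda":
        torch.cuda.synchronize()
    start = time.perf_counter()
    for _ in range(repeats):
        torch.matmul(a, b)
    # Wait for queued GPU work to finish before stopping the clock.
    if device.type == "cuda":
        torch.cuda.synchronize()
    return (time.perf_counter() - start) / repeats

devices = [torch.device("cpu")]
if torch.cuda.is_available():
    devices.append(torch.device("cuda"))

for size in (64, 1024):
    for dev in devices:
        print(f"size={size:5d} device={dev.type}: {time_matmul(dev, size) * 1e3:.3f} ms")
```

For tiny sizes the GPU often shows no advantage because kernel-launch and transfer overheads dominate; the gap typically appears only as the per-operation work grows.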

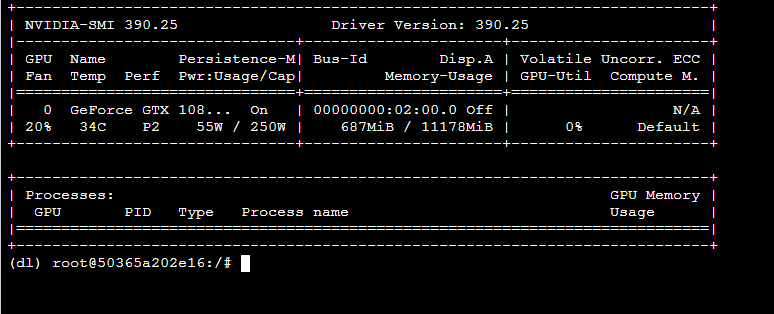

There is about 7M of TSV-format data in total. On my PC (CPU only), one epoch takes 95 minutes; on the GPU cloud machine, training is no faster. When I check GPU usage with `nvidia-smi`, I get the output shown in the screenshot.

It doesn't show any process using the GPU. I am very confused.

I see the same problem of high CPU usage and slow training with the fast.ai examples on Google Colab with a GPU runtime, using the PyTorch nightly build from the last couple of days. I tried a custom GPU instance on Google Cloud, but it shows the same behavior. I think there is a regression in PyTorch.

Could you share a code snippet that previously ran fine and now shows this issue? Note that there are indeed still some performance regressions in the current master, which are being worked on.

I should mention that I am new to PyTorch, so there might be something I am missing. Here is what I tried.

- Log in to https://colab.research.google.com/notebooks/welcome.ipynb

- Create a new notebook with a GPU runtime.

- Install the PyTorch nightly build and fastai:

  ```
  !pip install torch_nightly -f https://download.pytorch.org/whl/nightly/cu92/torch_nightly.html
  !pip install fastai
  ```

- Run the `examples/cifar.ipynb` notebook.

Executing `learn.fit_one_cycle` takes far longer than the time reported in that notebook. I printed the CUDA device name and the result of `torch.cuda.is_available()`, and both showed that the GPU is available. While running the notebook, I monitored CPU and GPU usage: CPU usage was high and the GPU was barely used, even though a running Python process was listed on the GPU.
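High CPU usage with a mostly idle GPU can also mean the input pipeline (decoding, augmentation) is the bottleneck rather than the model. A rough check is to measure how long each iteration waits for the next batch versus how long the training step itself takes. This is a minimal sketch on a synthetic CIFAR-shaped dataset; the model, sizes, and `num_workers=0` are assumptions, not the fast.ai notebook's actual settings.

```python
import time
import torch
from torch.utils.data import DataLoader, TensorDataset

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Hypothetical synthetic data and model standing in for CIFAR and the fast.ai learner.
dataset = TensorDataset(torch.randn(2048, 3, 32, 32), torch.randint(0, 10, (2048,)))
loader = DataLoader(dataset, batch_size=128, shuffle=True, num_workers=0)
model = torch.nn.Sequential(torch.nn.Flatten(), torch.nn.Linear(3 * 32 * 32, 10)).to(device)
opt = torch.optim.SGD(model.parameters(), lr=0.01)
loss_fn = torch.nn.CrossEntropyLoss()

data_time = compute_time = 0.0
end = time.perf_counter()
for x, y in loader:
    t0 = time.perf_counter()
    data_time += t0 - end  # time spent waiting for the DataLoader
    x, y = x.to(device), y.to(device)
    opt.zero_grad()
    loss = loss_fn(model(x), y)
    loss.backward()
    opt.step()
    if device.type == "cuda":
        torch.cuda.synchronize()  # include queued GPU work in the measurement
    end = time.perf_counter()
    compute_time += end - t0

print(f"data loading: {data_time:.3f}s, compute: {compute_time:.3f}s")
```

If data loading dominates, raising `num_workers` or simplifying CPU-side transforms is usually the first thing to try.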

I hope you have the CUDA toolkit installed properly.

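Using this:

```python
import torch

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
net = model().to(device)  # `model` is your network class; .to(device) also works on CPU-only machines
```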
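Moving the model with `.to(device)` (or `.cuda()`) only moves its parameters; every input batch has to be moved as well, otherwise PyTorch raises a device-mismatch error. A quick sanity check, sketched with a hypothetical `nn.Sequential` in place of `model()` and a hypothetical `on_device` helper:

```python
import torch
import torch.nn as nn

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

# Hypothetical small network standing in for `model()`.
net = nn.Sequential(nn.Linear(16, 32), nn.ReLU(), nn.Linear(32, 2)).to(device)

def on_device(tensor: torch.Tensor, device: torch.device) -> bool:
    """True if `tensor` lives on the same device type as `device`."""
    return tensor.device.type == device.type

# .to(device) on a module moves all of its parameters.
assert all(on_device(p, device) for p in net.parameters())

# Batches are not moved automatically: a DataLoader typically yields CPU tensors,
# so each batch needs its own .to(device) before the forward pass.
batch = torch.randn(8, 16)
print("batch before:", batch.device)
batch = batch.to(device)
out = net(batch)
print("output on:", out.device)
```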

My laptop has a Core i5, a 2 GB GeForce MX150, and 4 GB of RAM.

On the CPU, a simple NN took about 1 min 58 s.

On the GPU, it took 1 min 3 s, with CPU usage below 50%.

Yes, I did check that: the Colab GPU runtime came with CUDA 9.2 and TensorFlow 1.1. Unfortunately I don't have a previous baseline for this notebook; the only baseline I have is the time reported in the original notebook, about 7 minutes.

Is there a way to install a previous PyTorch nightly build, for example the one from October 5, 2018?

I don't know much about Colab.

This is my system:

- Core i5 (8th gen)
- 4 GB RAM
- 2 GB GeForce MX150
- OS: Lubuntu
- A Python virtualenv for all installations

I installed CUDA 10.0, even though it is not mentioned on the official PyTorch website and NVIDIA's GeForce pages don't list CUDA 10 as supported for the MX150. I'm taking a gamble purely for learning purposes.
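When comparing timings across machines (Colab, a cloud instance, a laptop), it helps to record which PyTorch build and CUDA version are actually in use. Prebuilt pip/conda PyTorch binaries generally bundle their own CUDA runtime, so `torch.version.cuda` reports the version the binary was compiled against, which may differ from a separately installed toolkit such as CUDA 10.0. A minimal sketch; `environment_report` is a hypothetical helper:

```python
import torch

def environment_report() -> dict:
    """Collect versions that matter when comparing CPU/GPU timings across machines."""
    available = torch.cuda.is_available()
    report = {
        "torch": torch.__version__,
        # CUDA version the PyTorch binary was built against (None for CPU-only builds).
        "built_with_cuda": torch.version.cuda,
        "cuda_available": available,
        "cudnn": torch.backends.cudnn.version() if available else None,
    }
    if available:
        report["gpu"] = torch.cuda.get_device_name(0)
        # (major, minor) compute capability of the first GPU.
        report["capability"] = torch.cuda.get_device_capability(0)
    return report

for key, value in environment_report().items():
    print(f"{key}: {value}")
```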