Unfortunately, the model with a GRU (or LSTM) layer is not learning! I’ve tried many combinations and feeding techniques, but it doesn’t learn at all.

However, when I replace the GRU layer with a GRUCell, the model works fine and solves the environment (in 6 seconds).

My question is: what’s the difference between GRUCell and the GRU layer, and why is this happening?

=====================================================

Here’s the logic I’m using:

Reinforce algorithm:

1 - Initialize the model with random weights

2 - Play N full episodes, gathering (state, action, reward) at every step

3 - For each episode k, calculate the discounted return from every step t onward:

𝑄(𝑘,𝑡) = ∑ 𝛾^𝑖 * 𝑟(𝑘,𝑡+𝑖)

4 - Calculate the loss function (an entropy bonus is added to it to improve exploration):

ℒ = − ∑ 𝑄(𝑘,𝑡) log (𝜋(𝑠(𝑘,𝑡) , 𝑎(𝑘,𝑡)))

5 - Perform an SGD update of the weights, minimizing the loss

6 - Repeat until solved
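As a rough illustration of steps 3 and 4, here is a minimal pure-Python sketch. The helper names (`discounted_returns`, `log_softmax`, `reinforce_loss`) and the toy logits are made up for this example and are not part of the script below. It averages over steps instead of summing, which is what the posted training code does.

```python
import math

def discounted_returns(rewards, gamma):
    """Return Q_t = sum_i gamma**i * r_(t+i) for every step t of one episode."""
    running = 0.0
    out = []
    for r in reversed(rewards):
        running = r + gamma * running
        out.append(running)
    return out[::-1]

def log_softmax(logits):
    """Numerically stable log-softmax over a list of floats."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]

def reinforce_loss(logits_per_step, actions, returns, entropy_beta=0.0):
    """Mean of -Q_t * log pi(a_t | s_t), minus entropy_beta * mean entropy."""
    policy_terms, entropies = [], []
    for logits, a, q in zip(logits_per_step, actions, returns):
        log_p = log_softmax(logits)
        policy_terms.append(-q * log_p[a])
        entropies.append(-sum(math.exp(lp) * lp for lp in log_p))
    n = len(policy_terms)
    return sum(policy_terms) / n - entropy_beta * sum(entropies) / n

# Toy episode: three steps, reward 1 each, uniform policy over two actions.
returns = discounted_returns([1, 1, 1], gamma=0.9)
loss = reinforce_loss([[0.0, 0.0]] * 3, [0, 1, 0], returns)
```

With a positive `entropy_beta`, a more uniform policy lowers the loss, which is the exploration bonus mentioned in step 4.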

When using the GRU layer:

a. hn = None at the beginning of each episode (t = 0)

b. hn from step t-1 is then fed to the network at step t
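A minimal sketch of points a and b, assuming a toy `nn.GRU` with made-up sizes and random data rather than the model from this post. It threads `hn` through one step at a time and compares the result with a single call on the whole sequence; the variable names are illustrative only.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Toy sizes chosen for illustration only.
gru = nn.GRU(input_size=4, hidden_size=8, num_layers=2, batch_first=True)
episode = torch.randn(1, 5, 4)  # (batch=1, seq_len=5, features=4)

with torch.no_grad():
    # a. hn = None at t = 0 (nn.GRU treats None as an all-zero hidden state)
    hn = None
    step_outputs = []
    for t in range(episode.size(1)):
        # b. hn from step t-1 is fed back in at step t
        out, hn = gru(episode[:, t:t + 1, :], hn)
        step_outputs.append(out)
    stepwise = torch.cat(step_outputs, dim=1)

    # The same episode in one call; the layer carries the hidden state itself.
    full, hn_full = gru(episode)

same_as_full = torch.allclose(stepwise, full, atol=1e-6)
```

Under these assumptions the two ways of feeding the data should agree up to floating-point tolerance, so stepping with a carried `hn` corresponds to passing the episode as one sequence of shape `(1, T, features)`.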

=========================================================

Here’s the code. To run it in GRUCell mode, just add the --cell argument.

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
Created on Sat Jun 26 14:39:33 2021

@author: Ayman Al Jabri
"""
import gym
import torch
import torch.nn as nn
import torch.nn.functional as F
import argparse
import numpy as np
from time import time
from collections import namedtuple
from itertools import count
from datetime import datetime, timedelta

Experience = namedtuple('Experience',['state','action','reward'])

class GRULayer(nn.Module):
    r"""GRU plain vanilla policy gradient model."""

    def __init__(self, obs_size, act_size, hid_size=128, num_layers=2):
        super().__init__()
        self.hid_size = hid_size
        self.num_layers = num_layers
        self.input = nn.Linear(obs_size, 32)
        self.gru = nn.GRU(32, hid_size, num_layers, batch_first=True)
        self.output = nn.Linear(hid_size, act_size)

    def forward(self, x, hx=None):
        r"""Return output and pad the input if observation is less than sequence."""
        batch_size = x.size(0)
        y = self.input(x)
        y = y.view(batch_size, 1, -1)
        if hx is None:
            hx = torch.zeros((self.num_layers, x.size(0), self.hid_size))
            y, hx = self.gru(y, hx)
        else:
            y, hx = self.gru(y, hx)
        y = self.output(F.relu(y.flatten(1)))
        return y, hx

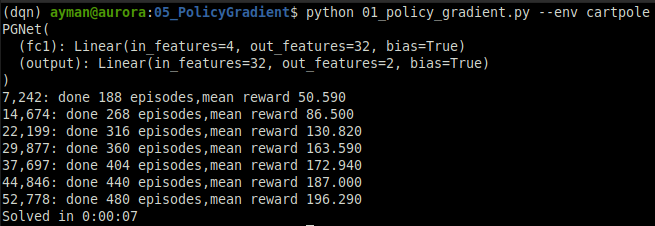

class GRUCell(nn.Module):
    r"""Plain vanilla policy gradient network."""

    def __init__(self, obs_size, act_size, hid_size=128):
        super().__init__()
        self.fc1 = nn.GRUCell(obs_size, hid_size)
        self.output = nn.Linear(hid_size, act_size, bias=True)

    def forward(self, x):
        """Feed forward."""
        y = F.relu(self.fc1(x))
        return self.output(y)

def discount_rewards(rewards, gamma):
    r"""
    Summary: calculate the discounted future rewards.
    Takes in a list of rewards and a discount rate.
    Returns the accumulated future values of these rewards.

    Example:
    >>> r = [1,1,1,1,1,1]
    >>> gamma = 0.9
    >>> discount_rewards(r, gamma)  # values rounded
    [4.68559, 4.0951, 3.439, 2.71, 1.9, 1.0]
    """
    sum_r = 0.0
    res = []
    for r in reversed(rewards):
        sum_r *= gamma
        sum_r += r
        res.append(sum_r)
    return list(reversed(res))

@torch.no_grad()
def generate_eipsodes(env, gamma, cell, n=3):
    r"""Yield n number of episodes' observations:(state,action,discounted_rewards,total_rewards,frames)"""
    episode = 0
    batch_total_rewards = 0
    hc = None
    act_size = env.action_space.n
    states,actions,rewards = [],[],[]
    dis_r = []
    state = env.reset()
    for frame in count():
        state_v = torch.FloatTensor([state])
        # if np.random.random() > 0.5: # placeholder to inject noise
        #     state_v.fill_(0.0)
        #     pass
        if cell:
            prob = net(state_v) ##Linear
        else:
            prob, hc = net(state_v, hc) ##GRU
        prob = torch.softmax(prob,dim=1).data.cpu().numpy()[0]
        action = np.random.choice(act_size, p=prob)
        last_state, reward, done, _ = env.step(action)
        states.append(state)
        actions.append(action)
        rewards.append(reward)
        if done:
            dis_r.extend(discount_rewards(rewards, gamma))
            batch_total_rewards += sum(rewards)
            rewards.clear()
            episode += 1
            state=env.reset()
            hc = None
            if episode ==n:
                yield (np.array(states,copy=False),
                       np.array(actions),
                       np.array(dis_r),
                       batch_total_rewards/n,
                       frame)
                states.clear()
                actions.clear()
                rewards.clear()
                dis_r.clear()
                batch_total_rewards = 0
                episode = 0
        state = last_state

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--cell', action='store_true', help='Use GRUCell instead of GRU layer')
    args = parser.parse_args()

    ENTROPY_BETA = 0.02
    GAMMA = 0.99
    HID_SIZE = 64
    NUM_LAYERS = 2
    SOLVE = 195
    LR = 0.01
    N_EPS = 1
    SEED = 155

    env = gym.make('CartPole-v0')
    env.seed(SEED)
    torch.manual_seed(SEED)
    np.random.seed(SEED)

    obs_size = env.observation_space.shape[0]
    act_size = env.action_space.n

    if args.cell:
        net = GRUCell(obs_size, act_size, HID_SIZE) #Linear
    else:
        net = GRULayer(obs_size, act_size,HID_SIZE,NUM_LAYERS) #GRU
    print(net)

    optimizer = torch.optim.Adam(net.parameters(), lr=LR)

    total_rewards = []
    print_time = time()
    start = datetime.now()
    frame = 0
    prev_frame = 0
    mean = None

    for episode,batch in enumerate(generate_eipsodes(env, GAMMA, args.cell, n=N_EPS)):
        states, actions, rewards, batch_total_rewards,frame = batch
        total_rewards.append(batch_total_rewards)
        mean_reward = np.mean(total_rewards[-100:])
        if time() - print_time > 1:
            speed = (frame - prev_frame)/(time()-print_time)
            prev_frame = frame
            print(
                f"{frame:,}: done {episode} episodes, mean reward {mean_reward:6.3f}, speed:{speed:.0f} fps", flush=True)
            print_time = time()
        if mean_reward > SOLVE:
            duration = timedelta(seconds=(datetime.now()-start).seconds)
            print(f'Solved in {duration}')
            break

        ### training ###
        states_v = torch.FloatTensor(states)
        batch_scale_v = torch.FloatTensor(discount_rewards(rewards, GAMMA))
        actions_v = torch.LongTensor(actions)

        optimizer.zero_grad()

        # policy loss
        if args.cell:
            logit = net(states_v) #Linear
        else:
            logit, hn = net(states_v) ##GRU
        log_p = F.log_softmax(logit, dim=1)

        # Gather probabilities with taken actions
        log_p_a = batch_scale_v * log_p[range(len(actions)), actions]
        policy_loss = - log_p_a.mean()

        # entropy loss
        probs_v = F.softmax(logit, dim=1)
        entropy = - (probs_v * log_p).sum(dim=1).mean()
        entropy_loss = - ENTROPY_BETA * entropy

        #total loss
        loss = policy_loss + entropy_loss
        loss.backward()
        optimizer.step()