I have 288 images and I am using a batch size of 4, so I think my `steps_per_epoch` is `288 // 4` (= 72).
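One way to double-check that number is to take it from the data loader instead of computing it by hand. `len(DataLoader)` returns the number of batches per epoch, which is the number of optimizer steps per epoch. The sketch below assumes a plain `DataLoader` without `drop_last`. The 288-sample `TensorDataset` is a hypothetical stand-in for the real image dataset.

```python
import math

import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for the real 288-image dataset: 288 dummy samples.
dataset = TensorDataset(torch.zeros(288, 1))
loader = DataLoader(dataset, batch_size=4, shuffle=True)

# len(loader) = number of batches per epoch = optimizer steps per epoch.
steps_per_epoch = len(loader)
print(steps_per_epoch)

# Without drop_last, a final partial batch still counts, so this is a
# ceiling division; 288 is divisible by 4, so it matches 288 // 4 here.
assert steps_per_epoch == math.ceil(len(dataset) / 4)
```

Passing `steps_per_epoch=len(loader)` to the scheduler keeps it correct if the dataset size or batch size changes later.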

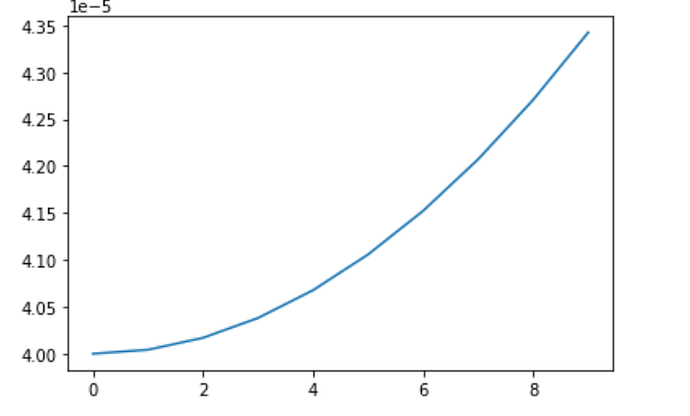

I plotted the learning rate as follows:

```python
import matplotlib.pyplot as plt
import torch

model = torch.nn.Linear(10, 1)  # placeholder; substitute the real model

optimizer = torch.optim.Adam(model.parameters(), lr=1e-4, weight_decay=1e-4)

scheduler = torch.optim.lr_scheduler.OneCycleLR(
    optimizer, pct_start=0.33, max_lr=1e-3,
    steps_per_epoch=288 // 4, epochs=10, last_epoch=-1,
)

lrs = []
for i in range(10):
    optimizer.step()
    lrs.append(optimizer.param_groups[0]["lr"])  # record LR before stepping the scheduler
    scheduler.step()

plt.plot(lrs)
plt.show()
```

This is the plot I get:

But the graph does not show the learning rate decreasing.
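One likely explanation: `OneCycleLR` plans its schedule over `epochs * steps_per_epoch` = 10 × 72 = 720 scheduler steps, and with `pct_start=0.33` the learning rate rises for roughly the first third of those steps before annealing. The loop above calls `scheduler.step()` only 10 times, so every plotted point falls inside the warm-up phase, where the learning rate only increases. A minimal sketch, assuming the goal is to see the whole schedule, steps the scheduler once per batch over all 720 steps. The `torch.nn.Linear` model is a hypothetical placeholder.

```python
import matplotlib.pyplot as plt
import torch

# Hypothetical placeholder model; the optimizer settings match the question.
model = torch.nn.Linear(10, 1)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4, weight_decay=1e-4)

epochs = 10
steps_per_epoch = 288 // 4               # 72 batches per epoch
total_steps = epochs * steps_per_epoch   # 720 scheduler steps in total

scheduler = torch.optim.lr_scheduler.OneCycleLR(
    optimizer, max_lr=1e-3, pct_start=0.33,
    steps_per_epoch=steps_per_epoch, epochs=epochs,
)

lrs = []
for _ in range(total_steps):  # one iteration per batch, not per epoch
    optimizer.step()
    lrs.append(optimizer.param_groups[0]["lr"])
    scheduler.step()

plt.plot(lrs)
plt.xlabel("step")
plt.ylabel("learning rate")
plt.show()
```

The curve should start at `max_lr / div_factor` (4e-5 with the default `div_factor=25`), peak near `max_lr` around step 237, and then decay. Calling `scheduler.step()` more than `total_steps` times raises an error, so in a real training loop it belongs inside the batch loop and runs exactly once per batch.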