Hi all,

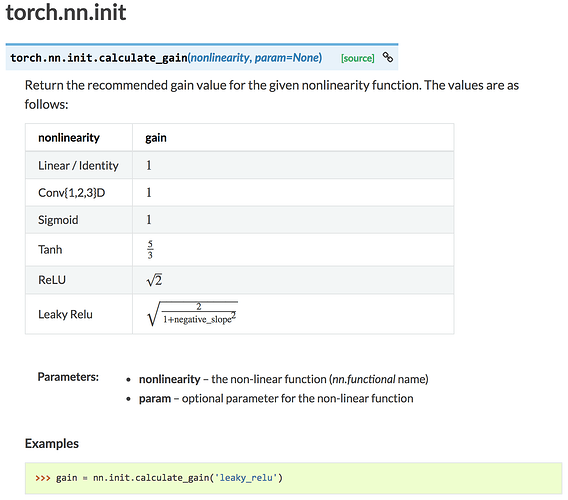

The official documentation for `nn.init.calculate_gain` is shown above.

I am quite confused about how to choose the `nonlinearity` argument:


If I have a network structure like this:

`(Conv2D → BN → LeakyReLU) → (Conv2D → BN → LeakyReLU) → (Conv2D → BN → LeakyReLU)`

How do I choose which option to use? Should it be:

`nn.init.xavier_normal_(m.weight.data, gain=nn.init.calculate_gain('conv2d'))`

or

`nn.init.xavier_normal_(m.weight.data, gain=nn.init.calculate_gain('leaky_relu'))`?
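A minimal sketch, assuming a recent PyTorch, that prints both candidate gains and applies Xavier-normal init to a three-block `Conv2d → BatchNorm2d → LeakyReLU` model like the one above. The channel sizes and the helpers `block` and `init_weights` are hypothetical, and using the gain of the activation that follows each conv is a common convention rather than something the docs prescribe. Note that `'conv2d'` simply returns 1, the same value as `'linear'`.

```python
import torch
import torch.nn as nn

# The two candidate gains from the question.
conv_gain = nn.init.calculate_gain('conv2d')             # linear/conv entries return 1
leaky_gain = nn.init.calculate_gain('leaky_relu', 0.01)  # sqrt(2 / (1 + 0.01**2))
print(conv_gain, leaky_gain)

NEGATIVE_SLOPE = 0.01  # nn.LeakyReLU's default negative_slope


def block(in_ch: int, out_ch: int) -> nn.Sequential:
    # One (Conv2D -> BN -> LeakyReLU) unit; the sizes are hypothetical.
    return nn.Sequential(
        nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1, bias=False),
        nn.BatchNorm2d(out_ch),
        nn.LeakyReLU(NEGATIVE_SLOPE),
    )


model = nn.Sequential(block(3, 16), block(16, 32), block(32, 64))


def init_weights(m: nn.Module) -> None:
    # Hypothetical helper: scale Xavier init by the gain of the activation after the conv.
    if isinstance(m, nn.Conv2d):
        gain = nn.init.calculate_gain('leaky_relu', NEGATIVE_SLOPE)
        nn.init.xavier_normal_(m.weight, gain=gain)
    elif isinstance(m, nn.BatchNorm2d):
        nn.init.ones_(m.weight)
        nn.init.zeros_(m.bias)


model.apply(init_weights)
out = model(torch.randn(2, 3, 8, 8))
print(out.shape)  # torch.Size([2, 64, 8, 8])
```

`model.apply` visits every submodule recursively, so the `isinstance` checks decide which layers get which init.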


What if my network has RNN layers (e.g. GRU or LSTM)?

Should it use `'sigmoid'`, since the gates in GRU and LSTM are activated by the sigmoid function?

`nn.init.xavier_normal_(m.weight.data, gain=nn.init.calculate_gain('sigmoid'))`

Or would `'tanh'` be the better choice?

`nn.init.xavier_normal_(m.weight.data, gain=nn.init.calculate_gain('tanh'))`
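For the RNN case, here is a sketch under some assumptions. PyTorch stacks the gate weights of `nn.LSTM` (input, forget, cell, output) and `nn.GRU` (reset, update, new) into single `weight_ih_l{k}` / `weight_hh_l{k}` matrices, so one option is to split them and pick the gain per gate: `'sigmoid'` for the gates and `'tanh'` for the LSTM cell candidate and the GRU new gate. The helper `init_rnn`, the per-gate split, and zeroing the biases are illustrative choices, not PyTorch's default (which draws every RNN parameter from U(-1/sqrt(hidden_size), 1/sqrt(hidden_size))).

```python
import torch
import torch.nn as nn

# Gate order in PyTorch's stacked weights (see the nn.LSTM / nn.GRU docs):
# LSTM: input, forget, cell (g), output -> only g uses tanh
# GRU:  reset, update, new (n)          -> only n uses tanh
GATE_ACTIVATIONS = {
    nn.LSTM: ['sigmoid', 'sigmoid', 'tanh', 'sigmoid'],
    nn.GRU: ['sigmoid', 'sigmoid', 'tanh'],
}


def init_rnn(rnn: nn.RNNBase) -> None:
    # Hypothetical helper: Xavier-normal per gate, gain taken from that gate's activation.
    # Assumes proj_size=0 (LSTM projections add a weight_hr_l{k} that is not gate-stacked).
    activations = GATE_ACTIVATIONS[type(rnn)]
    with torch.no_grad():
        for name, param in rnn.named_parameters():
            if name.startswith('weight'):
                # Split the (num_gates * hidden_size, fan_in) matrix into per-gate views.
                for chunk, act in zip(param.chunk(len(activations), dim=0), activations):
                    nn.init.xavier_normal_(chunk, gain=nn.init.calculate_gain(act))
            elif name.startswith('bias'):
                nn.init.zeros_(param)


lstm = nn.LSTM(input_size=10, hidden_size=20, num_layers=2, batch_first=True)
gru = nn.GRU(input_size=10, hidden_size=20, batch_first=True)
init_rnn(lstm)
init_rnn(gru)

out, (h, c) = lstm(torch.randn(4, 7, 10))
print(out.shape)  # torch.Size([4, 7, 20])
```

Calling `xavier_normal_` on the whole stacked matrix would instead compute `fan_out` from `num_gates * hidden_size`, which is why the sketch splits it first.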

Is it good enough to just use the default gain (i.e. `nn.init.xavier_normal_(m.weight.data)`, which uses `gain=1.0`) for all kinds of layers, whether Conv{1, 2, 3}D, RNN, etc.?
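To see what the gain actually changes, here is a sketch (assuming a recent PyTorch; the layer sizes are arbitrary) that compares the standard deviation Xavier-normal produces for a few gains against the formula std = gain * sqrt(2 / (fan_in + fan_out)). The default `gain=1.0` matches `calculate_gain('linear')`, so relying on the default amounts to scaling every layer as if no nonlinearity followed it.

```python
import math

import torch
import torch.nn as nn

torch.manual_seed(0)

# Arbitrary example layer; weight shape is (out_ch, in_ch, kH, kW) = (128, 64, 3, 3).
conv = nn.Conv2d(64, 128, kernel_size=3)
fan_in = 64 * 3 * 3    # in_ch * kH * kW
fan_out = 128 * 3 * 3  # out_ch * kH * kW

results = {}
for name in ['linear', 'sigmoid', 'tanh', 'relu', 'leaky_relu']:
    gain = nn.init.calculate_gain(name)
    # Xavier/Glorot normal samples N(0, std^2) with std = gain * sqrt(2 / (fan_in + fan_out)).
    expected_std = gain * math.sqrt(2.0 / (fan_in + fan_out))
    nn.init.xavier_normal_(conv.weight, gain=gain)
    results[name] = (expected_std, conv.weight.std().item())
    print(f"{name:>10}: gain={gain:.4f}  expected std={expected_std:.5f}  "
          f"empirical std={results[name][1]:.5f}")

# The default call uses gain=1.0, i.e. the same scale as calculate_gain('linear').
nn.init.xavier_normal_(conv.weight)
default_std = conv.weight.std().item()
print(f"   default: empirical std={default_std:.5f}")
```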

Many thanks!