Hi All,

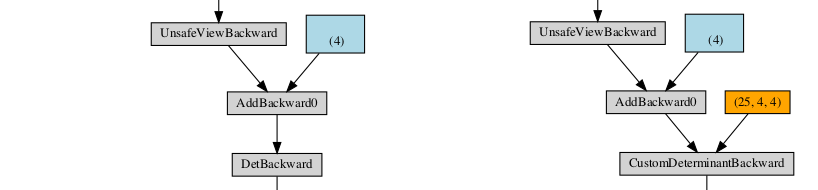

I was wondering whether it’s possible to visualize the backward pass, including the shapes of the Tensors involved in the computation. I’ve been writing a custom Autograd function with a custom Backward and a custom DoubleBackward, and when I run my network I get a size-mismatch error from that custom function. So I’ve clearly miscalculated the shape of some Tensor somewhere in the backward computation.
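One way to narrow this kind of bug down is to put explicit shape checks inside the custom backward itself. The sketch below is not the actual `CustomDeterminant` from this post; `CheckedDet` and `check_shape` are hypothetical names, and it assumes a batch of invertible square matrices and covers only the first backward. The point it illustrates is the invariant that the gradient returned for an input must have exactly that input's shape.

```python
import torch


def check_shape(name, tensor, expected):
    """Raise a readable error if `tensor` does not have the expected shape."""
    if tuple(tensor.shape) != tuple(expected):
        raise RuntimeError(
            f"{name}: got shape {tuple(tensor.shape)}, expected {tuple(expected)}"
        )


class CheckedDet(torch.autograd.Function):
    """Hypothetical stand-in for a custom determinant Function."""

    @staticmethod
    def forward(ctx, A):
        det = torch.det(A)  # shape (batch,) for A of shape (batch, n, n)
        ctx.save_for_backward(A, det)
        return det

    @staticmethod
    def backward(ctx, grad_out):
        A, det = ctx.saved_tensors
        # The incoming gradient must match the forward output.
        check_shape("grad_out", grad_out, det.shape)
        # d det(A) / dA = det(A) * inverse(A)^T, assuming A is invertible.
        grad_A = (grad_out * det)[..., None, None] * torch.inverse(A).transpose(-2, -1)
        # The returned gradient must match the forward input.
        check_shape("grad_A", grad_A, A.shape)
        return grad_A


A = torch.randn(2500, 2, 2, dtype=torch.double, requires_grad=True)
psi = CheckedDet.apply(A)
psi.sum().backward()
print(A.grad.shape)  # torch.Size([2500, 2, 2])
```

With checks like these, a wrong intermediate shape fails at the line that produced it, with both shapes in the message, rather than later inside the autograd engine.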

For example, I get the following error when running my code with my custom function:

    [W python_anomaly_mode.cpp:60] Warning: Error detected in CustomDeterminantBackward. Traceback of forward call that caused the error:
      File "file.py", line 307, in <module>
        myLocal = calc_local_energy(myNet, myX)
      File "file.py", line 236, in calc_local_energy
        kinetic = calc_kinetic_energy(Net, x)
      File "file.py", line 214, in calc_kinetic_energy
        psi_walker = Net(x_walker)
      File "/home/user/anaconda3/lib/python3.7/site-packages/torch/nn/modules/module.py", line 722, in _call_impl
        result = self.forward(*input, **kwargs)
      File "file.py", line 180, in forward
        Psi = self.slater_det(A)
      File "/home/user/anaconda3/lib/python3.7/site-packages/torch/nn/modules/module.py", line 722, in _call_impl
        result = self.forward(*input, **kwargs)
      File "file.py", line 152, in forward
        return CustomDeterminant.apply(A)
     (function print_stack)
    Traceback (most recent call last):
      File "file.py", line 318, in <module>
        loss.backward()
      File "/home/user/anaconda3/lib/python3.7/site-packages/torch/tensor.py", line 185, in backward
        torch.autograd.backward(self, gradient, retain_graph, create_graph)
      File "/home/user/anaconda3/lib/python3.7/site-packages/torch/autograd/__init__.py", line 127, in backward
        allow_unreachable=True)
    RuntimeError: The size of tensor a (2500) must match the size of tensor b (4) at non-singleton dimension 2

For context, 2500 is my batch size and 4 is the number of input nodes: I pass a Tensor of size (2500, 4) into a feed-forward-like network that returns a single value.
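For reference, this message is PyTorch's generic broadcasting error for element-wise operations. The snippet below reproduces the same wording with made-up shapes. The shapes `(1, 4, 2500)` and `(1, 4, 4)` are purely illustrative assumptions, not the actual Tensors from my code. Because the error names dimension 2, at least one of the two operands must have three or more dimensions.

```python
import torch

# Illustrative shapes only: the two operands disagree in dimension 2
# (2500 vs 4) and neither size is 1, so broadcasting cannot reconcile them.
a = torch.ones(1, 4, 2500)
b = torch.ones(1, 4, 4)

try:
    a * b
except RuntimeError as err:
    message = str(err)
    print(message)
```

A batch-sized axis lining up against a feature-sized axis like this often points to a missing `transpose` or `unsqueeze` somewhere in the backward formula.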

I assume the error is somewhere within my CustomDeterminantBackward class (as the python_anomaly_mode warning at the top of the trace indicates), but not much information is given about where exactly the mismatch between Tensor a and Tensor b occurs. Is there an easy way to track the shapes of the Tensors as they pass through the backward pass?
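One possible approach, sketched here under assumptions, is to attach `Tensor.register_hook` callbacks to intermediate Tensors so that the shape of each incoming gradient is recorded as the backward pass reaches it. The helper name `log_grad_shape` and the toy network are hypothetical. The built-in `torch.det` stands in for the custom function.

```python
import torch


def log_grad_shape(name, tensor, log):
    """Record (name, tensor shape, gradient shape) when backward reaches `tensor`."""
    def hook(grad):
        log.append((name, tuple(tensor.shape), tuple(grad.shape)))
    tensor.register_hook(hook)
    return tensor


shape_log = []
x = torch.randn(2500, 4, requires_grad=True)  # (batch, input nodes)
w = torch.randn(4, 4, requires_grad=True)     # toy weight matrix (assumption)

h = log_grad_shape("h", torch.tanh(x @ w), shape_log)
A = log_grad_shape("A", h.reshape(2500, 2, 2), shape_log)
psi = log_grad_shape("psi", torch.det(A), shape_log)

psi.sum().backward()

for name, tensor_shape, grad_shape in shape_log:
    print(f"{name}: tensor {tensor_shape}, grad {grad_shape}")
```

Placing one hook on the output of the custom function and one on its input brackets the custom backward: if the output hook fires but the input hook never does, the failure is inside that backward.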

Thanks in advance, and hopefully this question makes sense!