When I print batch, I get:

batch

[tensor([[[-0.0579, 0.0439, 0.0658, ..., -0.0565, 0.0413, -0.0023]],

[[ 0.9421, 0.8119, 0.6808, ..., 0.0039, 0.0252, 0.0431]],

[[ 0.7007, 0.5435, 0.2290, ..., -0.1226, 0.0346, -0.0850]],

...,

[[ 0.9834, 0.4084, 0.5638, ..., 0.0261, -0.0626, 0.0173]],

[[ 0.8450, 1.0068, 0.9290, ..., -0.0277, 0.0607, 0.0565]],

[[ 1.0653, 0.6558, 0.4438, ..., 0.0670, 0.1114, -0.0131]]]),

tensor([2, 3, 1, 1, 2, 3, 2, 4, 3, 1, 0, 2, 3, 0, 1, 2, 4, 2, 1, 2, 4, 1, 2, 0, 3, 1, 4, 2, 1, 4, 2, 3])]

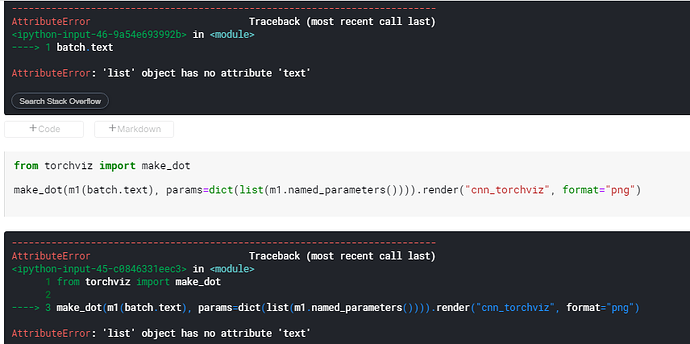

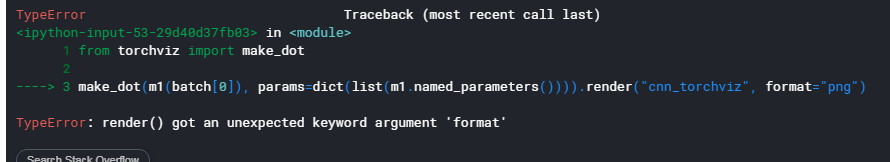

Also, after removing the .text part:

from torchviz import make_dot

make_dot(m1(batch), params=dict(list(m1.named_parameters()))).render("cnn_torchviz", format="png")

I get this error:

TypeError Traceback (most recent call last)

in

1 from torchviz import make_dot

2

----> 3 make_dot(m1(batch), params=dict(list(m1.named_parameters()))).render("cnn_torchviz", format="png")

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)

725 result = self._slow_forward(*input, **kwargs)

726 else:

--> 727 result = self.forward(*input, **kwargs)

728 for hook in itertools.chain(

729 _global_forward_hooks.values(),

in forward(self, input)

36 #input = input.unsqueeze(1)

37 #input = input.unsqueeze(0)

---> 38 x = self.conv1(input)

39 x = self.conv2(x)

40 x = self.conv3(x)

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)

725 result = self._slow_forward(*input, **kwargs)

726 else:

--> 727 result = self.forward(*input, **kwargs)

728 for hook in itertools.chain(

729 _global_forward_hooks.values(),

in forward(self, input)

52 #print("INPUT SHAPE SKIP")

53 #print(input.shape)

---> 54 conv1 = self.conv_1(input)

55 x = self.normalization_1(conv1)

56 x = self.swish_1(x)

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)

725 result = self._slow_forward(*input, **kwargs)

726 else:

--> 727 result = self.forward(*input, **kwargs)

728 for hook in itertools.chain(

729 _global_forward_hooks.values(),

/opt/conda/lib/python3.7/site-packages/torch/nn/modules/conv.py in forward(self, input)

257 _single(0), self.dilation, self.groups)

258 return F.conv1d(input, self.weight, self.bias, self.stride,

--> 259 self.padding, self.dilation, self.groups)

260

261

TypeError: conv1d(): argument 'input' (position 1) must be Tensor, not list
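
Reading the printed batch together with the last line of the traceback: batch looks like a Python list of two tensors (the inputs and the 32 class labels), and the whole list is being handed to the model, so conv1d receives a list instead of a tensor. Below is a minimal sketch of what seems to be happening and one likely way around it. The small model here is a hypothetical stand-in for m1 (whose definition is not shown), and the input length of 100 is an assumption; only the (32, 1, length) layout and the labels in the range 0 to 4 are taken from the printout.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for m1: a tiny 1-D CNN with 5 output classes.
model = nn.Sequential(
    nn.Conv1d(in_channels=1, out_channels=4, kernel_size=3, padding=1),
    nn.AdaptiveAvgPool1d(1),
    nn.Flatten(),
    nn.Linear(4, 5),
)

# Mimic the printed batch: a list holding [inputs, labels].
inputs = torch.randn(32, 1, 100)      # (batch, channels, length); length is assumed
labels = torch.randint(0, 5, (32,))   # class ids 0..4, as in the printout
batch = [inputs, labels]

# Passing the whole list raises a TypeError inside conv1d, as in the traceback.
try:
    model(batch)
except TypeError as err:
    print("TypeError:", err)

# Unpack the list and pass only the input tensor to the model.
x, y = batch
out = model(x)
print(out.shape)
```

If that is indeed the cause, the same change applied to the call above would be to pass batch[0] (or the unpacked input tensor) to m1 inside make_dot instead of batch itself.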