muhibkhan

October 16, 2019, 1:15pm

1

I don’t understand how torch.norm() behaves when calculating the L1 and L2 loss. With p=1 it gives me the L1 loss, but with p=2 it does not give me the L2 loss…

Can somebody explain it?

```python
import torch

a, b = torch.rand((2,2)), torch.rand((2,2))

var1 = torch.sum(torch.abs(a * b), 1)
var2 = torch.norm(a * b, 1, -1)
var3 = torch.sum((a * b) ** 2, 1)
var4 = torch.norm(a * b, 2, -1)
```

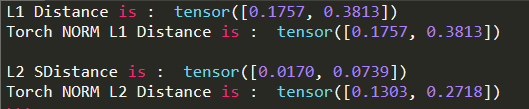

And the computed output is as:

```python
import torch

a, b = torch.rand((2,2)), torch.rand((2,2))

var1 = torch.sum(torch.abs(a * b), 1)
print("L1 Distance is : ", var1)

var2 = torch.norm(a * b, 1, -1)
print("Torch NORM L1 Distance is : ", var2)

# L2 norm = square root of the sum of squares (note the .sqrt())
var3 = torch.sum((a * b) ** 2, 1).sqrt()
print("L2 Distance is : ", var3)

var4 = torch.norm(a * b, 2, -1)
print("Torch NORM L2 Distance is : ", var4)
```

Your code was wrong: the L2 norm is the square root of the sum of squares, so `var3` needs `.sqrt()`. Without it you were computing the squared L2 norm. With the fix, both pairs match:

```
L1 Distance is : tensor([0.0924, 0.2528])
Torch NORM L1 Distance is : tensor([0.0924, 0.2528])
L2 Distance is : tensor([0.0893, 0.2327])
Torch NORM L2 Distance is : tensor([0.0893, 0.2327])
```
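To spell out the relationship: along the last dimension, `torch.norm(x, p=1, dim=-1)` computes `sum(|x_i|)` and `torch.norm(x, p=2, dim=-1)` computes `sqrt(sum(x_i**2))`. A plain sum of squares (what `var3` in the question computed) is the *squared* L2 norm, which is also what a summed squared-error ("L2") loss measures. The sketch below checks these identities under a few assumptions: a fixed seed (the thread used unseeded tensors, so the numbers will differ), a PyTorch release that provides `torch.linalg.vector_norm` (which the docs recommend over the deprecated `torch.norm`), and hypothetical `pred`/`target` tensors used only to illustrate the loss connection.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(0)  # assumption: fixed seed for reproducibility

# Same kind of input as in the thread: element-wise product of two random 2x2 tensors.
x = torch.rand(2, 2) * torch.rand(2, 2)

# L1 norm per row: sum of absolute values.
l1_manual = x.abs().sum(dim=-1)
l1_norm = torch.norm(x, p=1, dim=-1)

# L2 norm per row: square root of the sum of squares.
l2_manual = x.pow(2).sum(dim=-1).sqrt()
l2_norm = torch.norm(x, p=2, dim=-1)
l2_linalg = torch.linalg.vector_norm(x, ord=2, dim=-1)  # non-deprecated equivalent

# Sum of squares WITHOUT .sqrt() (the original var3) is the squared L2 norm.
sum_sq = x.pow(2).sum(dim=-1)

# Hypothetical prediction/target pair: a summed squared-error loss equals
# the squared L2 norm of their difference.
pred, target = torch.rand(4), torch.rand(4)
sse = F.mse_loss(pred, target, reduction="sum")
sq_norm_diff = torch.linalg.vector_norm(pred - target) ** 2

print(torch.allclose(l1_manual, l1_norm))    # True
print(torch.allclose(l2_manual, l2_norm))    # True
print(torch.allclose(l2_manual, l2_linalg))  # True
print(torch.allclose(sum_sq, l2_norm ** 2))  # True
print(torch.allclose(sse, sq_norm_diff))     # True
```

If by "L2 loss" you mean mean squared error, `F.mse_loss` with its default `reduction="mean"` divides that summed value by the number of elements.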


muhibkhan

October 17, 2019, 9:56am

3

Thanks @JuanFMontesinos, it's a big help.