Hi @ptrblck,

I tried everything I could think of, including the suggestion by @done1892 for `BCEWithLogitsLoss` in the thread "What is the weight values mean in torch.nn.CrossEntropyLoss?" (post #10).

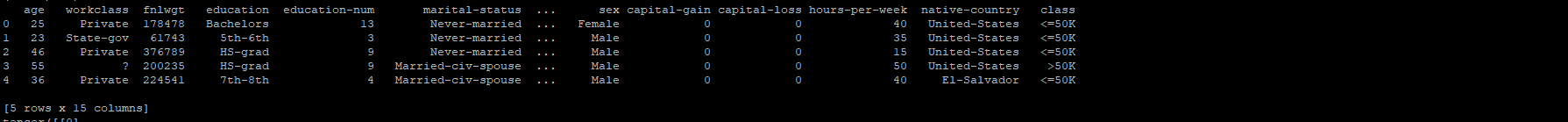

This is the class distribution in my training set:

| class | count |
|-------|------:|
| <=50K | 29705 |
| >50K  |  9369 |
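For reference, the 3.17 mentioned below is the negatives-to-positives ratio, which is the heuristic the `BCEWithLogitsLoss` documentation gives for `pos_weight` (e.g. 300 negatives and 100 positives give `pos_weight = 3`). A minimal sketch, assuming `>50K` is encoded as label 1 and `<=50K` as label 0:

```python
# Class counts from the training-set table above.
n_neg = 29705  # "<=50K", assumed to be encoded as label 0
n_pos = 9369   # ">50K", assumed to be encoded as label 1

# Heuristic from the BCEWithLogitsLoss docs: pos_weight = negatives / positives
pos_weight = n_neg / n_pos
print(f"pos_weight = {pos_weight:.2f}")  # pos_weight = 3.17
```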

So I tried `pos_weight` values of 3.17 (29705 / 9369) and 1/3.17, but neither the confusion matrix nor the accuracy changed at all.
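To see what `pos_weight` actually changes, here is the per-sample loss formula from the `BCEWithLogitsLoss` documentation written out in plain Python. This is a sketch assuming no reduction and no per-sample `weight`, and unlike the PyTorch implementation this naive version is not numerically stable for large logits. `pos_weight` multiplies only the term for positive targets, so, assuming `>50K` is label 1, a value of 3.17 upweights the minority class, while 1/3.17 downweights it further.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def weighted_bce_with_logits(logit: float, target: float, pos_weight: float = 1.0) -> float:
    """Per-sample loss: -[pos_weight * y * log(sigmoid(x)) + (1 - y) * log(1 - sigmoid(x))]."""
    p = sigmoid(logit)
    return -(pos_weight * target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


logit = -0.5  # example raw model output (made up)
print(weighted_bce_with_logits(logit, 1.0, 1.0))   # positive target, unweighted
print(weighted_bce_with_logits(logit, 1.0, 3.17))  # positive target: loss scaled by 3.17
print(weighted_bce_with_logits(logit, 0.0, 3.17))  # negative target: pos_weight has no effect
```

Because the gradient for positive samples is scaled the same way, different `pos_weight` values should produce different trained logits; if the reported predictions never move, the step that turns logits into labels is worth inspecting.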

Here is my code:

```python
import numpy as np
import torch
import torch.nn as nn
from sklearn.metrics import accuracy_score, classification_report, confusion_matrix

# Model, categorical_embedding_sizes, numerical_data, the train/test tensors and ep
# are defined earlier in my script (not shown here).

# pos_weight candidates: 2.5, 2.55, ..., 12.15 (step 0.05), each wrapped in a list
weights = [[round(2.5 + 0.05 * k, 2)] for k in range(194)]

rate = [0.001, 0.005, 0.009, 0.013, 0.017, 0.021, 0.025, 0.029, 0.033, 0.037,
        0.041, 0.045, 0.049, 0.053, 0.057, 0.061, 0.065, 0.069, 0.073, 0.077]

for lr in rate:
    for wg in weights:
        # Create model object
        model = Model(categorical_embedding_sizes, numerical_data.shape[1], 1, [512, 128, 16], p=0.4)

        # Create loss and optimizer function
        # Add weights
        # weights = [3.17, 1.0]
        # class_weights = torch.FloatTensor(weights)
        # loss_function = nn.CrossEntropyLoss(weight=class_weights)
        class_weight = torch.FloatTensor(wg)
        loss_function = nn.BCEWithLogitsLoss(pos_weight=class_weight)
        optimizer = torch.optim.Adam(model.parameters(), lr=lr)  # learning rate from the outer sweep

        # Train the model
        epochs = ep
        aggregated_losses = []
        train_outputs = train_outputs.float()  # BCEWithLogitsLoss expects float targets

        for i in range(1, epochs + 1):
            y_pred = model(categorical_train_data, numerical_train_data)
            # print(y_pred[:5])
            # print(y_pred.shape, train_outputs.shape)
            # out, inds = torch.max(y_pred, dim=1)
            # out = out.unsqueeze(1)
            single_loss = loss_function(y_pred, train_outputs)
            # single_loss = loss_function(y_pred, torch.max(train_outputs, 1)[1])
            aggregated_losses.append(single_loss.item())  # store a float, not the autograd graph

            # if i % 25 == 1:
            #     print(f'epoch: {i:3} loss: {single_loss.item():10.8f}')

            optimizer.zero_grad()
            single_loss.backward()
            optimizer.step()

        print(f'epoch: {i:3} loss: {single_loss.item():10.10f}')

        # plt.plot(range(epochs), aggregated_losses)
        # plt.ylabel('Loss')
        # plt.xlabel('epoch');

        # Make predictions
        model.eval()  # disable dropout (p=0.4) during evaluation
        with torch.no_grad():
            y_val = model(categorical_test_data, numerical_test_data)
            # out, inds = torch.max(y_val, dim=1)
            # out = out.unsqueeze(1)
            test_outputs = test_outputs.float()
            loss = loss_function(y_val, test_outputs)
            # loss = loss_function(y_val, torch.max(test_outputs, 1)[1])
        print(f'Validation Loss: {loss:.8f}')

        # Convert output values to either 0 or 1
        y_val = np.argmax(y_val, axis=1)
        # print(y_val[:5])
        print(confusion_matrix(test_outputs, y_val))
        # print(classification_report(test_outputs, y_val))
        print(accuracy_score(test_outputs, y_val))
```
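One thing that may explain the identical results, assuming `Model(..., 1, ...)` returns a single logit per sample (shape `[N, 1]`): `np.argmax(y_val, axis=1)` takes the argmax over a length-1 axis, which can only be index 0, so every test sample would be labelled `<=50K` no matter which `pos_weight` or learning rate was used. The sketch below uses made-up logits to show this in NumPy, together with the usual alternative for a single-logit binary classifier: thresholding the sigmoid at 0.5, which is the same as checking `logit > 0`. In the PyTorch code this would look something like `(torch.sigmoid(y_val) > 0.5).long().squeeze(1)`.

```python
import numpy as np

# Hypothetical logits for 4 samples from a model with a single output unit (shape [N, 1]).
logits = np.array([[2.3], [-1.1], [0.4], [-3.0]])

# argmax over a length-1 axis always returns index 0,
# so every sample is predicted as class 0 whatever the logits are.
argmax_preds = np.argmax(logits, axis=1)
print(argmax_preds)  # [0 0 0 0]

# Threshold the sigmoid instead: sigmoid(logit) > 0.5 is equivalent to logit > 0.
probs = 1.0 / (1.0 + np.exp(-logits))
threshold_preds = (probs > 0.5).astype(int).ravel()
print(threshold_preds)  # [1 0 1 0]
```

The 0.5 cutoff is a convention rather than a requirement; like `pos_weight`, the threshold shifts the trade-off between the two classes and can be tuned on held-out data.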

Please take a look and advise.