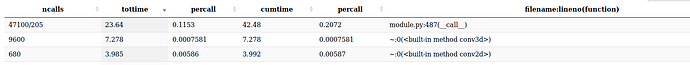

Hi, I’m trying to serialize my model using TorchScript. Since there is no control flow in the module, I decided to just trace it. But when I profile the script, the profile looks strange (profiling was done in synchronous mode, with `CUDA_LAUNCH_BLOCKING=1`).

The overall running time has increased, and there is a huge overhead when calling the `__call__` method. What is causing this? In my larger model I have traced only this one module, and other modules follow it.

The module that I’m trying to serialize is the Pose ResNet.
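For reference, here is a minimal sketch of this kind of setup, not the actual code. `TinyBackbone` is a hypothetical stand-in for the Pose ResNet, and `time_calls` is a hypothetical helper. It traces the module with `torch.jit.trace` and times eager versus traced calls after a few warm-up iterations, since the first calls to a traced module can be slower than later ones.

```python
import time

import torch
import torch.nn as nn


class TinyBackbone(nn.Module):
    """Hypothetical stand-in for the Pose ResNet (no control flow)."""

    def __init__(self):
        super().__init__()
        self.conv = nn.Conv2d(3, 8, kernel_size=3, padding=1)
        self.bn = nn.BatchNorm2d(8)
        self.relu = nn.ReLU()

    def forward(self, x):
        return self.relu(self.bn(self.conv(x)))


def time_calls(module, x, warmup=5, iters=20):
    """Return the mean seconds per call, measured after warm-up calls."""
    with torch.no_grad():
        for _ in range(warmup):
            module(x)
        if torch.cuda.is_available():
            torch.cuda.synchronize()  # wait for pending GPU work
        start = time.perf_counter()
        for _ in range(iters):
            module(x)
        if torch.cuda.is_available():
            torch.cuda.synchronize()
    return (time.perf_counter() - start) / iters


device = "cuda" if torch.cuda.is_available() else "cpu"
model = TinyBackbone().to(device).eval()
example = torch.randn(1, 3, 64, 64, device=device)

# Trace only this module; the rest of a larger model would stay in eager mode.
traced = torch.jit.trace(model, example)

eager_time = time_calls(model, example)
traced_time = time_calls(traced, example)

# Sanity check: the traced module should reproduce the eager output.
with torch.no_grad():
    max_diff = (model(example) - traced(example)).abs().max().item()

print(f"eager:  {eager_time * 1e3:.3f} ms per call")
print(f"traced: {traced_time * 1e3:.3f} ms per call")
print(f"max abs difference: {max_diff:.2e}")
```

Timing this way separates any one-time cost of the first traced calls from the steady-state cost per call, which may help narrow down where the `__call__` overhead comes from.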