I am trying to Finetune BertForSequenceClassification on custom dataset with Dsitributed training using Accelerate. Runtime environment is AWS Sagemaker notebook with 4 GPUs and 24 GB RAM.

Executed my code earlier for one step to ensure batch size does not cause memory issues and that I get train/val errors.

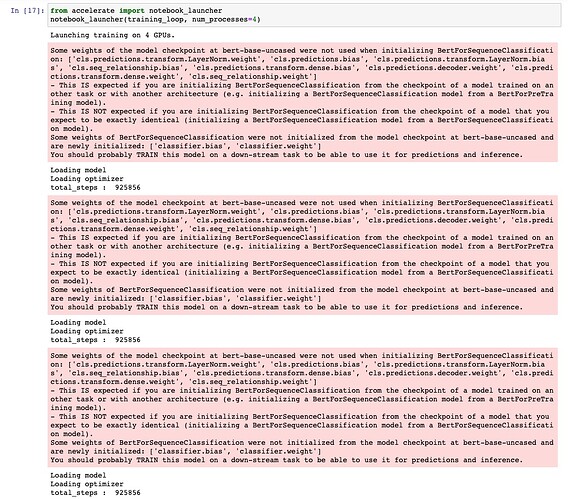

But notebook launcher now fails to execute the code, the cell simply completes execution without executing a single step of training.

def training_loop():

model_path = "models/category_classifier_bert.pt"

accelerator = Accelerator(mixed_precision="fp16")

device = accelerator.device

model = BertForSequenceClassification.from_pretrained("bert-base-uncased",num_labels=NUM_LABELS)

print("Loading model ")

learning_rate = 1e-5

optimizer = AdamW(model.parameters(), lr=learning_rate)

print("Loading optimizer")

train_dl, val_dl = get_dataloaders()

total_steps = int((len(train_dl) * EPOCHS)/4*BATCH_SIZE)

print("total_steps : ",total_steps)

# Set up the learning rate scheduler

scheduler = get_linear_schedule_with_warmup(optimizer,

num_warmup_steps=100, # Default value

num_training_steps=total_steps)

print("Loading model on accelerators .... ")

model, optimizer, train_dl, val_dl, scheduler = accelerator.prepare(model, optimizer, train_dl, val_dl, scheduler)

#val_dl = accelerator.prepare(val_dl)

metric = Accuracy(task="multiclass", num_classes=NUM_LABELS).to(device)

# MulticlassAccuracy(num_classes=NUM_LABELS).to(device)

print("Training ... ")

for epoch in range(EPOCHS):

model.train()

for step,batch in enumerate(train_dl):

input_ids = batch["input_ids"].to(device)

targets = batch["label"].to(device)

attention_mask = batch["attention_mask"].to(device)

optimizer.zero_grad()

output = model(input_ids, attention_mask)

loss = CrossEntropyLoss(output.logits, targets)

accelerator.backward(loss)

optimizer.step()

if step%5000 == 0:

print(step, loss)

accelerator.wait_for_everyone()

unwrapped_model = accelerator.unwrap_model(model)

accelerator.save(unwrapped_model.state_dict(), model_path)

from accelerate import notebook_launcher

notebook_launcher(training_loop, num_processes=4)

The cell completes execution with out performing a single step of training. Are there any cache or buffer issues that I needto take care of ? From the output, it appears that NOT all the GPUs are identified and hence the cell execution terminates before completing execution.