A nice intro to hyperparameter optimization for PyTorch models.

Thanks for sharing!

Do you have any comparison between Weights & Biases Sweeps and, e.g., Optuna?

Hi, thanks for your question!

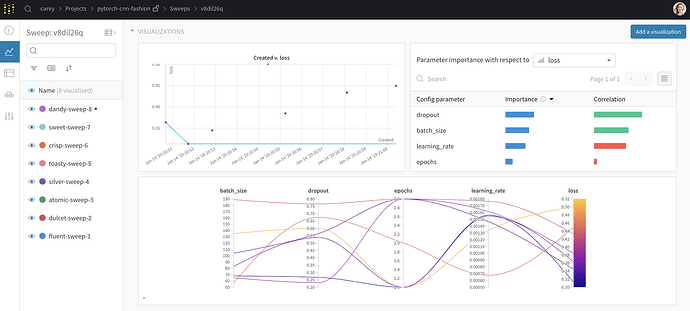

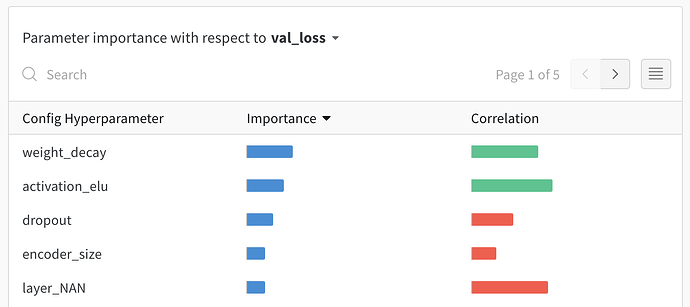

W&B supports all the features Optuna has (defining sophisticated parameter spaces, saving/resuming, distributed optimization) and also has more advanced search strategies like hyperopt. It also generates visualizations that help you see which hyperparameters mattered most and are worth digging into further.
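To make "defining a parameter space" a bit more concrete, here is a minimal sketch of what a sweep configuration can look like. The hyperparameter names (`lr`, `batch_size`, `dropout`), the metric name `val_loss`, the project name, and the `launch_sweep` helper are all illustrative assumptions, not taken from the tutorial; the `wandb` import is deferred so the config itself can be inspected without the library installed.

```python
# Sketch of a W&B sweep configuration (names below are illustrative assumptions).
sweep_config = {
    "method": "bayes",  # other options include "grid" and "random"
    "metric": {"name": "val_loss", "goal": "minimize"},
    "parameters": {
        # Sampled log-uniformly between min and max
        "lr": {"distribution": "log_uniform_values", "min": 1e-5, "max": 1e-1},
        # Categorical choice
        "batch_size": {"values": [32, 64, 128]},
        # Sampled uniformly between min and max
        "dropout": {"distribution": "uniform", "min": 0.0, "max": 0.5},
    },
    # Optional early stopping of poorly performing runs
    "early_terminate": {"type": "hyperband", "min_iter": 3},
}


def launch_sweep(train_fn, project="my-sweep-demo", count=20):
    """Hypothetical helper: register the sweep and run `count` trials locally.

    `train_fn` is assumed to call wandb.init(), read hyperparameters from
    wandb.config, and log "val_loss" via wandb.log().
    """
    import wandb  # imported lazily; requires `pip install wandb` and a login

    sweep_id = wandb.sweep(sweep_config, project=project)
    wandb.agent(sweep_id, function=train_fn, count=count)
    return sweep_id
```

Running `wandb.agent` with the same sweep ID on several machines is how the trials would be distributed, since each agent asks the sweep for its next set of hyperparameters.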

Here’s an example sweeps workspace if you’d like to play with them.
