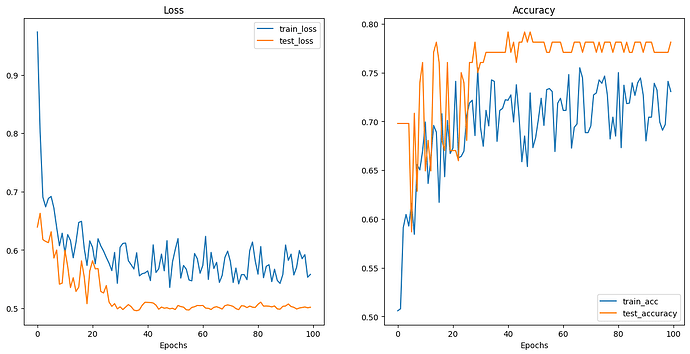

I am a beginner in deep learning, especially CNNs. I have a dataset of handwritten words: “TRUE”, “FALSE” and “NONE”. I want my model to recognize the words and assign each one to the correct class. I created a simple CNN model by copying the TinyVGG architecture. However, the loss and accuracy values are the same for every epoch. I tried changing the batch size, the number of epochs and the learning rate, but nothing changed. Since I am new to this and it is my first project, I do not know what changes to make to the model.

    import torch
    from torch import nn

    class CUSTOMDATASETV2(nn.Module):
        def __init__(self, input_shape: int, hidden_units: int, output_shape: int):
            super().__init__()
            self.block_1 = nn.Sequential(
                nn.Conv2d(in_channels=input_shape,
                          out_channels=hidden_units,
                          kernel_size=3,  # how big is the square that's going over the image?
                          stride=1,  # default
                          padding=1),  # options = "valid" (no padding) or "same" (output has same shape as input) or int for specific number
                nn.ReLU(),
                nn.Conv2d(in_channels=hidden_units,
                          out_channels=hidden_units,
                          kernel_size=3,
                          stride=1,
                          padding=1),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2,
                             stride=2)  # default stride value is same as kernel_size
            )
            self.block_2 = nn.Sequential(
                nn.Conv2d(hidden_units, hidden_units, 3, padding=1),
                nn.ReLU(),
                nn.Conv2d(hidden_units, hidden_units, 3, padding=1),
                nn.ReLU(),
                nn.MaxPool2d(2)
            )
            self.classifier = nn.Sequential(
                nn.Flatten(),
                # Where did this in_features shape come from?
                # It's because each layer of our network compresses and changes the shape of our input data.
                nn.Linear(in_features=hidden_units*16*16,
                          out_features=output_shape)
            )

        def forward(self, x: torch.Tensor):
            x = self.block_1(x)
            # print(x.shape)
            x = self.block_2(x)
            # print(x.shape)
            x = self.classifier(x)
            # print(x.shape)
            return x

    torch.manual_seed(42)
    model_2 = CUSTOMDATASETV2(input_shape=3,
                              hidden_units=10,
                              output_shape=len(class_names)).to(device)
    model_2
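
For reference, a minimal sketch of where `hidden_units*16*16` comes from. It assumes the images are resized to 64x64 before they reach the model (the transform is not shown in this post, so that size is an assumption). The helper names `conv_out` and `tinyvgg_flat_features` are made up for illustration and are not part of PyTorch. The sketch only applies the standard output-size arithmetic for convolution and pooling layers with dilation 1.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output height/width of a Conv2d or MaxPool2d layer (dilation 1, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


def tinyvgg_flat_features(image_size: int, hidden_units: int) -> int:
    """Number of features reaching nn.Linear for the two-block model above.

    Assumes square inputs of side `image_size` (hypothetical parameter).
    """
    size = image_size
    for _ in range(2):  # block_1 and block_2 have the same layer layout
        size = conv_out(size, kernel=3, stride=1, padding=1)  # conv keeps the size
        size = conv_out(size, kernel=3, stride=1, padding=1)  # conv keeps the size
        size = conv_out(size, kernel=2, stride=2)             # max pool halves it
    return hidden_units * size * size


print(tinyvgg_flat_features(image_size=64, hidden_units=10))  # 2560 = 10 * 16 * 16
```

If the images are a different size, the `in_features` value has to change with them, which is what the commented-out `print(x.shape)` calls in `forward` help to check.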

x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x-x

    from tqdm.auto import tqdm

    torch.manual_seed(42)

    # Measure time
    from timeit import default_timer as timer
    train_time_start_model_2 = timer()

    # Train and test model
    epochs = 10
    for epoch in tqdm(range(epochs)):
        print(f"Epoch: {epoch}\n---------")
        train_step(data_loader=train_dataloader,
                   model=model_2,
                   loss_fn=loss_fn,
                   optimizer=optimizer,
                   accuracy_fn=accuracy_fn,
                   device=device
        )
        test_step(data_loader=test_dataloader,
                  model=model_2,
                  loss_fn=loss_fn,
                  accuracy_fn=accuracy_fn,
                  device=device
        )

    train_time_end_model_2 = timer()
    total_train_time_model_2 = print_train_time(start=train_time_start_model_2,
                                                end=train_time_end_model_2,
                                                device=device)
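
`print_train_time` is not shown above. A minimal sketch of what such a helper might look like, assuming it only reports the elapsed time and returns it in seconds. The signature is inferred from the call above; the body is an assumption.

```python
from timeit import default_timer as timer


def print_train_time(start: float, end: float, device=None) -> float:
    """Print and return the elapsed time between start and end, in seconds."""
    total_time = end - start
    print(f"Train time on {device}: {total_time:.3f} seconds")
    return total_time


# Usage: wrap the code to be timed between two timer() calls.
start = timer()
_ = sum(range(100_000))  # stand-in for the training loop
end = timer()
elapsed = print_train_time(start=start, end=end, device="cpu")
```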