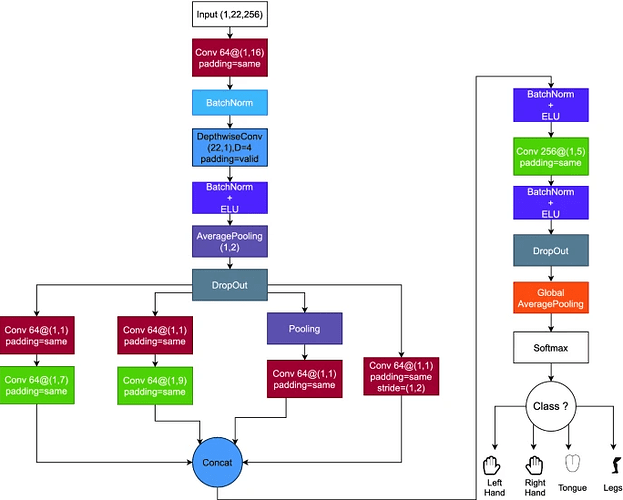

I'm building a model like the one in the figure above, but the outputs of the inception branches do not have matching sizes when I concatenate them with torch.cat, and I get this error:

RuntimeError: Sizes of tensors must match except in dimension 1. Expected size 128 but got size 64 for tensor number 2 in the list.

How can I build this model so that the concatenation works?

import torch
from torch import nn
import torch.nn.functional as F

class DepthwiseConv2d(torch.nn.Conv2d):
    def __init__(self,
                 in_channels,
                 depth_multiplier=1,
                 kernel_size=3,
                 stride=1,
                 padding=0,
                 dilation=1,
                 bias=True,
                 padding_mode='zeros'
                 ):
        out_channels = in_channels * depth_multiplier
        super().__init__(
            in_channels=in_channels,
            out_channels=out_channels,
            kernel_size=kernel_size,
            stride=stride,
            padding=padding,
            dilation=dilation,
            groups=in_channels,
            bias=bias,
            padding_mode=padding_mode
        )

class InceptionEEGNet(nn.Module):
    def __init__(self, batch_size):  # input = (1,22,256)
        super().__init__()
        self.batch_size = batch_size
        self.conv2d_1 = nn.Conv2d(1,64,(1,16),padding='same')
        self.batchnorm2d_1 = nn.BatchNorm2d(64)
        self.conv2d_2 = DepthwiseConv2d(64,depth_multiplier=4,kernel_size=(22,1),padding='valid')
        self.batchnorm2d_elu_1 = nn.Sequential(
            nn.BatchNorm2d(256),
            nn.ELU()
        )
        self.averagepooling = nn.Sequential(
            nn.AvgPool2d((1,2)),
            nn.Dropout2d(p=0.5)
        )
        self.inception_block_1 = nn.Sequential(
            nn.Conv2d(256,64,(1,1),padding='same'),
            nn.Conv2d(64,64,(1,7),padding='same'),
        )
        self.inception_block_2 = nn.Sequential(
            nn.Conv2d(256,64,(1,1),padding='same'),
            nn.Conv2d(64,64,(1,9),padding='same'),
        )
        self.inception_block_3 = nn.Sequential(
            nn.AvgPool2d((1,2)),
            nn.Conv2d(256,64,(1,1),padding='same'),
        )
        self.inception_block_4 = nn.Conv2d(256,64,(1,1),stride=(1,2))
        self.batchnorm2d_elu_2 = nn.Sequential(
            nn.BatchNorm2d(64),
            nn.ELU(),
            nn.Dropout2d(p=0.5)
        )
        self.conv2d_3 = nn.Conv2d(256,256,(1,5),padding='same')

    def forward(self,x):
        x = self.conv2d_1(x)           # (N, 64, 22, 256)
        x = self.batchnorm2d_1(x)
        x = self.conv2d_2(x)           # (N, 256, 1, 256)
        x = self.batchnorm2d_elu_1(x)
        x = self.averagepooling(x)     # (N, 256, 1, 128)
        print(x.shape)
        x1 = self.inception_block_1(x)  # (N, 64, 1, 128)
        print(x1.shape)
        x2 = self.inception_block_2(x)  # (N, 64, 1, 128)
        x3 = self.inception_block_3(x)  # (N, 64, 1, 64)
        x4 = self.inception_block_4(x)  # (N, 64, 1, 64)
        x = torch.cat((x1, x2, x3, x4), 1)  # the RuntimeError is raised here
        x = self.batchnorm2d_elu_2(x)
        x = self.conv2d_3(x)
        x = self.batchnorm2d_elu_2(x)
        x = F.adaptive_avg_pool3d(x, (1,1,3))
        x = x.squeeze()
        return x

net = InceptionEEGNet(10)
x = torch.rand(10,1,22,256)
print(net(x).shape)
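To see where the 128 and the 64 in the error message come from, the width of each branch can be traced by hand. The block below is a minimal sketch in plain Python (no torch needed), assuming the standard floor-mode output-size arithmetic that Conv2d and AvgPool2d use. The helper name `out_len` and the "possible fix" settings at the end are hypothetical and are not taken from the figure.

```python
def out_len(n, kernel, stride=1, padding=0, dilation=1):
    """Output length along one axis for a conv or pooling layer (floor mode)."""
    return (n + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1

# Width entering the inception branches: the input width of 256 is kept by
# conv2d_1 ('same') and by the (22,1) depthwise conv, then AvgPool2d((1,2))
# halves it.
w_in = out_len(256, kernel=2, stride=2)

branch_widths = {
    "inception_block_1": w_in,  # 'same' padding keeps the width
    "inception_block_2": w_in,  # 'same' padding keeps the width
    "inception_block_3": out_len(w_in, kernel=2, stride=2),  # AvgPool2d((1,2))
    "inception_block_4": out_len(w_in, kernel=1, stride=2),  # 1x1 conv, stride (1,2)
}
print(branch_widths)

# Hypothetical fix (an assumption, not read off the figure): keep the width
# in every branch, e.g. pool with kernel 3, stride 1, padding 1 and use
# stride 1 in the 1x1 conv of the last branch.
fixed_widths = {
    "inception_block_3": out_len(w_in, kernel=3, stride=1, padding=1),
    "inception_block_4": out_len(w_in, kernel=1, stride=1),
}
print(fixed_widths)
```

Under these assumptions, branches 1 and 2 come out with width 128 while branches 3 and 4 come out with width 64, which matches "Expected size 128 but got size 64 for tensor number 2". torch.cat along dimension 1 needs every other dimension to agree, so all four branches have to end at the same width. Separately, once the concatenation works the result has 4 × 64 = 256 channels, so reusing batchnorm2d_elu_2 (built with BatchNorm2d(64)) on it would likely raise a channel mismatch next.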