I'm studying RNNs and PyTorch.

I just want to predict an integer sequence.

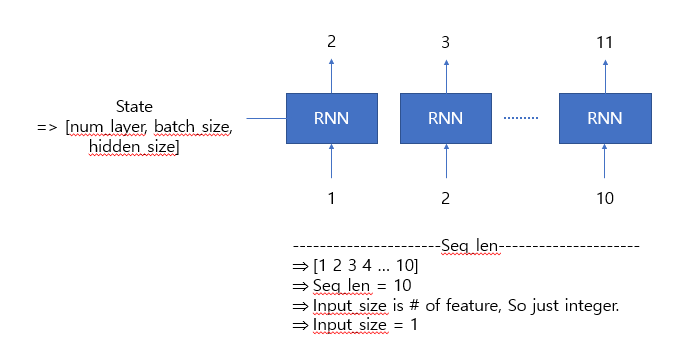

This picture shows what I want to do and my idea.

Please help me. I think my concept is wrong, and I want to fix it.

    import torch
    import torch.nn as nn
    from torch.autograd import Variable


    class myRNN(nn.Module):
        def __init__(self, input_size, hidden_size, num_layer):
            super(myRNN, self).__init__()
            self.input_size = input_size
            self.hidden_size = hidden_size
            self.num_layer = num_layer
            # arguments: input_size, hidden_size, num_layer
            # hidden_size is the block size within one layer
            self.rnn1 = nn.RNN(input_size, hidden_size, num_layer)

        def forward(self, input, hidden):
            output, hidden = self.rnn1(input, hidden)
            return output, hidden

        def init_hidden(self, batch_size):
            # initial hidden state: (num_layer, batch_size, hidden_size)
            hidden = Variable(torch.zeros(self.num_layer, batch_size, self.hidden_size))
            return hidden


    if __name__ == '__main__':
        seq_len = 10
        input_size = 1
        hidden_size = 10
        num_layer = 1
        batch_size = 1

        rnn = myRNN(input_size, hidden_size, num_layer)

        # loss, optimizer
        optimizer = torch.optim.Adam(rnn.parameters(), lr=0.0001)

        example = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
        # input is the sequence without its last element; label is the sequence shifted by one
        input_data = Variable(torch.FloatTensor(example[:-1]).view(seq_len, batch_size, input_size))
        label = Variable(torch.FloatTensor(example[1:]).view(seq_len, batch_size, input_size))
        print(input_data.size(), label.size())

        hidden = rnn.init_hidden(batch_size)
        print(hidden.size())

        loss = 0
        optimizer.zero_grad()

        output, hidden = rnn(input_data, hidden)
        # output: (seq_len, batch_size, hidden_size); label: (seq_len, batch_size, input_size)
        print('output / label', output.size(), label.size())

        loss = nn.functional.nll_loss(output, label)
        loss.backward()
        optimizer.step()
        print(loss)
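For reference, here is a minimal sketch of the inputs `nll_loss` expects, which differ from what the code above passes in. It assumes the task is framed as classification, with each integer treated as a class; `num_classes` and the random `scores` tensor are hypothetical stand-ins, not part of my model.

```python
import torch
import torch.nn.functional as F

seq_len = 10
num_classes = 12  # assumption: integers 0..11 are treated as class labels

# Stand-in for per-step class scores of shape (N, C). In a real model these
# would come from a layer applied to the RNN output, not from randn.
scores = torch.randn(seq_len, num_classes)
log_probs = F.log_softmax(scores, dim=1)  # nll_loss expects log-probabilities

# Targets are class indices: integer dtype, shape (N,), values in [0, C).
targets = torch.arange(2, 12)  # the labels 2..11 from the example sequence

loss = F.nll_loss(log_probs, targets)
print(loss.item())
```

With a float label of shape `(seq_len, batch_size, input_size)`, as in the code above, the shapes and dtype do not match this layout.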

(** PS **) After I implemented the RNN with different code (not the code above), I got float outputs instead of integers:

-0.9967 -0.4590 0.9998 -0.9367 0.5538 -1.0000 1.0000 -1.0000 1.0000
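The values above all lie in [-1, 1], which is consistent with `nn.RNN` applying `tanh` by default. A possible workaround, sketched below under the assumption that the task can be treated as regression, is to add a linear layer on top of the RNN output, train with mean squared error, and round the predictions. The class name `SeqRegressor`, the learning rate, and the step count are illustrative choices, not tuned values.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)


class SeqRegressor(nn.Module):  # hypothetical name, for illustration only
    def __init__(self, input_size=1, hidden_size=10, num_layers=1):
        super().__init__()
        self.rnn = nn.RNN(input_size, hidden_size, num_layers)
        self.head = nn.Linear(hidden_size, 1)  # maps hidden state to one value

    def forward(self, x, hidden=None):
        out, hidden = self.rnn(x, hidden)  # (seq_len, batch, hidden_size), tanh-bounded
        return self.head(out), hidden      # (seq_len, batch, 1), unbounded


example = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
x = torch.tensor(example[:-1], dtype=torch.float32).view(10, 1, 1)
y = torch.tensor(example[1:], dtype=torch.float32).view(10, 1, 1)

model = SeqRegressor()
optimizer = torch.optim.Adam(model.parameters(), lr=0.01)
loss_fn = nn.MSELoss()

for step in range(500):
    optimizer.zero_grad()
    pred, _ = model(x)
    loss = loss_fn(pred, y)
    loss.backward()
    optimizer.step()

# Round the float predictions to the nearest integer.
rounded = pred.detach().round().long().view(-1)
print(rounded.tolist())
```

How close the rounded values get to the targets depends on the training settings, so this is only a starting point.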