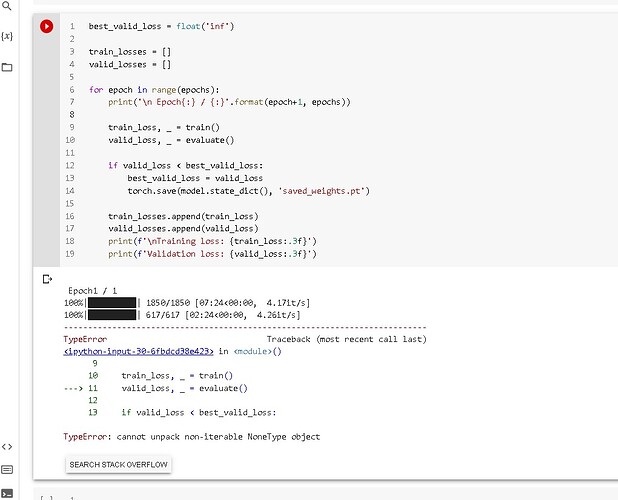

When I train the model, the following error is raised right after the validation loss is evaluated: `TypeError: cannot unpack non-iterable NoneType object`

Why does this error occur? What do I need to fix? Thank you!

Here is the code:

```python
import numpy as np
import torch
from tqdm import tqdm

# model, device, optimizer, cross_entropy, epochs, train_dataloader and
# val_dataloader are defined earlier in the script (not shown here).

def train():
    model.train()
    total_loss, total_accuracy = 0, 0
    total_preds = []

    for step, batch in tqdm(enumerate(train_dataloader), total=len(train_dataloader)):
        # move the batch to the target device and unpack it
        batch = [r.to(device) for r in batch]
        sent_id, mask, labels = batch

        model.zero_grad()
        preds = model(sent_id, mask)
        loss = cross_entropy(preds, labels)
        total_loss += loss.item()

        loss.backward()
        # clip gradients to a maximum norm of 1.0
        torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)
        optimizer.step()

        preds = preds.detach().cpu().numpy()
        total_preds.append(preds)

    avg_loss = total_loss / len(train_dataloader)
    total_preds = np.concatenate(total_preds, axis=0)

    return avg_loss, total_preds


def evaluate():
    model.eval()
    total_loss, total_accuracy = 0, 0
    total_preds = []

    for step, batch in tqdm(enumerate(val_dataloader), total=len(val_dataloader)):
        batch = [t.to(device) for t in batch]
        sent_id, mask, labels = batch

        # gradients are not needed during validation
        with torch.no_grad():
            preds = model(sent_id, mask)
            loss = cross_entropy(preds, labels)
            total_loss = total_loss + loss.item()
            preds = preds.detach().cpu().numpy()
            total_preds.append(preds)

    avg_loss = total_loss / len(val_dataloader)
    total_preds = np.concatenate(total_preds, axis=0)


best_valid_loss = float('inf')
train_losses = []
valid_losses = []

for epoch in range(epochs):
    print('\n Epoch {:} / {:}'.format(epoch + 1, epochs))

    train_loss, _ = train()
    valid_loss, _ = evaluate()

    # save the weights whenever the validation loss improves
    if valid_loss < best_valid_loss:
        best_valid_loss = valid_loss
        torch.save(model.state_dict(), 'saved_weights.pt')

    train_losses.append(train_loss)
    valid_losses.append(valid_loss)

    print(f'\nTraining loss: {train_loss:.3f}')
    print(f'Validation loss: {valid_loss:.3f}')
```
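The error most likely comes from the line `valid_loss, _ = evaluate()`. As posted, `evaluate()` ends after `total_preds = np.concatenate(...)` and has no `return` statement, so Python implicitly returns `None`. Unpacking `None` into two names then raises this `TypeError`. `train()` does not have the problem because it ends with `return avg_loss, total_preds`. The minimal sketch below reproduces the behaviour without PyTorch; `evaluate_without_return` and `evaluate_with_return` are hypothetical stand-ins for the two versions of `evaluate()`, and the loss/prediction values are made up.

```python
def evaluate_without_return():
    # hypothetical stand-in for the posted evaluate(): computes values but never returns them
    avg_loss = 0.5
    total_preds = [0.1, 0.9]
    # no return statement, so the function implicitly returns None


def evaluate_with_return():
    # same computation, but hands the results back like train() does
    avg_loss = 0.5
    total_preds = [0.1, 0.9]
    return avg_loss, total_preds


try:
    valid_loss, _ = evaluate_without_return()
except TypeError as exc:
    # on recent Python versions: cannot unpack non-iterable NoneType object
    print(exc)

valid_loss, _ = evaluate_with_return()
print(valid_loss)  # 0.5
```

Applied to the script above, adding `return avg_loss, total_preds` as the last line of `evaluate()` (at the same indentation as `avg_loss = ...`) should let `valid_loss, _ = evaluate()` unpack its result the same way `train_loss, _ = train()` does.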